There’s a certain romanticism to A/B testing, like something from a Fabio novel.

We’ve all heard the story of the $300 million button, right? You know, the one where adding “checkout as a guest” increased sales by millions of dollars.

Ever since you heard about that story, you have been looking for your $300 million change, haven’t you? I have too.


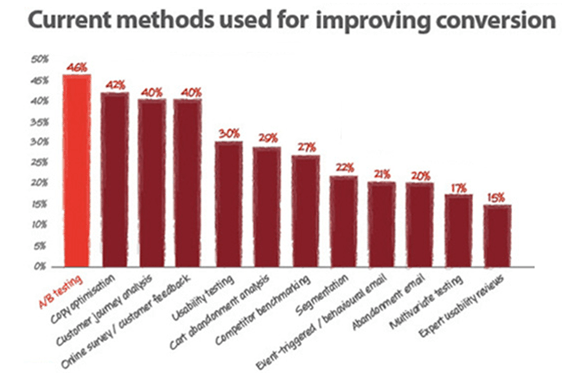

Of all the methods used in conversion rate optimization, A/B testing reigns supreme. For two years straight, it has been the most popular method, followed by copy optimization.

And yet, for every great A/B test story you hear, there are hundreds (or thousands) of failed A/B tests we don’t hear about. I mean, who wants to report a failed test, right?

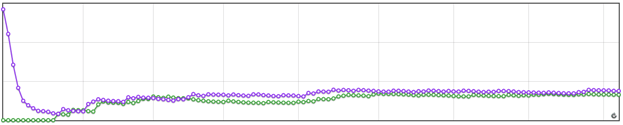

Take a look at the results of this A/B test:

That chart tells me one thing: I’m testing the wrong thing, and it has zero impact on my conversion rates!

Every marketer wants to share that one easy change they made to a website that generated thousands of additional dollars in revenue, which is probably why 63% of marketers test and optimize websites based on best practices.

Let me guess: you read a successful case study, copy the exact steps, and then hope for the same gain. Newsflash! That is not how conversion rate optimization works.

Heck, I know that all too well!

When I used social proof on a lead generation site, the conversion rate decreased and revenue dropped by $3,000 per month. In another test, sales fell by $10,000 per month when I removed distractions from an e-commerce store.

So before I go into detail about this specific test, I want to make it clear that you should not expect to replicate this test and see the same results.

Playing with Words

OK, back to the case study.

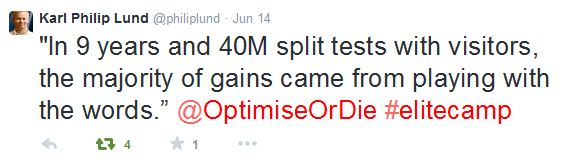

In June 2014, I came across a tweet by Karl Philip Lund, who attended the Digital Elite Camp conference, which is hosted by conversion rate expert Peep Laja.

The tweet, a quote from Craig Sullivan, who spoke at the event, said:

“Most gains come from playing with words.”

Interesting, right?

Fast forward a few months to September 2014, when a company I work with launched a free trial version of their CRM software: a free 30-day trial with no credit card required. We launched the free trial, promoted the launch on the home page, and kept the CTA simple: “Get a free trial.”

It looks OK, doesn’t it?

But that one quote from Craig Sullivan stayed with me: “Most gains come from playing with words.” I decided to combine the two most popular methods, A/B testing and copy optimization.

I knew we could do better with the CTA copy. To get started, we tested the CTA copy with three variations:

- Original: Get a free trial

- Variation 1: Start free trial

- Variation 2: Get free trial

- Variation 3: Free 30 day trial

Here’s how the CTAs look compared to each other:

Not much difference, right? Well, keep reading…

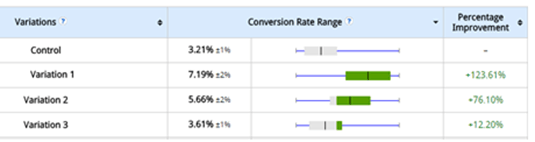

We ran this test for 30 days and here’s what happened:

With 99% statistical significance, variation one outperformed the original by 123%.

We were blown away by the results. More than twice as many people were now visiting the form after we added a sense of urgency to the CTA copy by using “Start” instead of “Get.”
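If you want to sanity-check numbers like these yourself, here is a minimal Python sketch of how a relative uplift and a confidence level can be computed with a pooled two-proportion z-test. The visitor and click counts are hypothetical (the raw counts from this test aren’t published in the post), the function names are made up for illustration, and tools such as VWO or Optimizely may use a different statistical method under the hood.

```python
from math import erf, sqrt


def relative_uplift(control_rate: float, variant_rate: float) -> float:
    """Relative improvement of the variant over the control, as a fraction."""
    return (variant_rate - control_rate) / control_rate


def two_proportion_confidence(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """One-sided confidence that B beats A, from a pooled two-proportion z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed through the error function.
    return 0.5 * (1 + erf(z / sqrt(2)))


# Hypothetical counts chosen for illustration only, NOT the real test data.
control_clicks, control_visitors = 30, 1000
variant_clicks, variant_visitors = 67, 1000

uplift = relative_uplift(
    control_clicks / control_visitors,
    variant_clicks / variant_visitors,
)
confidence = two_proportion_confidence(
    control_clicks, control_visitors, variant_clicks, variant_visitors
)
print(f"uplift: {uplift:.0%}, confidence: {confidence:.2%}")
```

The point of the sketch is that the uplift and the significance are two separate numbers: the first tells you how big the difference is, the second how likely it is to be real given the sample size.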

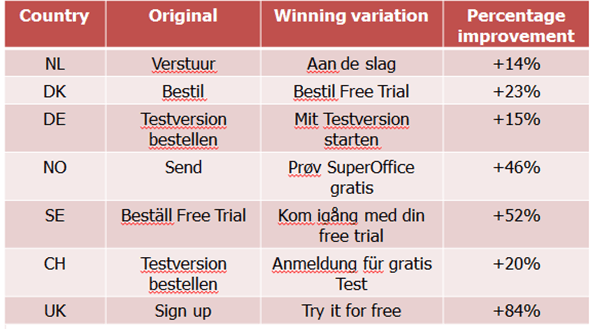

This was a good A/B test to share with the team, but this client has eight websites in six languages, so what about the other sites?

Localizing the A/B Test into Six Languages

The main website doesn’t have a free trial form of its own; instead, its page links to the form on each local website. So, we localized the form CTA copy instead of the home page CTA copy.

Here’s the CTA copy we used for the free trial form A/B test:

- Original: Get a free trial

- Variation 1: Sign up for free trial

- Variation 2: Start free trial

- Variation 3: Try it for free

Some countries included two or three additional variations of the CTA copy because of the way the copy was localized into the local language.

More than 3,000 visitors participated in the A/B test, and here’s what happened:

Every single website saw an increase in free trial sign-ups when we tested the CTA copy on the form!

Most people would be over the moon with that kind of increase in free trial sign-ups, right?

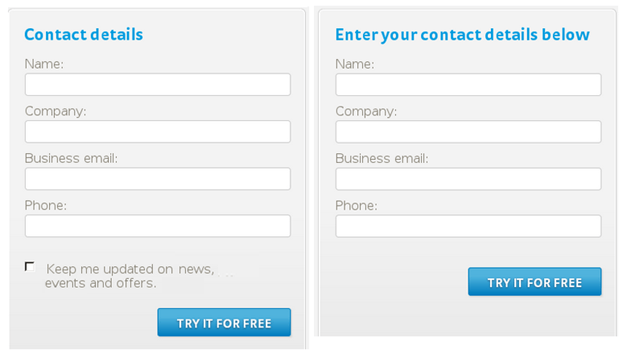

Again, I knew we could do better, so we ran a second A/B test on the free trial form. Below is a preview of how the free trial forms look side by side (original on the left, variation on the right):

The variation included three small changes:

- Form copy: We changed the form heading from “Contact details” to “Enter your contact details below.”

- Newsletter sign-up: We removed the “subscribe to newsletters” checkbox from above the call to action.

- Social sharing icons: We removed the social sharing icons from the page.

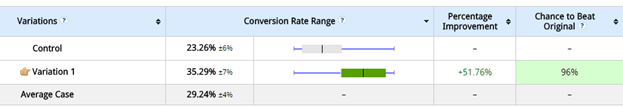

And here are the results:

With 96% statistical significance, the variation outperformed the original by 51%. The page now converts one in three visits to the free trial form.
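A quick back-of-the-envelope check shows what those two numbers imply about the original form. The sketch below assumes the 51% is a relative uplift and that “1 in 3” is the conversion rate of the winning variation; the function name is hypothetical.

```python
def implied_baseline(new_rate: float, uplift: float) -> float:
    """Work backwards from the new rate and the relative uplift to the old rate."""
    return new_rate / (1 + uplift)


new_rate = 1 / 3   # "1 in 3 visits" from the result above
uplift = 0.51      # 51% relative improvement (assumed to be relative, not absolute)

baseline = implied_baseline(new_rate, uplift)
print(f"implied original conversion rate: {baseline:.1%}")
```

Under those assumptions, the original form was converting roughly 22% of visits, or a bit better than 1 in 5.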

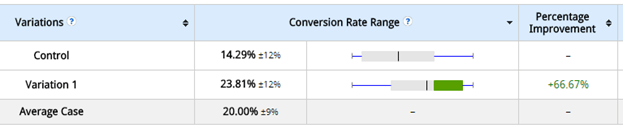

Better yet, we tested this on the Norwegian form and again, we saw an increase (as shown below)!

The A/B Test Results

You can call me biased, but I love case studies like these – easy to implement and extremely valuable to any business.

Research shows that one in three marketers claim that A/B testing is “too complex,” but CTA button testing is the least complex thing you can do. You don’t need to perform extensive analysis or create a conversion rate optimization framework for button testing. Just do it!

Both Optimizely and VWO are easy to set up and you can launch an A/B test quickly. This A/B test took less than 15 minutes to set up and launch, and while this test clearly isn’t a $300 million change, it has a significant impact.

What kind of impact?

A website lead is worth approximately $125, and we’ve not only doubled visits to the free trial sign-up page but also increased free trial sign-ups by 47% across all eight sites. These numbers don’t include the new A/B test results that we have yet to roll out in all countries.

But enough about percentages. We’re talking about high-quality leads, and these changes will double the number of leads we get, which, based on the average value of a website lead, is worth approximately $330,000!
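For anyone who wants to run the same math on their own numbers, here is a small sketch of the arithmetic. The lead count is not stated in this post; it is worked backwards from the two figures above, assuming the $330,000 is the value of the additional leads only. The function and constant names are hypothetical.

```python
LEAD_VALUE_USD = 125          # approximate value of one website lead (from the post)
QUOTED_TOTAL_USD = 330_000    # approximate total value quoted in the post


def value_of_extra_leads(baseline_leads: float, multiplier: float, lead_value: float) -> float:
    """Dollar value of the leads gained when lead volume grows by `multiplier`."""
    extra_leads = baseline_leads * (multiplier - 1)
    return extra_leads * lead_value


# Assumption: work backwards to the number of additional leads the total implies.
implied_extra_leads = QUOTED_TOTAL_USD / LEAD_VALUE_USD

# Doubling (multiplier = 2) means the extra leads equal the baseline leads.
check = value_of_extra_leads(implied_extra_leads, 2, LEAD_VALUE_USD)
print(f"implied additional leads: {implied_extra_leads:.0f}, value: ${check:,.0f}")
```

Swap in your own lead value and baseline volume to see what a doubling (or a more modest 47% lift, with a multiplier of 1.47) would be worth to you.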

Not bad for about eight seconds of work.

Conclusion

I mentioned earlier in the post that you cannot expect to replicate these results, but what you can replicate is the process of continuous optimization: testing winning variations against newer variations based on the data you collect. Just because you’ve run one A/B test doesn’t mean it’s over. Where there are higher gains to be made, there’s more testing involved.

What kind of wins have you seen from testing copy or call to action buttons?

Leave a comment and let me know.

Image Credits

Featured Image: Gustavo Frazao via Shutterstock

All screenshots taken June 2015

Disclosure: I am a paying customer of VWO, but have no other affiliations with the companies mentioned in this post.