Have you found yourself hitting a plateau with your organic growth?

High-quality content and links will take you far in SEO, but you should never overlook the power of technical SEO.

One of the most important skills to learn for 2019 is how to use technical SEO to think like Googlebot.

Before we dive into the fun stuff, it’s important to understand what Googlebot is, how it works, and why we need to understand it.

What Is Googlebot?

Googlebot is a web crawler (a.k.a., robot or spider) that scrapes data from webpages.

Googlebot is just one of many web crawlers. Every search engine has its own branded spider. In the SEO world, we refer to these branded bot names as “user agents.”

We will get into user agents later, but for now, just understand that when we say “user agent,” we mean a specific web-crawling bot. Some of the most common user agents include:

- Googlebot – Google

- Bingbot – Bing

- Slurp Bot – Yahoo

- Alexa Crawler – Amazon Alexa

- DuckDuckBot – DuckDuckGo
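To make the idea concrete, here is a minimal Python sketch that maps a raw User-Agent header to the search engine behind it. It assumes the tokens below appear in each bot’s user-agent string; the `identify_crawler` helper is hypothetical, and Alexa’s crawler is left out. User-agent strings are easy to spoof, so treat a match as a hint only. Google’s own guidance is to confirm genuine Googlebot traffic with a reverse DNS lookup.

```python
from typing import Optional

# Substrings that identify common crawlers in a User-Agent header.
# Assumption: each bot includes this token in its user-agent string.
CRAWLER_TOKENS = {
    "googlebot": "Google",
    "bingbot": "Bing",
    "slurp": "Yahoo",
    "duckduckbot": "DuckDuckGo",
}


def identify_crawler(user_agent: str) -> Optional[str]:
    """Return the search engine behind a user agent, or None for other clients."""
    ua = user_agent.lower()  # match tokens case-insensitively
    for token, engine in CRAWLER_TOKENS.items():
        if token in ua:
            return engine
    return None


print(identify_crawler(
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
))  # Google
```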

How Does Googlebot Work?

We can’t start to optimize for Googlebot until we understand how it discovers and reads webpages, and how Google ranks them.

How Google’s Crawler Discovers Webpages

Short answer: Links, sitemaps, and fetch requests.

Long answer: The fastest way for you to get Google to crawl your site is to create a new property in Search Console and submit your sitemap. However, that’s not the whole picture.

While sitemaps are a great way to get Google to crawl your website, a sitemap alone does not account for PageRank.

Internal linking is a recommended method for telling Google which pages are related and hold value. There are many great articles published across the web about PageRank and internal linking, so I won’t get into it now.

Google can also discover your webpages from Google My Business listings, directories, and links from other websites.

This is a simplified version of how Googlebot works. To learn more, you can read Google’s documentation on their crawler.
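Link-based discovery can be sketched in a few lines of Python: parse a page’s HTML, collect every `<a href>`, and resolve each one against the page URL. This is a simplified illustration using only the standard library, not how Googlebot is actually built; `discover_links` is a hypothetical helper, and a real crawler would also respect robots.txt and manage its crawl budget.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkCollector(HTMLParser):
    """Collects absolute URLs from every <a href="..."> on a page."""

    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.links: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop #fragments (same page, different anchor).
            absolute, _fragment = urldefrag(urljoin(self.page_url, href))
            self.links.append(absolute)


def discover_links(page_url: str, html: str) -> list[str]:
    """Return the unique links found on a page, in document order."""
    collector = LinkCollector(page_url)
    collector.feed(html)
    return list(dict.fromkeys(collector.links))


html = '<a href="/about/">About</a> <a href="/about/#team">Team</a> <a href="https://other.example/">Partner</a>'
print(discover_links("https://www.example.com/blog/", html))
# ['https://www.example.com/about/', 'https://other.example/']
```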

How Googlebot Reads Webpages

Google has come a long way with its page rendering. The goal of Googlebot is to render a webpage the same way a user would see it.

To test how Google views your page, use the Fetch and Render tool in Search Console. It shows the Googlebot view side by side with the user view, which is useful for spotting anything Googlebot can’t see or render on your webpages.

Technical Ranking Factors

Just like traditional SEO, there is no silver bullet for technical SEO. All 200+ ranking factors are important!

If you’re a technical SEO professional thinking about the future of SEO, then the biggest ranking factors to pay attention to revolve around user experience.

Why Should We Think Like Googlebot?

When Google tells us to make a great site, they mean it. Yes, that’s a vague statement from Google, but at the same time, it’s very accurate.

If you can satisfy users with an intuitive and helpful website, while also appeasing Googlebot’s requirements, you may experience more organic growth.

User Experience vs. Crawler Experience

When creating a website, who are you looking to satisfy? Users or Googlebot?

Short answer: Both!

Long answer: This is a hot debate that can cause tension between UX designers, web developers, and SEO pros. However, this is also an opportunity for us to work together to better understand the balance between user experience and crawler experience.

UX designers typically have users’ best interest in mind, while SEO professionals are looking to satisfy Google. In the middle, we have web developers trying to create the best of both worlds.

As SEO professionals, we need to learn the importance of each area of the web experience.

Yes, we should be optimizing for the best user experience. However, we should also optimize our websites for Googlebot (and other search engines).

Luckily, Google is very user-focused. Most modern SEO tactics are focused on providing a good user experience.

The following 10 Googlebot optimization tips should help you win over your UX designer and web developer at the same time.

1. Robots.txt

The robots.txt file is a text file placed in the root directory of a website. It is one of the first things Googlebot looks for when crawling a site. It’s highly recommended to add a robots.txt file to your site and include a link to your sitemap.xml in it.

There are many ways to optimize your robots.txt file, but it’s important to use caution when doing so.

When moving a dev site to the live site, a developer might accidentally leave a sitewide disallow in robots.txt, blocking all search engines from crawling the site. Even after this is corrected, it could take a few weeks for organic traffic and rankings to return.
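One way to catch that mistake is to check the file before and after a launch. The sketch below uses Python’s standard `urllib.robotparser` module to compare a typical live robots.txt with a leftover dev-site file; both file contents and the example.com URLs are hypothetical. Google’s own parser may behave differently in edge cases, so treat this as a quick sanity check rather than a definitive test.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical live robots.txt: block the admin area, allow everything else,
# and point crawlers to the sitemap.
LIVE_ROBOTS = """\
User-agent: *
Disallow: /wp-admin/

Sitemap: https://www.example.com/sitemap.xml
"""

# Hypothetical file left over from a dev site: blocks the entire site.
DEV_ROBOTS = """\
User-agent: *
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


live = build_parser(LIVE_ROBOTS)
dev = build_parser(DEV_ROBOTS)

page = "https://www.example.com/blog/technical-seo/"
print(live.can_fetch("Googlebot", page))  # True
print(dev.can_fetch("Googlebot", page))   # False: sitewide disallow
print(live.site_maps())  # ['https://www.example.com/sitemap.xml'] (Python 3.8+)
```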

There are many tips and tutorials on how to optimize your robots.txt file. Do your research before attempting to edit your file. Don’t forget to track your results!

2. Sitemap.xml

Sitemaps are a key method for Googlebot to find pages on your website and are considered an important ranking factor.

Here are a few sitemap optimization tips:

- Only have one sitemap index.

- Separate blogs and general pages into separate sitemaps, then link to those on your sitemap index.

- Don’t make every single page a high priority.

- Remove 404 and 301 pages from your sitemap.

- Submit your sitemap.xml file to Google Search Console and monitor the crawl.
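Several of these tips can be combined in one small script. The Python sketch below, using only the standard library, writes separate blog and page sitemaps, skips any URL that doesn’t return a 200 status, and lists both child sitemaps in a single sitemap index. The URLs and status codes are hypothetical stand-ins for a crawl export, and the optional `<priority>` tag is simply left out rather than set high on every page.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_urlset(pages: dict[str, int]) -> str:
    """Build a <urlset> sitemap from {url: HTTP status}, keeping only 200 pages."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, status in pages.items():
        if status != 200:
            continue  # leave 404s and 301s out of the sitemap
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_sitemap_index(sitemap_urls: list[str]) -> str:
    """Build the single <sitemapindex> that points to each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for url in sitemap_urls:
        sitemap_el = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap_el, "loc").text = url
    return ET.tostring(index, encoding="unicode")


# Hypothetical crawl results: URL -> HTTP status code.
blog_pages = {
    "https://www.example.com/blog/googlebot-guide/": 200,
    "https://www.example.com/blog/old-post/": 301,
}
general_pages = {
    "https://www.example.com/": 200,
    "https://www.example.com/retired-offer/": 404,
}

blog_xml = build_urlset(blog_pages)      # save as sitemap-blog.xml
pages_xml = build_urlset(general_pages)  # save as sitemap-pages.xml
index_xml = build_sitemap_index([
    "https://www.example.com/sitemap-blog.xml",
    "https://www.example.com/sitemap-pages.xml",
])
```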

3. Site Speed

Page load speed has become one of the most important ranking factors, especially for mobile devices. If your site loads too slowly, Google may lower your rankings.

An easy way to find out whether Googlebot thinks your website loads too slowly is to test your site speed with one of the many free tools available.

Many of these tools will provide recommendations that you can send to your developers.

4. Schema

Adding structured data to your website can help Googlebot better understand the context of your individual web pages and website as a whole. However, it’s important that you follow Google’s guidelines.

For efficiency, it’s recommended that you use JSON-LD to implement structured data markup. Google has even noted that JSON-LD is its preferred format.
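As an illustration, here is a minimal Python sketch that generates a JSON-LD block for a blog post using schema.org’s `Article` type. The helper name and sample values are hypothetical, and the properties you include should follow Google’s structured data guidelines for the content type you’re marking up.

```python
import json


def article_jsonld(headline: str, author: str, date_published: str) -> str:
    """Return a <script> tag containing schema.org Article markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author},
        "datePublished": date_published,  # ISO 8601 date
    }
    body = json.dumps(data, indent=2)
    # Escape "</" so a value containing "</script>" can't close the tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = body.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(article_jsonld("How to Think Like Googlebot", "Jane Doe", "2019-01-15"))
```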

5. Canonicalization

A big problem for large sites, especially ecommerce, is the issue of duplicate webpages.

There are many practical reasons to have duplicate webpages, such as versions of the same page in different languages.

If you’re running a site with duplicate pages, it’s crucial that you identify your preferred webpage with a canonical tag and, for language versions, hreflang attributes.
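For a page that exists in several languages, the `<head>` tags might be generated along the lines of the Python sketch below. It assumes each language version canonicalizes to itself and lists every version, plus an `x-default` fallback, as an hreflang alternate; the URLs and the `head_tags` helper are hypothetical. Google expects hreflang annotations to be reciprocal, so every version should carry the same set of alternates.

```python
from html import escape


def head_tags(current_lang: str, versions: dict[str, str], default_lang: str) -> str:
    """Return canonical and hreflang <link> tags for one language version of a page."""
    lines = [f'<link rel="canonical" href="{escape(versions[current_lang])}">']
    # Every version lists all alternates, including itself, so the set is reciprocal.
    for lang, url in sorted(versions.items()):
        lines.append(f'<link rel="alternate" hreflang="{lang}" href="{escape(url)}">')
    lines.append(
        f'<link rel="alternate" hreflang="x-default" href="{escape(versions[default_lang])}">'
    )
    return "\n".join(lines)


versions = {
    "en": "https://www.example.com/en/widgets/",
    "de": "https://www.example.com/de/widgets/",
}
print(head_tags("de", versions, default_lang="en"))
```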

6. URL Taxonomy

A clean, well-defined URL structure has been shown to lead to higher rankings and improve user experience. Setting parent pages allows Googlebot to better understand how each page relates to the others.

However, if you have pages that are fairly old and ranking well, Google’s John Mueller does not recommend changing the URL. Clean URL taxonomy is really something that needs to be established from the beginning of the site’s development.

If you absolutely believe that optimizing your URLs will help your site, make sure you set up proper 301 redirects and update your sitemap.xml.
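If you do restructure, a small script can help keep redirects and the sitemap in sync. This Python sketch assumes a hypothetical old-to-new URL mapping: `parent_url` derives a page’s parent from its folder-style path (the hierarchy a clean taxonomy makes explicit), and `migrate_sitemap` swaps each migrated URL in the sitemap for its 301 target. Both helpers are illustrative; the 301s themselves are configured in your server or CMS and aren’t shown.

```python
from typing import Optional
from urllib.parse import urlsplit, urlunsplit


def parent_url(url: str) -> Optional[str]:
    """Return the parent page of a folder-style URL, or None for the home page."""
    parts = urlsplit(url)
    segments = [s for s in parts.path.split("/") if s]
    if not segments:
        return None  # the home page has no parent
    parent_path = "/" + "".join(s + "/" for s in segments[:-1])
    return urlunsplit((parts.scheme, parts.netloc, parent_path, "", ""))


def migrate_sitemap(sitemap_urls: list[str], redirects: dict[str, str]) -> list[str]:
    """Replace old URLs with their 301 targets, dropping duplicates but keeping order."""
    return list(dict.fromkeys(redirects.get(url, url) for url in sitemap_urls))


# Hypothetical 301 map: old URL -> new URL.
redirects = {
    "https://www.example.com/running-shoes.html": "https://www.example.com/shoes/running/",
}
sitemap = [
    "https://www.example.com/shoes/",
    "https://www.example.com/running-shoes.html",
]
print(migrate_sitemap(sitemap, redirects))
# ['https://www.example.com/shoes/', 'https://www.example.com/shoes/running/']
print(parent_url("https://www.example.com/shoes/running/"))
# https://www.example.com/shoes/
```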

7. JavaScript Loading

While static HTML pages are arguably easier to rank, JavaScript allows websites to provide more creative user experiences through dynamic rendering. In 2018, Google devoted a lot of resources to improving JavaScript rendering.

In a recent Q&A session with John Mueller, Mueller stated that Google plans to continue focusing on JavaScript rendering in 2019. If your site relies heavily on dynamic rendering via JavaScript, make sure your developers are following Google’s recommendations on best practices.

8. Images

Google has been hinting at the importance of image optimization for a long time, but has been speaking more about it in recent months. Optimizing images can help Googlebot understand how your images relate to and enhance your content.

If you’re looking for some quick wins on optimizing your images, I recommend:

- Image file name: Describe what the image is with as few words as possible.

- Image alt text: You may reuse the image file name, but you can also use more words here.

- Structured Data: You can add schema markup to describe the images on the page.

- Image Sitemap: Google recommends adding a separate image sitemap to help it crawl your images.
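An image sitemap uses Google’s image extension namespace alongside the standard sitemap schema. The Python sketch below builds one from a hypothetical mapping of page URLs to the images on each page; the URLs and the `image_sitemap` helper are illustrative, and the `default_namespace` argument requires Python 3.8 or later.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"
ET.register_namespace("image", IMAGE_NS)  # serialize as image:image, image:loc


def image_sitemap(pages: dict[str, list[str]]) -> str:
    """Build a sitemap listing each page and the images that appear on it."""
    urlset = ET.Element(f"{{{SITEMAP_NS}}}urlset")
    for page_url, image_urls in pages.items():
        url_el = ET.SubElement(urlset, f"{{{SITEMAP_NS}}}url")
        ET.SubElement(url_el, f"{{{SITEMAP_NS}}}loc").text = page_url
        for image_url in image_urls:
            image_el = ET.SubElement(url_el, f"{{{IMAGE_NS}}}image")
            ET.SubElement(image_el, f"{{{IMAGE_NS}}}loc").text = image_url
    # The sitemap namespace becomes the default xmlns; images get the image: prefix.
    return ET.tostring(urlset, encoding="unicode", default_namespace=SITEMAP_NS)


print(image_sitemap({
    "https://www.example.com/shoes/running/": [
        "https://www.example.com/images/blue-running-shoe.jpg",
    ],
}))
```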

9. Broken Links & Redirect Loops

We all know broken links are bad, and some SEOs have claimed that broken links can waste crawl budget. However, John Mueller has stated that broken links do not reduce crawl budget.

Given the mixed information, I believe we should play it safe and clean up all broken links. Check Google Search Console or your favorite crawling tool to find broken links on your site!

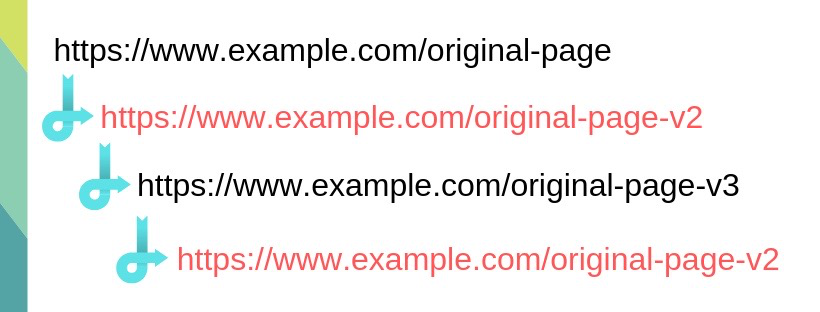

Redirect loops are another phenomenon that is common with older sites. A redirect chain occurs when there are multiple steps within a redirect; it becomes a loop when one of those steps points back to an earlier URL.

Version 3 of the redirect chain redirects back to the previous page (v2), which continues to redirect back to version 3, which causes the redirect loop.

Search engines often have a hard time crawling redirect loops and may abandon the crawl altogether. The best action to take here is to update the original link on each page so it points directly to the final destination URL.
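Given a redirect map exported from your server config or crawling tool, the Python sketch below follows each hop, returns the whole chain for a normal redirect (its last URL is the one your internal links should point to), and raises an error as soon as a hop returns to a URL it has already visited. The /v1, /v2, /v3 paths mirror the loop in the screenshot; the `resolve_redirect` helper and its `max_hops` cap are hypothetical choices.

```python
def resolve_redirect(start: str, redirects: dict[str, str], max_hops: int = 10) -> list[str]:
    """Follow redirects from `start` and return the full chain of URLs.

    The last item is the final destination. Raises ValueError on a loop
    or when the chain is longer than `max_hops`.
    """
    chain = [start]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        if next_url in chain:
            raise ValueError("Redirect loop: " + " -> ".join(chain + [next_url]))
        chain.append(next_url)
        if len(chain) - 1 > max_hops:
            raise ValueError(f"More than {max_hops} redirects starting at {start}")
    return chain


# Hypothetical redirect maps (old URL -> target URL).
chain_map = {"/v1": "/v2", "/v2": "/v3"}
loop_map = {"/v1": "/v2", "/v2": "/v3", "/v3": "/v2"}

print(resolve_redirect("/v1", chain_map))  # ['/v1', '/v2', '/v3']: link straight to /v3
try:
    resolve_redirect("/v1", loop_map)
except ValueError as err:
    print(err)  # Redirect loop: /v1 -> /v2 -> /v3 -> /v2
```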

10. Titles & Meta Descriptions

This may be a bit old hat for many SEO professionals, but well-optimized titles and meta descriptions have been shown to lead to higher rankings and CTR in the SERP.

Yes, this is part of the fundamentals of SEO, but it’s still worth including because Googlebot does read these. There are many theories about best practices for writing them, but my recommendations are pretty simple:

- I prefer pipes (|) instead of hyphens (-), but Googlebot doesn’t care.

- In your meta titles, include your brand name on your home, about, and contact pages. In most cases, the other page types don’t matter as much.

- Don’t push it on length; titles that run too long get truncated in the SERP.

- For your meta description, copy your first paragraph and edit to fit within the 150-160 character length range. If that doesn’t accurately describe your page, then you should consider working on your body content.

- Test! See if Google keeps your preferred titles and meta descriptions.
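The title and meta description tips above can be turned into a small helper. This Python sketch is one way to apply them, not a rule Google enforces: `build_title` appends the brand with a pipe only on home, about, and contact pages, and `meta_description` collapses whitespace in a page’s first paragraph and trims it at a word boundary to at most 160 characters. The page-type labels and the 160-character cap are assumptions based on the recommendations above.

```python
BRANDED_PAGE_TYPES = {"home", "about", "contact"}  # hypothetical page-type labels


def build_title(page_title: str, brand: str, page_type: str) -> str:
    """Append the brand name with a pipe only on home, about, and contact pages."""
    if page_type in BRANDED_PAGE_TYPES:
        return f"{page_title} | {brand}"
    return page_title


def meta_description(first_paragraph: str, max_len: int = 160) -> str:
    """Trim a paragraph to at most max_len characters at a word boundary."""
    text = " ".join(first_paragraph.split())  # collapse runs of whitespace
    if len(text) <= max_len:
        return text
    cut = text[: max_len - 1]  # reserve one character for the ellipsis
    if " " in cut:
        cut = cut[: cut.rfind(" ")]  # don't split a word in half
    return cut.rstrip(" ,;:") + "…"


print(build_title("Contact", "Example Co", "contact"))  # Contact | Example Co
```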

Summary

When it comes to technical SEO and optimizing for Googlebot, there are many things to pay attention to. Much of it requires research, and I recommend asking your colleagues about their experience before implementing changes to your site.

While trailblazing new tactics is exciting, it has the potential to lead to a drop in organic traffic. A good rule of thumb is to test these tactics one at a time, waiting a few weeks between changes.

This will allow Googlebot to have some time to understand sitewide changes and better categorize your site within the index.

Image Credits

Screenshot taken by author, January 2019