Google crawls only about half the pages on large enterprise websites, so the rest can’t be added to the index. Pages that aren’t indexed can’t rank or generate organic traffic and revenue for the business.

SEO for large websites can’t start with rankings and keywords. If it does, it will miss an enormous opportunity.

Enterprise website owners need to go deeper and focus on the entire search process, from the site’s technical foundation and how search engines crawl it to how its real audience engages with it.

On September 11, I moderated a sponsored SEJ webinar presented by Kameron Jenkins, Director of Brand Content and Communications at Botify.

Jenkins shared how enterprises can gain massive wins simply by removing the barriers that stand between Google and their website.

Here’s a recap of the webinar presentation.

Most enterprises are so focused on getting rankings and traffic from their websites that they lose sight of how that traffic is acquired in the first place, which starts with how Google crawls a website.

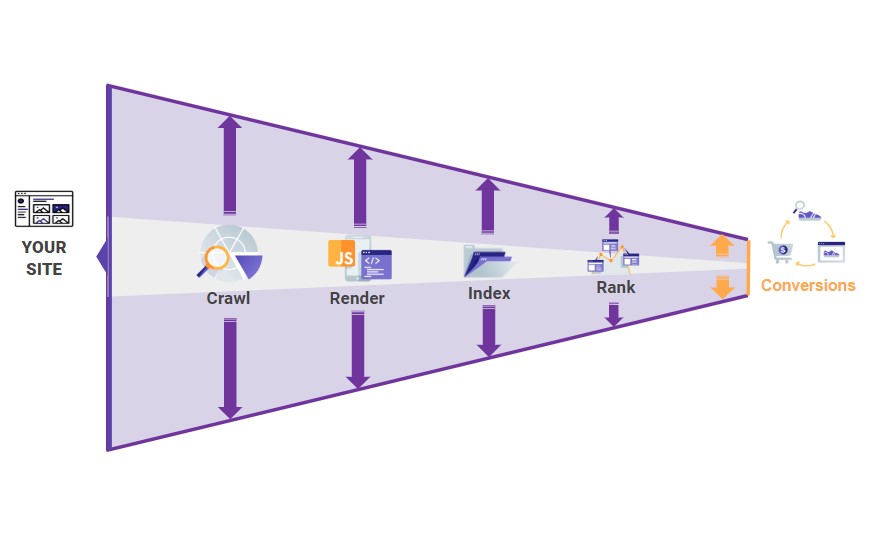

Enterprises need to be thinking about SEO holistically, from crawling to conversions, if they want to be effective.

In 2018, Botify conducted a comprehensive study of how Google crawls the web. It analyzed 413 million crawled pages and 6 billion Googlebot requests pulled from the log files of the websites studied.

The findings revealed that Google was missing 51% of those pages, even though they were “compliant” pages (pages meant to be indexed).

Why is this happening?

For one thing, the web is huge and getting bigger every day. There are trillions of other pages Google needs to get to, so it caps how much time it spends and how many pages it crawls on your site before moving on.

The modern web is also more complex than ever, so Google spends more time crawling and rendering resources such as JavaScript.

What Botify found in the data helped validate its hypothesis that Google treats large websites differently than small websites.

The graph here shows that as websites get bigger (in terms of how many URLs they have), a smaller percentage of their pages get crawled and a smaller percentage get organic search traffic.

Now, is it just that Google doesn’t like big websites?

Not at all. It’s just that due to the size and complexity of the web, Google is strapped for resources.

Google has no trouble getting through the content on smaller sites, but those limits matter a great deal for large sites with millions of URLs.

Botify also found that the larger the website, the more crawl budget optimization matters. Anything that takes up Google’s time (such as issues that increase page load time), multiplied across millions of pages, drastically reduced how many pages Google was able to get through.

So while a small business website might not need to worry about crawl budget optimization and can jump straight into keyword optimization and rankings, larger sites simply don’t have that luxury.

Enterprise Websites Need a Different Approach

We need to acknowledge that enterprise websites require a different approach to SEO.

So what does that look like exactly?

Instead of starting at the rankings stage, as many SEO professionals are used to doing, you’ve got to zoom out.

SEO professionals who work on large websites need to look at the full funnel.

Enterprise sites simply can’t start with keywords and rankings or they’ll miss an enormous opportunity.

How a Crawling-to-Conversions Process Works

Search follows a sequence: it starts with your website, then how Google crawls it, then how it’s added to the index, then how searchers find it and interact with it.

You can use that same logical sequence that search has and mirror it in how you evaluate your website for SEO.

1. Understand What Pages Exist on Your Website

Start by understanding what pages exist on your website.

This step is usually eye-opening, especially for people who work on ecommerce websites, where sorting and filtering options can create many different URLs for a single page, each with the same content.

You might not have an accurate idea of how many pages or what pages are actually in your site structure until you crawl it.
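As a rough illustration of how sorting and filtering multiply URLs, the Python sketch below groups crawled URLs by what remains after stripping sort and filter parameters. The parameter names in `FACET_PARAMS` and the example URLs are hypothetical; a real site needs its own list, and some parameters (pagination, for example) do change the content.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical sort/filter parameters; substitute the ones your site actually uses.
FACET_PARAMS = {"sort", "order", "color", "size"}


def strip_facets(url: str) -> str:
    """Drop sort/filter parameters and sort the remaining ones for a stable key."""
    parts = urlsplit(url)
    kept = sorted((k, v) for k, v in parse_qsl(parts.query) if k not in FACET_PARAMS)
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))


def group_variants(urls):
    """Map each stripped URL to every crawled URL that collapses onto it."""
    groups = defaultdict(list)
    for url in urls:
        groups[strip_facets(url)].append(url)
    return dict(groups)


crawled = [
    "https://shop.example.com/shoes",
    "https://shop.example.com/shoes?sort=price",
    "https://shop.example.com/shoes?color=red&sort=price",
    "https://shop.example.com/boots?size=9",
]
for base, variants in group_variants(crawled).items():
    print(f"{base}: {len(variants)} crawled URL(s)")
```

In this toy example, three crawled URLs collapse onto a single shoes page; across thousands of categories, that kind of multiplication is what makes the inventory step so revealing.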

2. Look at Which of Those Pages Google Is Actually Visiting

Next, look at which of those pages Google is actually visiting by analyzing your log files.

A crawl goes through your site the way Google would if it could devote unlimited time to exploring it. Google doesn’t have unlimited time, though, so this step is important: it shows you which pages Google is visiting and which it’s ignoring.
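A minimal sketch of that log analysis in Python, assuming your servers write the common Apache/Nginx “combined” log format. It counts requests per URL path whose user agent contains “Googlebot.” User-agent strings can be spoofed, so a production version should also verify the requesting IPs (Google documents a reverse DNS check for this). The sample log lines are made up.

```python
import re
from collections import Counter

# Assumes the Apache/Nginx "combined" log format; adjust the pattern for other formats.
LOG_LINE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per URL path whose user agent claims to be Googlebot.

    The user agent is self-reported, so this does not prove a request came
    from Google; verify IPs separately before relying on the numbers.
    """
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match and "Googlebot" in match.group("agent"):
            hits[match.group("path")] += 1
    return hits


sample_log = [
    '66.249.66.1 - - [01/Oct/2023:10:00:00 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [01/Oct/2023:10:00:02 +0000] "GET /boots HTTP/1.1" 200 4096 "-" '
    '"Mozilla/5.0 (Windows NT 10.0; Win64; x64)"',
    '66.249.66.1 - - [01/Oct/2023:10:05:00 +0000] "GET /shoes HTTP/1.1" 200 5120 "-" '
    '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
]
print(googlebot_hits(sample_log))
```

On real sites, logs are large enough that you would stream them line by line (the function already accepts any iterable, such as an open file).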

3. Know How Your Indexed Pages Are Performing in SERPs

In those first two steps, you’ll likely find pages Google is visiting that you don’t want it to visit, as well as important pages it’s missing.

To ensure that Google is adding your key pages to its index, you can:

- Adjust your robots.txt file to direct Google away from certain pages.

- Update your sitemaps to include your key pages.

- Remove bad URLs, such as those that return 404 errors or redirect.

- Improve internal linking to your key pages and reduce their page depth.

This matters because people can find a page via search only once it has been indexed.

In this third step, you’ll want to know how your indexed pages are performing in SERPs:

- How many impressions they’re getting.

- What keywords they’re showing up for.

- What position they’re ranking in.

4. Evaluate How Searchers Are Interacting with Your Pages

Once a page is showing up in SERPs, searchers can find it and click on it, so the last step is to evaluate how searchers are interacting with your pages:

- How many visits they’re getting (if any).

- What the bounce rate is.

- What the conversion rate is.

Why Implement a Crawling-to-Conversions Framework for Enterprise SEO

The beauty of this framework is that when you improve one thing, it has a ripple effect downstream.

For example, if you get Google to crawl more of your pages, you’ll have more pages in the index, and more pages in the index means more opportunities to earn organic traffic and revenue.

People typically think of rankings and traffic as the “growth-focused” stuff and things like crawl optimization as the technical/QA stuff, but the technical fixes lead to growth.

They all affect each other; you just have to keep the full picture in mind or you might miss the opportunity.

Takeaways & Tips

Takeaway 1: Big Sites Have Crawl Issues

First, remember that large enterprise websites need a different framework because they face different challenges than smaller websites do.

Google completely misses about half of the key pages on these websites, and in many cases a lot more.

Takeaway 2: They Can’t Afford to Start with Keywords

Because of that reality, SEO professionals who work on large websites can’t afford to start with keywords, on-page optimization, and rankings.

If they do, they’ll miss the huge opportunity that comes from removing issues further up the funnel, in the crawl/render/index stage.

So what can you do right now?

Tip 1: Arm Yourself with All the Data

The first step is just to make sure you’re armed with all the data. You can’t evaluate and improve your full funnel unless you have a clear picture of your site at each phase of the search process.

- Crawl your site and take an inventory of everything that’s there.

- Access your log files so you can see which pages Google is visiting and which they’re missing.

- Know how your indexed pages are performing in SERPs (impressions, the queries you’re showing up for, position, and CTR), which you can get from Google Search Console.

- Get your website analytics data so you can know what searchers are doing once they land on your pages:

  - How much time are they spending?

  - What’s the bounce rate?

  - Are they converting?

Tip 2: Look at the Full Picture When Auditing

Without all these data points, and without unifying them, you won’t have a full picture of your site in the search process.

If you only had keyword data, for example, you might know what position a page ranks in but not why it ranks there, which you could uncover by looking at that page’s crawl or log data.

Having all this data and unifying it helps show you the why behind the what.
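One way to picture “unifying” the data is a join on URL. The sketch below merges a crawl inventory, Googlebot hit counts, Search Console rows, and analytics rows into one record per page, then reports the earliest funnel stage where each page drops out. The row field names and the `diagnose` rules are hypothetical simplifications, not a standard export schema or any vendor’s logic.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PageRecord:
    url: str
    in_crawl: bool = False    # found when crawling the site structure
    googlebot_hits: int = 0   # from log files
    impressions: int = 0      # from Search Console
    clicks: int = 0
    position: Optional[float] = None
    sessions: int = 0         # from analytics
    conversions: int = 0

    @property
    def ctr(self) -> float:
        return self.clicks / self.impressions if self.impressions else 0.0


def unify(crawled_urls, log_hits, gsc_rows, analytics_rows):
    """Join the four data sources on URL (row field names are assumed)."""
    records = {}

    def record(url):
        if url not in records:
            records[url] = PageRecord(url)
        return records[url]

    for url in crawled_urls:
        record(url).in_crawl = True
    for url, hits in log_hits.items():
        record(url).googlebot_hits = hits
    for row in gsc_rows:
        r = record(row["url"])
        r.impressions, r.clicks, r.position = row["impressions"], row["clicks"], row["position"]
    for row in analytics_rows:
        r = record(row["url"])
        r.sessions, r.conversions = row["sessions"], row["conversions"]
    return records


def diagnose(r: PageRecord) -> str:
    """Hypothetical rules: name the earliest stage where the page drops out."""
    if not r.in_crawl:
        return "not found in site structure"
    if r.googlebot_hits == 0:
        return "not visited by Googlebot"
    if r.impressions == 0:
        return "crawled but not showing in search"
    if r.sessions == 0:
        return "showing in search but not earning visits"
    return "earning visits"


pages = unify(
    ["/shoes", "/boots"],
    {"/shoes": 3},
    [{"url": "/shoes", "impressions": 200, "clicks": 10, "position": 4.2}],
    [{"url": "/shoes", "sessions": 12, "conversions": 1}],
)
for page in pages.values():
    print(page.url, f"CTR={page.ctr:.1%}", diagnose(page))
```

Here /boots has no ranking data at all, and the log data supplies the why: Googlebot never requested it, so the fix belongs at the crawl stage rather than in on-page optimization.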

Tip 3: Get Yourself a Seat at the Table

The final tip is to get yourself a seat at the table. Easier said than done, but the way you’re structured and valued in your organization makes all the difference.

If your SEO department sits in a silo, away from your development team or even your marketing team, decisions that hurt your search performance will keep being made.

If you can’t get the organizational shift required to integrate SEO into your product and marketing teams, start by developing relationships with those teams.

Make sure they know who you are, and get to know them. Develop friendships.

When you already have a relationship, it’s a lot easier to share your point of view, as well as learn about their point of view.

Help connect the dots to show them how certain changes (that were probably made to improve one thing) actually ended up hurting search performance, and present an alternative.

They’ll learn to lean on you and, hopefully, start asking for your opinion before deploying these changes.

Q&A

Here are just some of the attendee questions answered by Kameron Jenkins.

Q: We’re about to launch an enterprise site from scratch with thousands of landing pages. Do you have any recommendations on getting those indexed quicker?

Kameron Jenkins (KJ): Botify has a tool called “sitemap generator” that’s perfect for this. After you’ve crawled your site to get a list of your pages, you can filter to view only your “compliant” pages (pages you want in the index, like those that aren’t marked noindex, have a 200 status code, etc.).

Botify lets you export that list of pages into a sitemap.xml file that you can submit to Google Search Console. If you have a very large website with more than 50,000 URLs, the URLs will automatically be split into multiple sitemaps, as per Google’s sitemap guidelines.
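Outside of any particular tool, the same idea can be sketched in Python: keep only pages that return a 200 status and aren’t marked noindex (the two criteria named above; real compliance checks usually include more), then split them into sitemap files of at most 50,000 URLs plus a sitemap index that points to them. `CrawledPage`, `build_sitemaps`, and the `https://www.example.com/` URLs are hypothetical.

```python
from dataclasses import dataclass
from xml.sax.saxutils import escape

MAX_URLS_PER_SITEMAP = 50_000  # per-file URL limit in the sitemaps.org protocol
NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


@dataclass
class CrawledPage:
    url: str
    status: int
    noindex: bool


def compliant_urls(pages):
    """Keep pages that return 200 and are not marked noindex."""
    return [p.url for p in pages if p.status == 200 and not p.noindex]


def build_sitemaps(urls, base_url, max_urls=MAX_URLS_PER_SITEMAP):
    """Return ({filename: sitemap_xml}, sitemap_index_xml).

    Only the URL-count limit is enforced; the protocol's 50 MB
    uncompressed file-size limit is not checked here.
    """
    files = {}
    for start in range(0, len(urls), max_urls):
        chunk = urls[start:start + max_urls]
        body = "".join(f"<url><loc>{escape(u)}</loc></url>" for u in chunk)
        name = f"sitemap-{start // max_urls + 1}.xml"
        files[name] = f'<?xml version="1.0" encoding="UTF-8"?><urlset xmlns="{NS}">{body}</urlset>'
    index_body = "".join(f"<sitemap><loc>{escape(base_url + name)}</loc></sitemap>" for name in files)
    index = f'<?xml version="1.0" encoding="UTF-8"?><sitemapindex xmlns="{NS}">{index_body}</sitemapindex>'
    return files, index


crawl = [
    CrawledPage("https://www.example.com/shoes", 200, False),
    CrawledPage("https://www.example.com/boots", 200, False),
    CrawledPage("https://www.example.com/old-page", 404, False),
    CrawledPage("https://www.example.com/cart", 200, True),
]
# max_urls=1 only so the tiny example produces more than one file.
files, index = build_sitemaps(compliant_urls(crawl), "https://www.example.com/", max_urls=1)
print(sorted(files))
print(index)
```

With the default limit, 120,001 compliant URLs would produce three sitemap files, and the index file is what you would submit in Search Console.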

To make sure Google is actually crawling your submitted URLs, you can view Botify’s “URLs crawled by Google by Day” report. If your URLs still aren’t being crawled, make sure you’re not accidentally blocking them via robots.txt, and make sure they’re linked to throughout your site.
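To check for accidental blocking, Python’s standard-library `urllib.robotparser` can test key URLs against your rules. Treat it as a first pass only: the standard parser matches rules by simple path prefix in file order and doesn’t implement Google’s `*`/`$` wildcards or longest-match precedence, so results can differ from Googlebot’s for complex files. The rules and URLs below are made-up examples.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; load a live file with set_url() and read() instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /products/
"""


def blocked_for_googlebot(robots_txt, urls):
    """Return the URLs that the given robots.txt rules disallow for Googlebot."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch("Googlebot", url)]


key_pages = [
    "https://www.example.com/shoes",
    "https://www.example.com/products/red-sneaker",
    "https://www.example.com/search?q=boots",
]
print(blocked_for_googlebot(ROBOTS_TXT, key_pages))
```

In this example, blocking internal search results is probably intended, but the `/products/` rule would also hide a key product page, which is exactly the kind of accident worth catching.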

We also recommend keeping your most important pages close to the home page, as greater page depth can correlate with less frequent crawls.
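Page depth is commonly measured as the fewest clicks needed to reach a page from the home page, which amounts to a breadth-first search over your internal links. A minimal sketch, assuming you already have a link graph from a crawl (the `links` mapping below is made up):

```python
from collections import deque


def click_depth(links, home="/"):
    """Breadth-first search: depth = fewest internal-link clicks from the home page.

    Pages missing from the result were not reachable from the home page at all.
    """
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


links = {
    "/": ["/shoes", "/boots"],
    "/shoes": ["/shoes?page=2"],
    "/shoes?page=2": ["/shoes/red-sneaker"],
    "/boots": ["/shoes"],
}
print(click_depth(links))
```

Here the red sneaker sits three clicks deep only because it is reachable solely through a paginated listing; a direct link from the /shoes category page would bring it to depth 2.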

Q: What are some crawling platforms you recommend?

KJ: We’re biased, but we think Botify’s crawler is pretty great! It’s called Botify Analytics, and it can crawl up to 50 million URLs in a single crawl at a rate of 250 URLs per second.

Q: How do I tell if Google is limiting the pages crawled/indexed? What size website is considered “enterprise”?

KJ: You’ll need to compare your crawl data with your log file data. The first step is to inventory your site to take an accounting of all your URLs, then by layering on log file data, you can see which of your URLs Google is hitting vs. missing.
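In code, that comparison boils down to set operations on two URL lists: the URLs your crawler found and the URLs Googlebot requested in your logs. A minimal sketch, with made-up URLs:

```python
def crawl_coverage(crawled_urls, googlebot_urls):
    """Compare a site crawl with the URLs Googlebot requested in the logs."""
    crawled = set(crawled_urls)
    visited = set(googlebot_urls)
    hit = crawled & visited
    return {
        "hit": sorted(hit),
        "missed": sorted(crawled - visited),         # in your structure, ignored by Google
        "outside_crawl": sorted(visited - crawled),  # requested by Google, not found by your crawl
        "coverage": len(hit) / len(crawled) if crawled else 0.0,
    }


report = crawl_coverage(
    ["/shoes", "/boots", "/sandals", "/shoes/red-sneaker"],
    ["/shoes", "/boots", "/shoes?sort=price"],
)
print(f"Googlebot reached {report['coverage']:.0%} of crawled pages")
print("Missed:", report["missed"])
```

In practice, both lists need the same normalization (protocol, host, trailing slashes, parameter order) before comparing, or the same page will show up as both hit and missed.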

While there’s no rigid number that qualifies a site as “enterprise,” we tend to define it as a site with hundreds of thousands or millions of URLs.

Google has said that “if a site has fewer than a few thousand URLs, most of the time it will be crawled efficiently,” so sites with more than a few thousand URLs, like enterprise websites, may start to experience crawl issues.

Q: What are KPIs for tech SEO?

KJ: We have a great article that answers that exact question! It’s called The Top 5 Technical SEO KPIs. An important thing to remember with the crawling-to-conversions framework is that technical changes, while they most directly produce technical improvements, usually also have a cascading effect that improves “traditional” SEO KPIs like rankings and traffic as well.

Q: Is there any benefit in SEOs learning JavaScript, HTML, or CSS to help communicate with other teams?

KJ: While SEO pros don’t necessarily need to learn how to program, it’s always beneficial to be able to speak your developer’s language.

An added benefit to knowing the basics of things like JavaScript is that you’ll be able to understand when it does and doesn’t impact SEO. See JavaScript 101 for SEOs for more info on that.

Q: When your company sells hundreds of thousands of products, how do you prioritize what to work on?

KJ: Not an easy feat, for sure! There are two main ways you can prioritize effectively though:

- Knowing what’s important to your business.

- Knowing what’s working and not working on your website currently.

It’s a good idea to make a list of pages that are important to your business (for example, your strategic products) and keep a close eye on those pages’ performance specifically.

In Botify, you can accomplish this via segments. It’s also a good idea to keep track of what’s working and not working on your website.

If you see, for example, that your deepest product pages are getting the lowest traffic, that’s a good indicator that you should focus on reducing the depth of those pages.
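A quick way to spot that pattern is to bucket pages by click depth and average their traffic. The sketch below assumes you have a depth per URL (from a crawl) and organic sessions per URL (from analytics); the URLs and numbers are invented for illustration.

```python
from collections import defaultdict
from statistics import mean


def traffic_by_depth(depth, sessions):
    """Average organic sessions per page at each click depth (missing data counts as 0)."""
    buckets = defaultdict(list)
    for url, d in depth.items():
        buckets[d].append(sessions.get(url, 0))
    return {d: mean(values) for d, values in sorted(buckets.items())}


depth = {"/": 0, "/shoes": 1, "/boots": 1, "/shoes/red-sneaker": 3, "/boots/hiker": 4}
sessions = {"/": 900, "/shoes": 300, "/boots": 100, "/shoes/red-sneaker": 5}
for d, avg in traffic_by_depth(depth, sessions).items():
    print(f"depth {d}: {avg:.1f} sessions per page")
```

A steep drop between depth levels is a signal to investigate, not proof that depth is the cause; segmenting by page type first keeps the comparison fair.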

Q: How do you review log files?

KJ: It can be difficult to wade through log files in their raw form, so we recommend a log file analyzer made specifically with SEOs in mind, like Botify’s.

Q: Didn’t Google recently come out and recommend against using robots.txt, recommending the meta noindex tag instead?

KJ: Google will no longer support the noindex directive in robots.txt files. Instead, it asks that you add the noindex robots meta tag to the page’s HTML or return a noindex X-Robots-Tag header in the HTTP response.

This does not mean that robots.txt itself is not valid, only that noindex will not work as a directive in the robots.txt file.
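To confirm which mechanism a page actually uses, you can check both places Google reads noindex from: the `X-Robots-Tag` HTTP response header and the robots meta tag in the HTML. This is a minimal standard-library sketch that works on headers and HTML you’ve already fetched. It deliberately skips edge cases such as the `none` directive, which also implies noindex.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        values = {name: (value or "") for name, value in attrs}
        if values.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(values.get("content", "").lower())


def is_noindexed(headers, html):
    """True if an X-Robots-Tag header or a robots meta tag contains noindex.

    `headers` is a plain dict of response headers; header names are compared
    case-insensitively. The "none" directive is not handled here.
    """
    header_values = [v.lower() for k, v in headers.items() if k.lower() == "x-robots-tag"]
    parser = RobotsMetaParser()
    parser.feed(html)
    return any("noindex" in d for d in header_values + parser.directives)


print(is_noindexed({"X-Robots-Tag": "noindex"}, "<html></html>"))
print(is_noindexed({}, '<head><meta name="robots" content="noindex, follow"></head>'))
```

Checking both sources matters because a page can carry noindex in one place while an audit only looks at the other.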

[Video Recap] An Enterprise SEO Framework: From Crawling to Conversions

Watch the video recap of the webinar presentation and Q&A session.

Or check out the SlideShare below.

Image Credits

In-Post Image: Botify
