What can I say? It’s been damned slow for search-related patent awards lately, which is actually quite odd.

I was joking recently with fellow patent hound Bill Slawski that Google figures we’re on to them… and has stopped filing stuff.

Just kidding!

But here are a few from the past few weeks.

Latest Google Patents of Interest

Selecting content using a location feature index

- Filed: December 20, 2017

- Awarded: February 18, 2020

Abstract

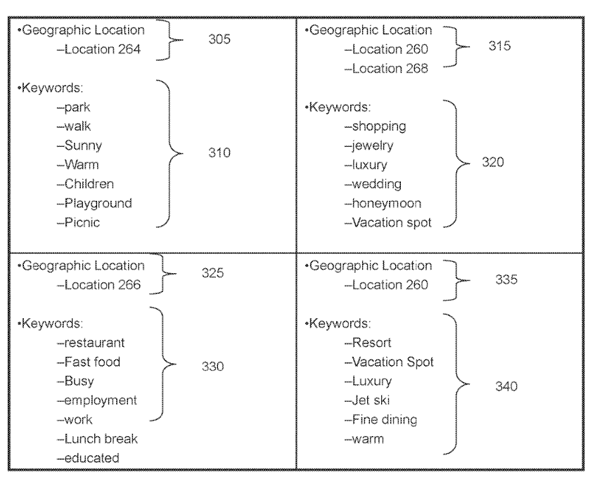

“Systems and methods of providing content for display on a computing device via a computer network using a location feature index are provided. A data processing system can receive a request for content from the computing device, and can determine a geographic location of the computing device associated with the request for content. The data processing system can identify a keyword indicating a non-geographic semantic feature of the determined geographic location. The identification can be based on the determined geographic location and from a location feature index that maps geographic areas to keywords that indicate non-geographic semantic features of the geographic areas. The data processing system can select, based on the keyword, a candidate content item for display on the computing device.”

Notable

“The data processing system can determine a geographic location of the computing device associated with a request for content. The data processing system can to identify a keyword indicating a non-geographic semantic feature of the determined geographic location. The data processing system can make the identification based on the determined geographic location and using a location feature index that maps geographic areas to keywords that indicate non-geographic semantic features of the geographic areas.”

“For example, the data processing system can determine that a geographic location has high end retail clothing stores. From a semantic analysis of this information, the data processing systems can identify keywords “diamonds” “luxury car” or “tropical beach vacation” and associate or map these keywords to the geographic area. The data processing system can receive a request for content to display on a computing device in the geographic area, e.g., the computing device is in the vicinity of the high end retail clothing stores. Responsive to the request, the data processing system can use the “tropical beach vacation” keyword to select a content item for a luxury tropical resort as a candidate for display on the computing device responsive to the request. In this example, the geographic area having high end clothing stores can be unrelated to any tropical location, although a user of a computing device (e.g., a smartphone) walking down a street having high end clothing stores may also be interested in high end luxury vacations. Thus, the data processing system can identify a group of keywords for one or more geographic areas based on semantic features or characteristics of those areas, and content providers can select content for a type of geographic area (e.g., high end retail areas) in general rather than focusing on a single area such as one set of latitude and longitude coordinates near a particular clothing store.”
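To make the idea concrete, here is a minimal sketch of how a location feature index might be queried. Everything in it is an assumption for illustration: the bounding-box `Area` type, the coordinates, the keyword lists, and the content items are all made up, and the patent does not say how areas are stored or matched.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Area:
    """A hypothetical geographic area, modeled as a simple bounding box."""
    name: str
    min_lat: float
    max_lat: float
    min_lon: float
    max_lon: float

    def contains(self, lat: float, lon: float) -> bool:
        return (self.min_lat <= lat <= self.max_lat
                and self.min_lon <= lon <= self.max_lon)


# Assumed index: areas mapped to non-geographic semantic keywords.
LOCATION_FEATURE_INDEX = {
    Area("high-end retail district", 40.0, 40.1, -74.1, -74.0): [
        "diamonds", "luxury car", "tropical beach vacation",
    ],
}

# Assumed inventory: content items keyed by the keyword they target.
CONTENT_ITEMS = {
    "tropical beach vacation": "Luxury tropical resort ad",
    "luxury car": "Sports car dealership ad",
}


def keywords_for_location(lat: float, lon: float) -> list[str]:
    """Return the keywords of the first indexed area containing the point."""
    for area, keywords in LOCATION_FEATURE_INDEX.items():
        if area.contains(lat, lon):
            return keywords
    return []


def select_candidates(lat: float, lon: float) -> list[str]:
    """Pick candidate content items whose keyword matches the location."""
    return [CONTENT_ITEMS[k] for k in keywords_for_location(lat, lon)
            if k in CONTENT_ITEMS]
```

The point of the sketch is that the advertiser targets a keyword tied to a type of area, not a specific set of coordinates, which matches the "high end retail areas in general" language in the quote.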

Proactive virtual assistant

- Filed: December 16, 2016

- Awarded: February 4, 2020

Abstract

“An assistant executing at, at least one processor, is described that determines content for a conversation with a user of a computing device and selects, based on the content and information associated with the user, a modality to signal initiating the conversation with the user. The assistant is further described that causes, in the modality, a signaling of the conversation with the user.”

Notable

“… a user interface from which a user can chat, speak, or otherwise communicate with a virtual, computational assistant (e.g., also referred to as “an intelligent personal assistant” or simply as an “assistant”) to cause the assistant to output useful information, respond to a user’s needs, or otherwise perform certain operations to help the user complete a variety of real-world or virtual tasks.”

“The assistant may proactively guide the user to interesting information, even in instances where there was no explicit user request for the information. For instance, in response to the assistant determining that a flight reservation for the user indicates that the flight is delayed, the assistant may notify the user without the user requesting that the assistant provide information about the flight status.”

“The assistant may generate a single notification representing multiple notifications and multiple types of user experiences, based on an importance of the notifications. For instance, the assistant may collapse multiple notifications into a single notification (e.g., “Jon, you have four alerts. Your package is on time, your flight is still on time, and your credit card was paid.”) for trivial notifications.”
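The collapsing behavior could look something like the sketch below. The `importance` value, the `0.5` threshold, and the summary wording are assumptions of mine; the patent only says that trivial notifications may be merged based on importance.

```python
from dataclasses import dataclass


@dataclass
class Notification:
    text: str
    importance: float  # assumed scale: 0.0 (trivial) to 1.0 (urgent)


def collapse(notifications: list[Notification], user_name: str,
             threshold: float = 0.5) -> list[str]:
    """Deliver important notifications individually and merge trivial ones.

    The threshold is a hypothetical cutoff, not a value from the patent.
    """
    important = [n.text for n in notifications if n.importance >= threshold]
    trivial = [n.text for n in notifications if n.importance < threshold]
    if len(trivial) > 1:
        summary = (f"{user_name}, you have {len(trivial)} alerts. "
                   + " ".join(trivial))
        return important + [summary]
    # Zero or one trivial notification: nothing to merge.
    return important + trivial
```

With this shape, a delayed flight would still reach the user as its own alert, while routine "on time" updates get rolled into one message.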

Contextually disambiguating queries

- Filed: March 20, 2017

- Awarded: February 18, 2020

Abstract

“Methods, systems, and apparatus, including computer programs encoded on a computer storage medium, for contextually disambiguating queries are disclosed. In an aspect, a method includes receiving an image being presented on a display of a computing device and a transcription of an utterance spoken by a user of the computing device, identifying a particular sub-image that is included in the image, and based on performing image recognition on the particular sub-image, determining one or more first labels that indicate a context of the particular sub-image. The method also includes, based on performing text recognition on a portion of the image other than the particular sub-image, determining one or more second labels that indicate the context of the particular sub-image, based on the transcription, the first labels, and the second labels, generating a search query, and providing, for output, the search query.”

Notable

“(…) a graphical interface being presented on a display of a computing device and a transcription of an utterance spoken by a user of the computing device; identifying two or more images that are included in the graphical interface; for each of the two or more images, determining a number of entities that are included in the image; based on the number of entities that are included in each of the two or more images and for each of the two or more images, determining an image confidence score that reflects a likelihood that the image is of primary interest to the user;”

“For example, a user may ask a question about a photograph that the user is viewing on the computing device, such as “What is this?” The computing device may detect the user’s utterance and capture a respective image of the computing device that the user is viewing.”

“The server can identify visual and textual content in the image. The server generates labels for the image that correspond to content of the image, such as locations, entities, names, types of animals, etc. The server can identify a particular sub-image in the image. The particular sub-image may be a photograph or drawing. In some aspects, the server identifies a portion of the particular sub-image that is likely of primary interest to the user, such as a historical landmark in the image. The server can perform image recognition on the particular sub-image to generate labels for the particular sub-image. The server can also generate labels for textual content in the image, such as comments that correspond to the particular sub-image, by performing text recognition on a portion of the image other than the particular sub-image. The server can generate a search query based on the received transcription and the generated labels. Further, the server may be configured to provide the search query for output to a search engine.”
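As a rough illustration of the last step, the sketch below builds a query from the transcription plus the two sets of labels. The patent does not describe how the query is actually assembled, so the approach here (dropping vague words such as “this” and appending de-duplicated labels) and the function name `generate_query` are purely my assumptions.

```python
import re

# Assumed set of vague reference words that the labels are meant to resolve.
DEICTIC_WORDS = {"this", "that", "it"}


def generate_query(transcription: str, image_labels: list[str],
                   text_labels: list[str]) -> str:
    """Combine the spoken words with labels from the image and nearby text."""
    # De-duplicate labels while preserving their original order.
    labels = list(dict.fromkeys(image_labels + text_labels))
    words = re.findall(r"[\w']+", transcription.lower())
    kept = [w for w in words if w not in DEICTIC_WORDS]
    return " ".join(kept + labels)
```

For example, `generate_query("What is this?", ["landmark", "tower"], ["Eiffel Tower", "tower"])` turns an ambiguous spoken question into a query that carries the on-screen context.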

Determining a quality score for a content item

- Filed: July 26, 2017

- Awarded: February 18, 2020

NOTE: This seems to be targeted at Google Plus and, as such, may have no bearing on Google’s current approaches.

Abstract

“Systems and methods for determining a user engagement level for a content item are provided. In some aspects, indicia of one or more user interactions with a content item are received. Each user interaction in the one or more user interactions has an associated time and an interaction type. A user engagement level for the content item is determined based on the one or more user interactions, the associated times, and the interaction types. The user engagement level for the content item is stored in association with the content item.”

Notable

“The subject technology generally relates to social networking services and, in particular, relates to determining a quality score for a content item in a social networking service.”

“A recentness score of the content item is determined based on the time stamp. An affinity score representing an affinity of the first user to the second user in the web-based application is also determined. A popularity score of the content item is determined based on user interactions with the content item. A quality score of the content item is generated based on the recentness score of the content item and a combination of the affinity score and the popularity score of the content item.”

“The recentness score of the content item may be determined based on a half-life decay function applied to a duration calculated as a current time minus a time corresponding to the time stamp. The half-life decay function calculates a number of half-life decays by dividing the calculated duration by a predetermined half-life duration. The time stamp associated with the content item corresponds to a most recent user interaction with the content item. The user interactions with the content item may comprise one or more of updating the content item, commenting on the content item, re-sharing the content item, or endorsing the comment. The affinity of the first user and the second user in the web-based application may be determined based on at least one of a number of and a quality of communication sessions between the first user and the second user.”
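Here is a minimal sketch of that scoring. The division of duration by half-life comes straight from the quote; raising 0.5 to that number is the usual way to apply a half-life decay, and multiplying the result by the sum of affinity and popularity is my own assumption, since the patent only says the scores are combined.

```python
def recentness_score(now: float, last_interaction: float,
                     half_life: float) -> float:
    """Half-life decay of the time since the most recent interaction.

    All times are in the same unit (for example, seconds).
    """
    duration = max(now - last_interaction, 0.0)
    decays = duration / half_life  # number of half-lives elapsed
    return 0.5 ** decays


def quality_score(now: float, last_interaction: float, affinity: float,
                  popularity: float, half_life: float = 86400.0) -> float:
    """Assumed combination: recentness * (affinity + popularity)."""
    recentness = recentness_score(now, last_interaction, half_life)
    return recentness * (affinity + popularity)
```

Under these assumptions, a post that last saw activity exactly one half-life ago scores half of what it would if someone had just commented on it, all else being equal.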

And there we have it for another week.

Drop back next week for more (unless Google decides to rain on our parade again).

Image Credits

Featured Image: Created by author, February 2020

In-Post Images: USPTO