Bill Slawski and I had an email discussion about a recent algorithm. Bill suggested a specific research paper and patent might be of interest to look at. What Bill suggested challenged me to think beyond Neural Matching and RankBrain.

Recent algorithm research focuses on understanding content and search queries. It may be useful to consider how that research might help explain certain ranking changes.

The Difference Between RankBrain and Neural Matching

These are official statements from Google on what RankBrain and Neural Matching are via tweets by Danny Sullivan (aka SearchLiaison).

- RankBrain helps Google better relate pages to concepts

… primarily works (kind of) to help us find synonyms for words written on a page…

- Neural matching helps Google better relate words to searches.

… primarily works to (kind of) to help us find synonyms of things you typed into the search box…

“kind of” because we already have (and long have had) synonym systems. These go beyond those and do things in different ways, too. But it’s an easy way (hopefully) to understand them.

For example, neural matching helps us understand that a search for “why does my TV look strange” is related to the concept of “the soap opera effect.”

We can then return pages about the soap opera effect, even if the exact words aren’t used…”

Here are the URLs for the tweets that describe what Neural Matching is:

- https://twitter.com/searchliaison/status/1108776359508099072

- https://twitter.com/dannysullivan/status/1108791313850204160

- https://twitter.com/dannysullivan/status/1108791555995758592

- https://twitter.com/searchliaison/status/1108776358996369408

What is CLSTM and is it Related to Neural Matching?

The paper Bill Slawski discussed with me was called, Contextual Long Short Term Memory (CLSTM) Models for Large Scale Natural Language Processing (NLP) Tasks.

The research paper PDF is here. The patent that Bill suggested was related to it is here.

That’s a research paper from 2016 and it’s important. Bill wasn’t suggesting that the paper and patent represented Neural Matching. But he said it looked related somehow.

The research paper uses an example of a machine that is trained to understand the context of the word “magic” from the following three sentences, to show what it does:

“1) Sir Ahmed Salman Rushdie is a British Indian novelist and essayist. He is said to combine magical realism with historical fiction.

2) Calvin Harris & HAIM combine their powers for a magical music video.

3) Herbs have enormous magical power, as they hold the earth’s energy within them.”

The research paper then explains how this method understands the context of the word “magic” in a sentence and a paragraph:

“One way in which the context can be captured succinctly is by using the topic of the text segment (e.g., topic of the sentence, paragraph).

If the context has the topic “literature”, the most likely next word should be “realism”. This observation motivated us to explore the use of topics of text segments to capture hierarchical and long-range context of text in LMs.

…We incorporate contextual features (namely, topics based on different segments of text) into the LSTM model, and call the resulting model Contextual LSTM (CLSTM).”
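The idea in that passage can be illustrated with a toy sketch. The Python below is not the CLSTM model from the paper, which feeds topic features into an LSTM. It is a simple counting model, with invented training data and hypothetical function names, that shows only how conditioning on a topic changes the most likely word after “magical.”

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (topic, previous word, next word).
# These triples are invented for illustration, not taken from the paper.
TRAINING = [
    ("literature", "magical", "realism"),
    ("literature", "magical", "realism"),
    ("literature", "magical", "prose"),
    ("music", "magical", "music"),
    ("music", "magical", "video"),
    ("music", "magical", "music"),
    ("herbalism", "magical", "power"),
]


def train(triples):
    """Count next words for each (topic, previous word) pair."""
    counts = defaultdict(Counter)
    for topic, prev, nxt in triples:
        counts[(topic, prev)][nxt] += 1
    return counts


def predict_next(counts, topic, prev):
    """Return the most frequent next word for this topic and previous word."""
    candidates = counts.get((topic, prev))
    if not candidates:
        return None  # nothing seen for this topic/word combination
    return candidates.most_common(1)[0][0]


model = train(TRAINING)
print(predict_next(model, "literature", "magical"))  # realism
print(predict_next(model, "music", "magical"))       # music
```

The same previous word yields a different prediction once the topic changes, which is the intuition the paper builds on with a far more capable neural model.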

This algorithm is described as being useful for:

Word Prediction

This is like predicting what your next typed word will be when typing on a mobile phone.

Next Sentence Selection

This relates to question answering tasks and to generating “Smart Replies,” the templated replies offered in text messages and emails.

Sentence Topic Prediction

The research paper describes this as part of a task for predicting the topic of a response to a user’s spoken query, in order to understand their intent.

That last bit sounds close to what Neural Matching is doing (“…helps Google better relate words to searches”).

Question Answering Algorithm

The following research paper from 2019 seems like a refinement of that algo:

A Hierarchical Attention Retrieval Model for Healthcare Question Answering

Overview

https://ai.google/research/pubs/pub47789

PDF

http://dmkd.cs.vt.edu/papers/WWW19.pdf

This is what it says in the overview:

“A majority of such queries might be non-factoid in nature, and hence, traditional keyword-based retrieval models do not work well for such cases.

Furthermore, in many scenarios, it might be desirable to get a short answer that sufficiently answers the query, instead of a long document with only a small amount of useful information.

In this paper, we propose a neural network model for ranking documents for question answering in the healthcare domain. The proposed model uses a deep attention mechanism at word, sentence, and document levels, for efficient retrieval for both factoid and non-factoid queries, on documents of varied lengths.

Specifically, the word-level cross-attention allows the model to identify words that might be most relevant for a query, and the hierarchical attention at sentence and document levels allows it to do effective retrieval on both long and short documents.”

It’s an interesting paper to consider.

Here is what the Healthcare Question Answering paper says:

“2.2 Neural Information Retrieval

With the success of deep neural networks in learning feature representation of text data, several neural ranking architectures have been proposed for text document search.

…while the model proposed in [22] uses the last state outputs of LSTM encoders as the query and document features. Both these models then use cosine similarity between query and document representations, to compute their relevance.

However, in majority of the cases in document retrieval, it is observed that the relevant text for a query is very short piece of text from the document. Hence, matching the pooled representation of the entire document with that of the query does not give very good results, as the representation also contains features from other irrelevant parts of the document.”
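The limitation described in that last paragraph can be seen in a toy comparison. The sketch below is an assumption-laden simplification: it uses plain word counts in place of the LSTM encodings the paper refers to, and the query and sentences are invented. It shows only that cosine similarity against a whole document is diluted by irrelevant text, while similarity against the one relevant sentence stays high.

```python
import math
from collections import Counter


def vectorize(text):
    """Bag-of-words vector (a stand-in for a learned representation)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Invented example data.
query = "soap opera effect"
sentences = [
    "modern televisions ship with many picture settings",
    "motion smoothing causes the soap opera effect",
    "wall mounting a television requires a sturdy bracket",
    "streaming apps are installed from the app store",
]

q_vec = vectorize(query)
whole_doc_score = cosine(q_vec, vectorize(" ".join(sentences)))
best_sentence_score = max(cosine(q_vec, vectorize(s)) for s in sentences)

print(f"whole document: {whole_doc_score:.3f}")
print(f"best sentence:  {best_sentence_score:.3f}")
```

The single relevant sentence scores well above the document as a whole, which is the motivation the paper gives for looking at smaller units of text.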

Then it mentions Deep Relevance Matching Models:

“To overcome the problems of document-level semantic-matching based IR models, several interaction-based IR models have been proposed recently. In [9], the authors propose Deep Relevance Matching Model (DRMM), that uses word count based interaction features between query and document words…”

And here it intriguingly mentions attention-based Neural Matching Models:

“…Other methods that use word-level interaction features are attention-based Neural Matching Model (aNMM) [42], that uses attention over word embeddings, and [36], that uses cosine or bilinear operation over Bi-LSTM features, to compute the interaction features.”

Attention Based Neural Matching

The citation of attention-based Neural Matching Model (aNMM) is to a non-Google research paper from 2018.

Does aNMM have anything to do with what Google calls Neural Matching?

aNMM: Ranking Short Answer Texts with Attention-Based Neural Matching Model

Overview

https://arxiv.org/abs/1801.01641

PDF

https://arxiv.org/pdf/1801.01641.pdf

Here is a synopsis of that paper:

“As an alternative to question answering methods based on feature engineering, deep learning approaches such as convolutional neural networks (CNNs) and Long Short-Term Memory Models (LSTMs) have recently been proposed for semantic matching of questions and answers.

…To achieve good results, however, these models have been combined with additional features such as word overlap or BM25 scores. Without this combination, these models perform significantly worse than methods based on linguistic feature engineering.

In this paper, we propose an attention based neural matching model for ranking short answer text.”

Long Form Ranking Better in 2019?

Jeff Coyle of MarketMuse stated that in the March Update he saw high flux in SERPs that contained long-form lists (e.g., Top 100 Movies).

That was interesting because some of the algorithms this article discusses are about understanding long articles and condensing those into answers. Specifically, that was similar to what the Healthcare Question Answering paper discussed (Read Content Strategy and Google March 2019 Update).

So when Jeff mentioned lots of flux in the SERPs associated with long-form lists, I immediately recalled these recently published research papers focused on extracting answers from long-form content.

Could the March 2019 update also include improvements to understanding long-form content? We can never know for sure because that’s not the level of information that Google reveals.

What Does Google Mean by Neural Matching?

In the Reddit AMA, Gary Illyes described RankBrain as a PR Sexy ranking component. The “PR Sexy” part of his description implies that the name was given to the technology for reasons having to do with being descriptive and catchy and less to do with what it actually does.

The term RankBrain does not communicate what the technology is or does. If we search around for a “RankBrain” patent, we’re not going to find it. That may be because, as Gary said, it’s just a PR Sexy name.

I searched around at the time of the official Neural Matching announcement for patents and research tied to Google with those explicit words in them and did not find any.

So… what I did was to use Danny’s description of it to find likely candidates. And it so happened that ten days earlier I had come across a likely candidate and had started writing an article about it.

Deep Relevance Ranking using Enhanced Document-Query Interactions

PDF

http://www2.aueb.gr/users/ion/docs/emnlp2018.pdf

Overview

https://ai.google/research/pubs/pub47324

And I wrote this about that algorithm:

“Although this algorithm research is relatively new, it improves on a revolutionary deep neural network method for accomplishing a task known as Document Relevance Ranking. This method is also known as Ad-hoc Retrieval.”

In order to understand that, I needed to first research Document Relevance Ranking (DRR), as well as Ad-hoc Retrieval, because the new research is built upon that.

Ad-hoc Retrieval

“Document relevance ranking, also known as ad-hoc retrieval… is the task of ranking documents from a large collection using the query and the text of each document only.”
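To make that definition concrete, here is a minimal sketch of ad-hoc retrieval in Python. It assumes a simple TF-IDF style score and an invented three-document collection. It is not the method from the paper or anything Google has described. It only shows the shape of the task: ranking documents from nothing but the query and each document’s text.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().split()


def rank(query, docs):
    """Rank document ids by a simple TF-IDF score (illustrative only)."""
    tokenized = {doc_id: Counter(tokenize(text)) for doc_id, text in docs.items()}
    n_docs = len(docs)
    scores = {}
    for doc_id, counts in tokenized.items():
        score = 0.0
        for term in tokenize(query):
            # Document frequency: how many documents contain the term.
            df = sum(1 for c in tokenized.values() if term in c)
            if df == 0:
                continue
            idf = math.log(1 + n_docs / df)  # rarer terms weigh more
            score += counts[term] * idf
        scores[doc_id] = score
    return sorted(scores, key=scores.get, reverse=True)


# Invented example collection.
DOCS = {
    "a": "the soap opera effect makes film look like video",
    "b": "how to mount a tv on the wall",
    "c": "opera tickets for the new season",
}

print(rank("soap opera effect", DOCS))  # ['a', 'c', 'b']
```

A scorer like this only rewards exact word overlap, which is the gap that the neural approaches discussed in this article try to close.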

That explains what Ad-hoc Retrieval is, but it does not explain what Deep Relevance Ranking Using Enhanced Document-Query Interactions is.

Connection to Synonyms

Deep Relevance Ranking Using Enhanced Document-Query Interactions is connected to synonyms, a feature of Neural Matching that Danny Sullivan described as being like a super-synonym system.

Here’s what the research paper describes:

“In the interaction based paradigm, explicit encodings between pairs of queries and documents are induced. This allows direct modeling of exact- or near-matching terms (e.g., synonyms), which is crucial for relevance ranking.”
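A rough way to picture the interaction-based approach is a grid of similarity scores between every query word and every document word. The sketch below is a simplified illustration, not the paper’s model: the two-dimensional word vectors are hand-picked and hypothetical, and taking the best match per query word is just one simple way to summarize the grid.

```python
import math

# Hypothetical 2-d word vectors, hand-picked for illustration only.
EMBEDDINGS = {
    "tv": (1.0, 0.0),
    "television": (0.9, 0.1),
    "strange": (0.0, 1.0),
    "odd": (0.1, 0.9),
    "recipe": (-0.7, -0.7),
}


def cosine(u, v):
    """Cosine similarity between two 2-d vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    return dot / norm if norm else 0.0


def interaction_matrix(query_terms, doc_terms):
    """One row per query term, one column per document term."""
    return [
        [cosine(EMBEDDINGS[q], EMBEDDINGS[d]) for d in doc_terms]
        for q in query_terms
    ]


def best_matches(query_terms, doc_terms):
    """Strongest document match for each query term."""
    return [max(row) for row in interaction_matrix(query_terms, doc_terms)]


query = ["tv", "strange"]
related = best_matches(query, ["television", "odd"])
unrelated = best_matches(query, ["recipe"])

print([round(s, 3) for s in related])
print([round(s, 3) for s in unrelated])
```

The related document shares no exact words with the query, yet each query word finds a near match, which is the kind of signal the quoted passage describes.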

What that appears to be discussing is understanding search queries.

Now compare that with how Danny described Neural Matching:

“Neural matching is an AI-based system Google began using in 2018 primarily to understand how words are related to concepts. It’s like a super-synonym system. Synonyms are words that are closely related to other words…”

The Secret of Neural Matching

Neural Matching may well be more than just one algorithm. It may be worth considering that Neural Matching comprises a variety of algorithms and that the term is a name given to a group of algorithms working together.

Takeaways

Don’t Synonym Spam

I cringed a little when Danny mentioned synonyms because I imagined that some SEOs might be encouraged to begin seeding their pages with synonyms. I believe it’s important to note that Danny said “like” a super-synonym system.

So don’t take that to mean seeding a page with synonyms. The patents and research papers above are far more sophisticated than simple-minded synonym spamming.

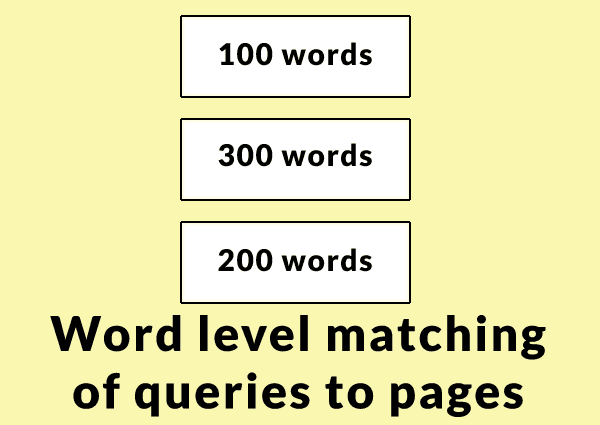

Focus on Words, Sentences and Paragraphs

Another takeaway from the patent and research papers is that they describe ways to assign topical meaning at three different levels of a web page: words, sentences and paragraphs. Natural writers can sometimes write fast and communicate a core meaning that sticks to the topic. That talent comes with extensive experience.

Not everyone has that talent or experience. So for the rest of us, including myself, I believe it pays to carefully plan and write content and learn to be focused.

Long-form versus Short-form Content

I’m not saying that Google prefers long-form content. I am only pointing out that many of the research papers discussed in this article are focused on better understanding long-form content by understanding the topics of its words, sentences and paragraphs.

So if you experience a ranking drop, it may be useful to review the winners and the losers and see if there is evidence of flux that might be related to long-form or short-form content.

The Google Dance

Google used to update its search engine once a month with new data and sometimes new algorithms. Those monthly ranking changes were what we called the Google Dance.

Google now refreshes its index on a daily basis (what’s known as a rolling update). Several times a year Google updates the algorithms in a way that usually represents an improvement to how Google understands search queries and content. These research papers are typical of those kinds of improvements. So it’s important to know about them so as to not be fooled by red herrings and implausible hypotheses.

Images by Shutterstock, Modified by Author

Screenshots by Author, Modified by Author