In 2012, I had a large client that was ranked in the top position for most of the terms that mattered to them.

And then, overnight, their ranking and traffic dropped like a rock.

Have you ever experienced this? If so, you know exactly how it feels.

The initial shock is followed by the nervous feeling in the pit of your stomach as you contemplate how it will affect your business or how you'll explain it to your client.

After that comes the frenzied analysis to figure out what caused the drop, and hopefully fix it before it causes too much damage.

In this case, we didn’t need Sherlock Holmes to figure out what happened. My client had insisted that we do whatever it took to get them to rank quickly. The timeframe they wanted was not months, but weeks, in a fairly competitive niche.

If you’ve been involved in SEO for a while, you probably already know what’s about to go down here.

The only way to rank within the timeline they wanted was to use tactics that violated Google's Webmaster Guidelines. While this was risky, it often worked, at least until Google released its Penguin update in April 2012.

Despite my clearly explaining the risks, my client demanded that we do whatever it took. So that’s what we did.

When their ranking predictably tanked, we weren't surprised. Neither was my client. And they weren't upset, because we had explained the rules and the consequences of breaking them.

They weren’t happy that they had to start over, but the profits they reaped leading up to that more than made up for it. It was a classic risk vs. reward scenario.

Unfortunately, most problems aren't this easy to diagnose, especially when you haven't knowingly violated any of Google's ever-growing list of rules.

In this article, you’ll learn some ways you can diagnose and analyze a drop in ranking and traffic.

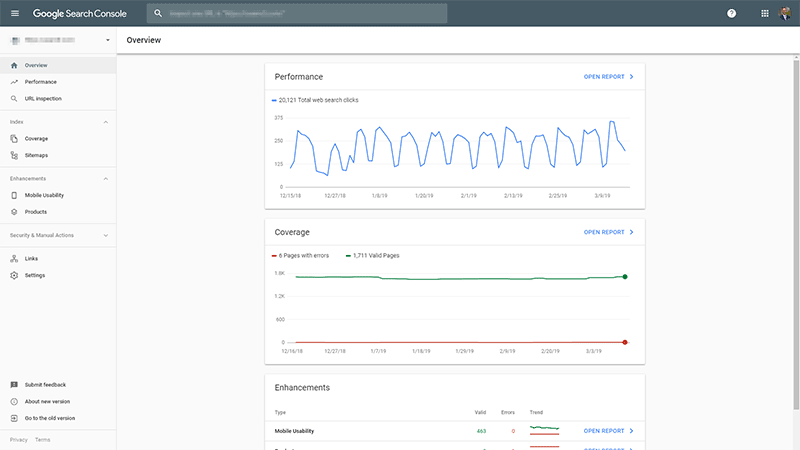

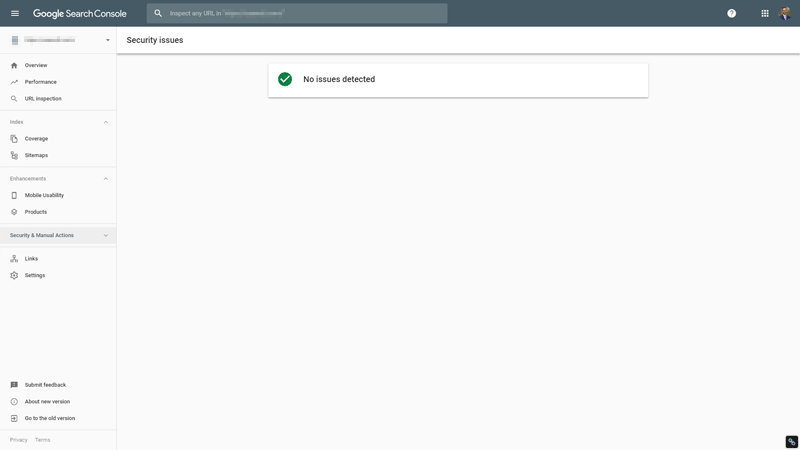

1. Check Google Search Console

This is the first place you should look. Google Search Console provides a wealth of information on a wide variety of issues.

Google Search Console will send email notifications for many serious issues, including manual actions, crawl errors, and schema problems, to name just a few. And you can analyze a staggering amount of data to identify other less obvious but just as severe issues.
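One practical way to work with that data is to export the Performance report for a period before the drop and a period after it, then compare clicks page by page. The sketch below assumes you have already loaded each export into a dict mapping page URL to clicks; the function name and the sample figures are hypothetical, not part of Search Console itself.

```python
from typing import Dict, List, Tuple


def rank_click_losses(
    before: Dict[str, int], after: Dict[str, int], min_loss: int = 1
) -> List[Tuple[str, int, int, int]]:
    """Return (page, clicks_before, clicks_after, loss), largest loss first.

    Pages missing from the "after" data are treated as having zero clicks,
    which is often exactly the kind of page you want to look at first.
    """
    rows = []
    for page, clicks_before in before.items():
        clicks_after = after.get(page, 0)
        loss = clicks_before - clicks_after
        if loss >= min_loss:
            rows.append((page, clicks_before, clicks_after, loss))
    # Sort by largest loss; break ties alphabetically so output is stable.
    rows.sort(key=lambda row: (-row[3], row[0]))
    return rows


if __name__ == "__main__":
    # Hypothetical numbers standing in for two Performance report exports.
    before = {"/pricing": 1200, "/blog/guide": 800, "/about": 50}
    after = {"/pricing": 300, "/blog/guide": 790, "/about": 60}
    for page, b, a, loss in rank_click_losses(before, after):
        print(f"{page}: {b} -> {a} (-{loss})")
```

Pages that gained clicks are left out on purpose, so the list stays focused on where the damage is.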

The Overview section is a good place to start because it shows you the big picture and gives you some direction before you get more granular.

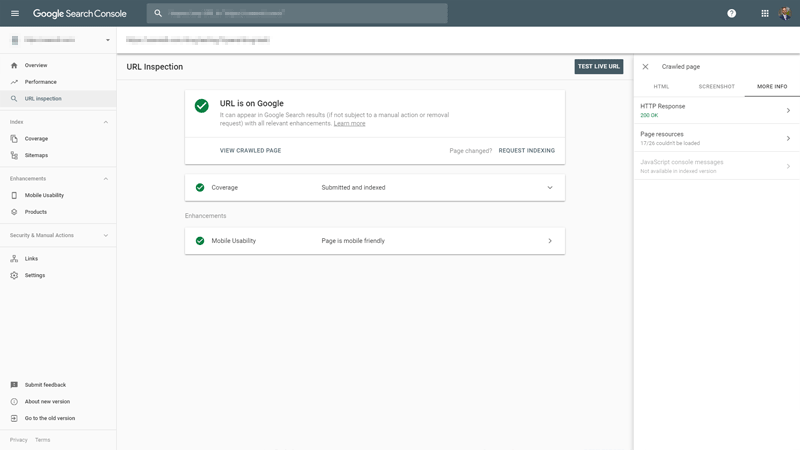

If you want to analyze specific pages, URL Inspection is a great tool because it lets you look at any page through the beady little eyes of Googlebot.

This can come in especially handy when problems on a page don’t cause an obvious issue on the front end but do cause issues for Google’s bots.

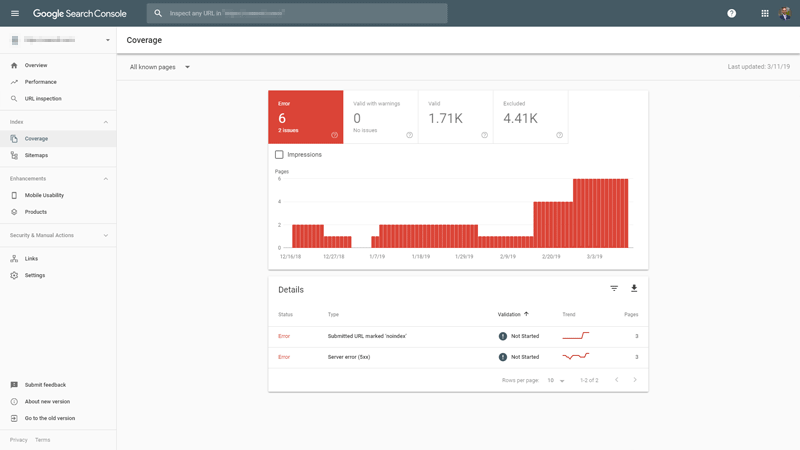

The Coverage report is great for identifying issues that can affect which pages are included in Google's index, like server errors or pages that have been submitted but contain the noindex meta tag.

Generally, if you receive a manual penalty, you'll know exactly why. It's most often the result of a violation of Google's Webmaster Guidelines, such as buying links or creating spammy, low-quality content.

However, it can sometimes be as simple as an innocent mistake in configuring schema markup. You can check the Manual Actions section for this information. GSC will also send you an email notification of any manual penalties.

The Security section will identify any issues with viruses or malware on your website that Google knows about.

It’s important to point out that just because there is no notice here, it doesn’t mean that there is no issue – it just means that Google isn’t aware of it yet.

2. Check for Noindex & Nofollow Meta Tags & Robots.txt Errors

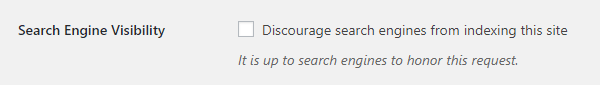

This issue is most common when moving a new website from a development environment to a live environment, but it can happen anytime.

It’s not uncommon for someone to click the wrong setting in WordPress or a plugin, causing one or more pages to be deindexed.

Review the Search Engine Visibility setting at the bottom of the Reading section in WordPress, and make sure the "Discourage search engines from indexing this site" box is left unchecked.

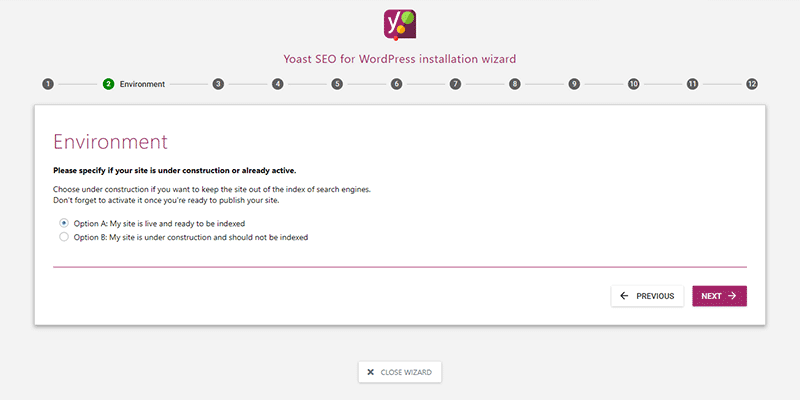

Review the index setting for any SEO-related plugins you have installed. (Yoast, in this example.) This is found in the installation wizard.

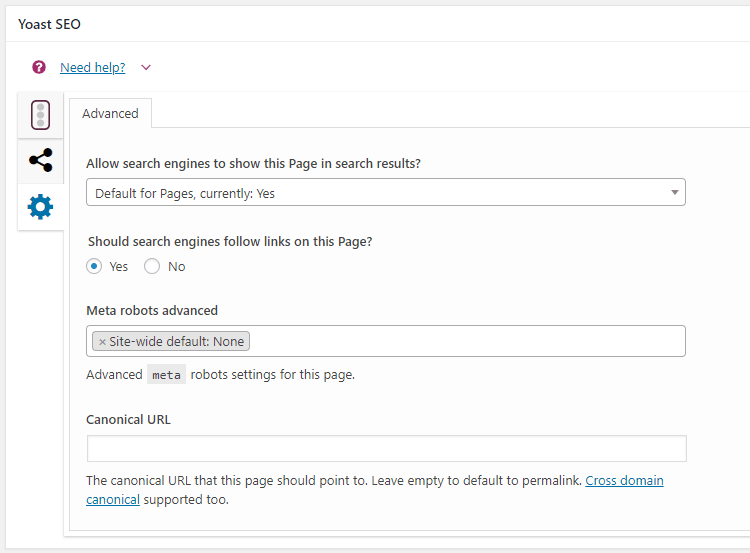

Review page-level settings related to indexation. (Yoast again, in this example.) This is typically found below the editing area of each page.

You can prioritize the pages to review by starting with the ones that have lost ranking and traffic, but it’s important to review all pages to help ensure the problem doesn’t become worse.
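Settings screens only tell you what should happen; it can also help to check what each page actually serves. Google also honors a noindex sent in the X-Robots-Tag HTTP header, which won't appear in the page source. The sketch below is a minimal, offline check that assumes you have already fetched a page's HTML and response headers (for example with `urllib.request` or a crawler export); `find_noindex` is a hypothetical helper, not part of any plugin.

```python
from html.parser import HTMLParser
from typing import Dict, List


class _RobotsMetaParser(HTMLParser):
    """Collects the content of <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases attribute names; values may be None.
        attr_map = {name: (value or "") for name, value in attrs}
        if attr_map.get("name", "").lower() in ("robots", "googlebot"):
            self.directives.append(attr_map.get("content", "").lower())


def find_noindex(html: str, headers: Dict[str, str]) -> List[str]:
    """Return human-readable reasons the page asks not to be indexed (empty if none)."""
    reasons = []
    parser = _RobotsMetaParser()
    parser.feed(html)
    parser.close()
    for content in parser.directives:
        tokens = [token.strip() for token in content.split(",")]
        # "none" is shorthand for "noindex, nofollow".
        if "noindex" in tokens or "none" in tokens:
            reasons.append(f"meta robots tag: {content}")
    # Header names are case-insensitive; this is a simple substring check on the value.
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            reasons.append(f"X-Robots-Tag header: {value}")
    return reasons


if __name__ == "__main__":
    page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
    print(find_noindex(page, {"Content-Type": "text/html"}))
```

Running this over the pages that lost traffic first, then over the rest of the site, mirrors the prioritization described above.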

It's equally important to check your robots.txt file to make sure it hasn't been edited in a way that blocks search engine bots. A properly configured robots.txt file might look like this:

    User-agent: *
    Disallow: /cgi-bin/
    Disallow: /tmp/
    Disallow: /wp-admin/

On the other hand, an improperly configured robots.txt file might look like this:

    User-agent: *
    Disallow: /

Google offers a handy tool in Google Search Console to check your robots.txt file for errors.
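If you want a quick local sanity check as well, Python's standard-library `urllib.robotparser` can tell you whether a given URL is blocked by a robots.txt file. This is a sketch using the two example files above and hypothetical URLs on `example.com`; note that `robotparser` follows the original robots.txt conventions and does not replicate every detail of Google's matching (such as wildcards and longest-match precedence), so Google's own tester remains the authority.

```python
from urllib.robotparser import RobotFileParser

GOOD_ROBOTS = """\
User-agent: *
Disallow: /cgi-bin/
Disallow: /tmp/
Disallow: /wp-admin/
"""

BAD_ROBOTS = """\
User-agent: *
Disallow: /
"""


def blocked_urls(robots_txt: str, urls, user_agent: str = "Googlebot"):
    """Return the subset of urls that robots_txt disallows for user_agent."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    important = [
        "https://www.example.com/",
        "https://www.example.com/services/",
        "https://www.example.com/wp-admin/options.php",
    ]
    print(blocked_urls(GOOD_ROBOTS, important))  # only the /wp-admin/ URL
    print(blocked_urls(BAD_ROBOTS, important))   # every URL
```

Feeding it a list of the pages that matter most to you makes an accidental `Disallow: /` stand out immediately.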

3. Determine If Your Website Has Been Hacked

When most people think of hacking, they likely imagine nefarious characters in search of juicy data they can use for identity theft.

As a result, you might think you’re safe from hacking attempts because you don’t store that type of data. Unfortunately, that isn’t the case.

Hackers are opportunists playing a numbers game, so your website is simply another vector from which they can exploit other vulnerable people – your visitors.

By hacking your website, they may be able to spread malware and viruses to even more computers, where they might find the type of data they're looking for.

But the impact of hacking doesn’t end there.

We all know the importance of inbound links from other websites, and rather than doing the hard work to earn those links, some people will hack into other websites and embed their links there.

Typically, they will take additional measures to hide these links by placing them in old posts or even by using CSS to disguise them.
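Links hidden with inline CSS can be caught with a simple pass over a page's HTML. The sketch below is a heuristic, not a complete detector: it only looks at inline `style` attributes (on the link itself or any ancestor) for `display: none` or `visibility: hidden`, so links hidden by rules in an external stylesheet will slip through. `find_hidden_links` is a hypothetical helper name.

```python
from html.parser import HTMLParser
from typing import List, Tuple

# Inline-style fragments that hide content (checked after removing spaces).
# Heuristic only: links hidden by rules in an external stylesheet are missed.
HIDING_PATTERNS = ("display:none", "visibility:hidden")

# Elements that never get a closing tag, so they must not go on the stack.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class HiddenLinkFinder(HTMLParser):
    """Collects hrefs of <a> tags hidden by their own or an ancestor's inline style."""

    def __init__(self) -> None:
        super().__init__()
        self._stack: List[Tuple[str, bool]] = []  # (tag, hidden) for open elements
        self.hidden_links: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        style = (attr_map.get("style") or "").replace(" ", "").lower()
        hidden = any(pattern in style for pattern in HIDING_PATTERNS)
        inside_hidden = any(flag for _, flag in self._stack)
        if tag == "a" and attr_map.get("href") and (hidden or inside_hidden):
            self.hidden_links.append(attr_map["href"])
        if tag not in VOID_TAGS:
            self._stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the most recent matching open tag; tolerates unclosed <p>, <li>, etc.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                break


def find_hidden_links(html: str) -> List[str]:
    finder = HiddenLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.hidden_links
```

Old posts are worth including in the scan, since that is where injected links are often tucked away.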

Even worse, a hacker may single out your website for destruction, deleting your content or, worse still, filling it with shady outbound links, garbage content, viruses, and malware.

This can cause search engines to completely remove a website from their index.

Taking appropriate steps to secure your website is the first and most powerful action you can take.

Most hackers are looking for easy targets, so if you force them to work harder, they will usually just move on to the next target. You should also ensure that you have automated systems in place to screen for viruses and malware.

Most hosting companies offer this, and it is often included at no charge with professional-grade web hosting. Even then, it’s important to scan your website from time to time to review any outbound links.

Screaming Frog makes it simple to do this, outputting the results as a CSV file that you can quickly browse to identify anything that looks out of place.
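Browsing the export by eye works, but on larger sites a short script can tally outbound links by domain and surface anything you don't recognize. This sketch assumes a CSV of outbound links with a `Destination` column holding the link target; that column name is an assumption about the export format, so adjust `destination_column` to match the file you actually get. The allow lists and sample domains are hypothetical.

```python
import csv
import io
from collections import Counter
from typing import Dict, Iterable
from urllib.parse import urlparse


def _normalize(host: str) -> str:
    host = host.lower()
    return host[4:] if host.startswith("www.") else host


def unexpected_outbound_domains(
    csv_text: str,
    own_domains: Iterable[str],
    known_domains: Iterable[str] = (),
    destination_column: str = "Destination",  # assumed header name; adjust to your export
) -> Dict[str, int]:
    """Count outbound links per external domain that isn't on either allow list.

    Subdomains are compared exactly, so cdn.example.com must be listed separately.
    Relative links have no hostname and are skipped.
    """
    allowed = {_normalize(d) for d in own_domains} | {_normalize(d) for d in known_domains}
    counts: Counter = Counter()
    for row in csv.DictReader(io.StringIO(csv_text)):
        url = row.get(destination_column) or ""
        host = _normalize(urlparse(url).hostname or "")
        if host and host not in allowed:
            counts[host] += 1
    # Most frequent unexpected domains first.
    return dict(counts.most_common())
```

To use it on a real export, read the file's contents into a string and pass your own domain plus any sites you link to on purpose; whatever remains is the short list worth investigating.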

If your ranking drop was related to being hacked, it should be obvious, because even if you don't identify it yourself, you will typically receive an email notification from Google Search Console.

The first step is to immediately secure your website and clean up the damage. Once you are completely sure that everything has been resolved, you can submit a reconsideration request through Google Search Console.

4. Analyze Inbound Links

This factor is pretty straightforward. If inbound links caused a drop in your ranking and traffic, it will generally come down to one of three issues.

It’s either:

- A manual action caused by link building tactics that violate Google’s Webmaster Guidelines.

- A devaluation or loss of links.

- Or an increase in links to one or more competitors’ websites.

A manual action will result in a notification from Google Search Console. If this is your problem, it’s a simple matter of removing or disavowing the links and then submitting a reconsideration request.
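Google's disavow tool accepts a plain text file with one entry per line: either a full URL or a `domain:` line covering every link from that site, with lines starting with `#` treated as comments. The sketch below just assembles that text from a list you have already decided on; deciding which links belong on the list is the judgment call no script can make for you. `build_disavow_file` and the sample domains are hypothetical.

```python
from typing import Iterable


def build_disavow_file(entries: Iterable[str], note: str = "") -> str:
    """Build disavow-file text: one URL or "domain:" line per entry, duplicates removed.

    Entries containing "://" are kept as single URLs; anything else is treated
    as a hostname and written as a "domain:" line.
    """
    lines = []
    if note:
        lines.append(f"# {note}")  # lines starting with # are comments
    seen = set()
    for raw in entries:
        entry = raw.strip()
        if not entry:
            continue
        if "://" in entry:
            line = entry  # disavow one specific URL
        else:
            host = entry.lower()
            if host.startswith("domain:"):
                host = host[len("domain:"):]
            line = f"domain:{host}"  # disavow every link from this site
        if line not in seen:
            seen.add(line)
            lines.append(line)
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_disavow_file(
        ["spammy.example", "https://blog.example.org/paid-post", "spammy.example"],
        note="Paid links identified during link audit",
    ))
```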

In most cases, doing so won’t immediately improve your ranking because the links had artificially boosted your ranking before your website was penalized.

You will still need to build new, quality links that meet Google’s Webmaster Guidelines before you can expect to see any improvement.

A devaluation simply means that Google now assigns less value to those particular links. This could be a broad algorithmic devaluation, as we see with footer links, or they could be devalued because of the actions of the website owners.

For example, a website known to buy and/or sell links could be penalized, making the links from that site less valuable, or even worthless.

An increase in links to one or more competitors’ websites makes them look more authoritative than your website in the eyes of Google.

There's really only one way to solve this, and that is to build more links to your website. The key is to ensure the links you build meet Google's Webmaster Guidelines; otherwise, you risk eventually being penalized and starting over.

5. Analyze Content

Google’s algorithms are constantly changing. I remember a time when you could churn out a bunch of low-quality, 300-word pages and dominate the search results.

Today, that generally won't even get you onto the first page for moderately competitive topics, where we typically see 1,000+ word pages holding the top positions.

But it goes much deeper than that.

You’ll need to evaluate what the competitors who now outrank you are doing differently with their content.

Word count is only one factor, and on its own, doesn’t mean much. In fact, rather than focusing on word count, you should determine whether your content is comprehensive.

In other words, does it more thoroughly answer all of the common questions someone may have on the topic compared to the content on competitors’ websites?

Is yours well-written, original, and useful?

Don’t answer this based on feelings – use one of the reading difficulty tests so that you’re working from quantifiable data.

Yoast's SEO plugin scores this automatically as you write and edit right within WordPress. SEMrush offers a really cool plugin that does the same within Google Docs, but there are a number of other free tools available online.
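To see what such a test actually measures, here is a minimal sketch of one widely used formula, the Flesch Reading Ease score: 206.835 − 1.015 × (words ÷ sentences) − 84.6 × (syllables ÷ words). Higher scores mean easier text. The syllable counter is a rough heuristic of my own choosing, so expect its numbers to differ somewhat from those reported by dedicated tools.

```python
import re


def count_syllables(word: str) -> int:
    """Rough English syllable estimate: count vowel groups, drop a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease using naive sentence and word splitting."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return (
        206.835
        - 1.015 * (len(words) / len(sentences))
        - 84.6 * (syllables / len(words))
    )


if __name__ == "__main__":
    print(round(flesch_reading_ease("The cat sat on the mat."), 1))
    print(round(flesch_reading_ease(
        "Comprehensive documentation necessitates considerable organizational deliberation."
    ), 1))
```

Scoring your page and the pages that now outrank you with the same function at least gives you a like-for-like comparison.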

Is it structured for easy reading, with subheadings, lists, and images?

People don’t generally read content online, but instead, they scan it. Breaking it up into manageable chunks makes it easier for visitors to scan, making them more likely to stick around long enough to find the info they’re looking for.
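A rough way to compare scannability is to count the structural elements on your page and on the pages outranking you. This sketch tallies subheadings, lists, images, and tables, plus a visible word count that skips script and style content; the choice of tracked tags is an assumption, and the counts are only signals, not a measure of quality. `audit` is a hypothetical helper.

```python
from collections import Counter
from html.parser import HTMLParser


class StructureAudit(HTMLParser):
    """Tallies elements that make a page easier to scan, plus a visible word count."""

    TRACKED = {"h2", "h3", "h4", "ul", "ol", "img", "table"}
    SKIP_TEXT = {"script", "style", "noscript"}

    def __init__(self) -> None:
        super().__init__()
        self.counts: Counter = Counter()
        self.words = 0
        self._skip_depth = 0  # > 0 while inside script/style/noscript

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self.counts[tag] += 1
        if tag in self.SKIP_TEXT:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP_TEXT and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.words += len(data.split())


def audit(html: str) -> dict:
    parser = StructureAudit()
    parser.feed(html)
    parser.close()
    return {"words": parser.words, **parser.counts}


if __name__ == "__main__":
    sample = "<h2>Intro</h2><p>Short text here.</p><ul><li>One</li><li>Two</li></ul>"
    print(audit(sample))
```

A page with thousands of words and no subheadings or lists is a candidate for restructuring, whatever its word count.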

This is something that takes a bit of grunt work to properly analyze. Tools like SEMrush are incredibly powerful and can provide a lot of insight into many of these factors, but some factors still require a human touch.

You need to consider the user intent. Are you making it easier for them to quickly find what they need? That should be your ultimate goal.

More Resources:

- 20 Reasons Why Your Search Ranking & Traffic Might Drop

- Google Rankings Dropped? How to Evaluate with Raters Guidelines

- More Things to Do After a Rankings Decline

Image Credits

All screenshots taken by author, March 2019