“Hi, nice to meet you, my name is ZettaByte!”

If you are from the generation familiar with the terms MegaByte, GigaByte, and TeraByte, prepare yourself for a whole new vocabulary, the likes of which your predecessors could probably not have imagined. Soon, terms like PetaByte, ExaByte, and ZettaByte will be as common as those, with benefits and difficulties to match their magnitude.

IBM claims that 90% of the world’s data has been accumulated in the last 2 years. If that is true, math-savvy readers can imagine how steep the exponential growth curve of data must be, and how enormous what is coming will be.

What is a ZettaByte, you ask? Frankly, it is something out of this world. But part of me is still the old-fashioned physicist, so even I badly need to picture a ZettaByte in units I can see. I tried to imagine what would happen if someone accidentally pushed the Print button and 1 ZettaByte of data were printed on paper. If an average 500-page book, with some photos and diagrams in it, contains about 10 MegaBytes of data, weighs 1 pound, and is 2 inches thick, then 1 ZettaByte is about 10¹⁴ such books, weighing about 10¹⁴ pounds, or roughly 5 × 10¹⁰ tonnes. Still, quoting the number does not come close to illustrating this monumental sum, so I made a list below to have some fun with it.

A single ZettaByte's worth of books:

- would fill 10 billion trucks or 500,000 aircraft carriers

- if equally distributed, would give 10,000 books to each person living on the planet today

- if stacked one atop another, would reach to the sun and back about 17 times

- would require three times the number of trees in the world just to make the paper
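To check these figures, here is a back-of-the-envelope calculation in Python. It is only a sketch built on the assumptions stated above (10 MegaBytes and 1 pound per book) plus two of my own, marked in the comments: decimal units (1 ZettaByte = 10²¹ bytes) and a world population of roughly 7.1 billion for 2013. It gives 10¹⁴ books, about 4.5 × 10¹⁰ tonnes, and on the order of 10,000 books per person.

```python
# Back-of-the-envelope check of the "printed ZettaByte" figures.
# From the text: 10 MegaBytes and 1 pound per book.
# Assumptions (mine): decimal SI units and an approximate 2013 population.

ZETTABYTE = 10**21            # bytes, decimal SI prefix (assumption)
BYTES_PER_BOOK = 10 * 10**6   # 10 MegaBytes per 500-page book
POUNDS_PER_BOOK = 1.0
KG_PER_POUND = 0.45359237     # exact by definition
WORLD_POPULATION = 7.1e9      # assumption: rough 2013 world population

books = ZETTABYTE // BYTES_PER_BOOK          # number of equivalent books
pounds = books * POUNDS_PER_BOOK             # total weight in pounds
tonnes = pounds * KG_PER_POUND / 1000        # metric tonnes
books_per_person = books / WORLD_POPULATION  # if shared out equally

print(f"books:            {books:.1e}")
print(f"weight (lb):      {pounds:.1e}")
print(f"weight (tonnes):  {tonnes:.1e}")
print(f"books per person: {books_per_person:,.0f}")
```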

Now that we have “visualized” what a ZettaByte means, let’s turn our attention to data accumulation. The situation here is so extreme that one no longer needs to be accurate to estimate “when” we will have 1,000 ZettaBytes of information piled up. If this milestone is not reached in 2013, it will surely be in the next few years. We may even be there already; no one can tell for sure. A reliable source tells me the World Wide Web (WWW) already contains 1 ZettaByte of information. Also contributing to the total, as we enter 2013, are annual global IP traffic (0.8 ZettaByte) and annual Internet video (0.3 ZettaByte).

Now for where the data comes from. Here is a list of mechanisms that generate big data:

- Data from scientific measurements and experiments (astronomy, physics, genetics, etc.)

- Peer-to-peer communication (text messaging, chat lines, digital phone calls)

- Broadcasting (news, blogs)

- Social Networking (Facebook, Twitter)

- Authorship (digital books, magazines, Web pages, images, videos)

- Administrative (enterprise or government documents, legal and financial records)

- Business (e-commerce, stock markets, business intelligence, marketing, advertising)

- Other

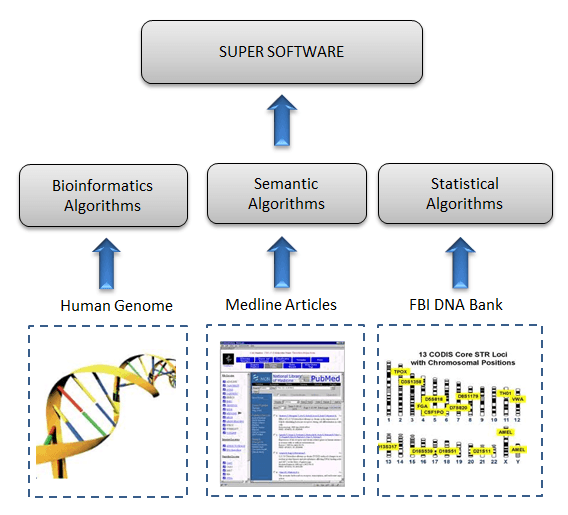

“Big Data” is not just a data silo; rather, the term applies when all the relevant parts of the data are used together for a specific purpose. It may seem that there should be clear boundaries between data segments that belong to specific objectives, but that very notion is misleading and can undermine potential opportunities. For example, scientists working on human genome data might improve their analysis if they could take the entire body of publications on Medline (or PubMed) and analyze it in conjunction with the genome data. However, this requires natural language processing (semantic) technology combined with bioinformatics algorithms, which is an unusual coupling at best.

Along these lines, two seemingly different data segments in different formats, when combined, actually define a new “big data” set. Now add a third data segment, say the FBI’s DNA bank, or how about a database of social genetic information such as geneology.com? As you can see, the complications and opportunities can go on and on. This is where the mystery and the excitement of big data reside. Welcome to our world.
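To make “combining segments” a little more concrete, here is a minimal Python sketch that links a structured segment (variant records) to an unstructured one (free-text abstracts). Everything in it is hypothetical: the records, the placeholder gene symbols GENE1 to GENE4, and the function name `link_by_gene_mention`. Plain whole-word matching stands in for the natural language processing a real system would need.

```python
import re
from collections import defaultdict

# Hypothetical toy data. One structured segment (variant records, as a genome
# pipeline might produce) and one unstructured segment (free-text abstracts,
# standing in for literature such as Medline). All contents are made up.
variants = [
    {"sample": "S1", "gene": "GENE1", "variant": "c.100A>G"},
    {"sample": "S2", "gene": "GENE2", "variant": "c.250del"},
    {"sample": "S3", "gene": "GENE3", "variant": "c.75C>T"},
]
abstracts = {
    "doc-1": "We report reduced expression of GENE1 in patient cohorts.",
    "doc-2": "GENE2 and GENE1 interact in a signalling pathway.",
    "doc-3": "No association was found for GENE4.",
}

def link_by_gene_mention(variants, abstracts):
    """Join structured variant records to free-text documents that mention
    the same gene symbol (a crude stand-in for real entity linking)."""
    genes = {v["gene"] for v in variants}
    mentions = defaultdict(list)  # gene symbol -> ids of documents mentioning it
    for doc_id, text in abstracts.items():
        for gene in genes:
            # Whole-word match, so "GENE1" does not match inside "GENE10".
            if re.search(rf"\b{re.escape(gene)}\b", text):
                mentions[gene].append(doc_id)
    # Each variant record gains the sorted list of documents about its gene.
    return [{**v, "docs": sorted(mentions.get(v["gene"], []))} for v in variants]

for row in link_by_gene_mention(variants, abstracts):
    print(row["sample"], row["gene"], row["docs"])
```

With this toy data, S1 links to doc-1 and doc-2, S2 to doc-2, and S3 to nothing. A real pipeline would need synonym handling and proper entity recognition, which is where the unusual coupling of NLP and bioinformatics comes in.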

Analyzing Big Data example

Tackling the Problem – The Opportunity

While we are generating data at colossal volumes, are we prepared for it? In one respect, the difficulty resembles chopping wood and piling it up before the stove was ever invented. We can look at this question in two stages: (1) Platform and (2) Analytics “Super” Software.

On the platform side, Apache Hadoop, an open source software framework, enables the distributed processing of large data sets across clusters of commodity servers, an approach closely associated with cloud computing. IBM’s Platform Symphony is another example: grid management software suitable for a variety of distributed computing and big data analytics applications. Oracle, HP, SAP, and Software AG are also very much in the game in this $10 billion industry. While these giants offer a variety of solutions for distributed computing platforms, there is still a huge void at the level of Analytics Super Software.
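Hadoop's core processing model is MapReduce: map each input split to key/value pairs, group the pairs by key, then reduce each group. The sketch below imitates those three phases in a single Python process using word count, the classic example. It is not Hadoop's own API (native Hadoop jobs are usually written in Java), and the function names are my own.

```python
from collections import defaultdict
from itertools import chain

# Single-process imitation of the MapReduce phases. In Hadoop these phases
# run across a cluster; here everything runs locally for illustration only.

def map_phase(split):
    """Emit (word, 1) for every word in one input split."""
    return [(word.lower(), 1) for word in split.split()]

def shuffle_phase(pairs):
    """Group all emitted values by key, as the framework does between phases."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups

def reduce_phase(key, values):
    """Combine all values for one key; here, a simple sum."""
    return key, sum(values)

def word_count(splits):
    # In a real cluster, each split would be mapped in parallel on its own node.
    mapped = chain.from_iterable(map_phase(s) for s in splits)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(k, v) for k, v in grouped.items())

splits = ["big data is big", "data about data"]
print(word_count(splits))
```

In a real cluster, the framework moves the intermediate pairs between machines during the shuffle; the logic applied to each key stays about this simple.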

So, what would Super Software be doing at the top of the pyramid? This component would manage multiple applications under its umbrella, almost like an autopilot for an airplane. Its main function would be to discover new knowledge that would otherwise be impossible to acquire by manual means. To that end, discovery requires the following functions:

- Finding associations across information in any format

- Visualization of associations

- Search

- Categorization, compacting, summarization

- Characterization of new data (where it fits)

- Alerting

- Cleaning (deleting unnecessary clogging information)
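The first function on that list, finding associations, can start as simply as counting which entities appear together. Here is a minimal Python sketch under stated assumptions: each record, whatever its original format, has already been reduced to a set of extracted entities, and the records, entity names, and `min_support` threshold are all hypothetical. It is the pair-counting step of frequent-itemset mining, not a full discovery engine.

```python
from collections import Counter
from itertools import combinations

# Hypothetical records from different sources, each already reduced to a set
# of extracted entities (terms, tags, identifiers). Contents are made up.
records = [
    {"source": "papers", "entities": {"GENE1", "disease-x", "mutation"}},
    {"source": "clinic", "entities": {"GENE1", "disease-x"}},
    {"source": "social", "entities": {"disease-x", "symptom-y"}},
    {"source": "papers", "entities": {"GENE1", "disease-x", "symptom-y"}},
]

def find_associations(records, min_support=2):
    """Count how often each pair of entities appears in the same record and
    keep the pairs seen in at least `min_support` records."""
    pair_counts = Counter()
    for record in records:
        # sorted() makes each pair canonical, so (a, b) and (b, a) are merged.
        for pair in combinations(sorted(record["entities"]), 2):
            pair_counts[pair] += 1
    return {pair: n for pair, n in pair_counts.items() if n >= min_support}

# Print the surviving pairs, most frequent first.
for (a, b), n in sorted(find_associations(records).items(), key=lambda kv: -kv[1]):
    print(f"{a} <-> {b}: {n} records")
```

With the toy data, (GENE1, disease-x) appears in 3 records and (disease-x, symptom-y) in 2; every other pair falls below the threshold.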

In our ongoing genetics example, Super Software would be able to identify genetic patterns of a disease from human genome data, support them with clinical results reported in Medline, and further analyze them to reveal possible mutations using the FBI’s DNA bank of millions of DNA profiles. The scope and meaning of such top-level objectives can be extended further, limited only by our imagination.

Information overload and pollution are holding us back now

Then again, big data can also be a curse if cleaning (deleting) technologies are not considered part of the Super Software's operation. In my previous post, “information pollution”, I emphasized the danger of the uncontrollable growth of information, the invisible devil of the information age.
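Cleaning does not have to be exotic to be useful. As one hypothetical example, here is a small Python sketch that drops duplicate items by hashing a normalized form of each one (lower case, collapsed whitespace) and keeping only the first copy. The normalization rule and the sample items are my own assumptions; real systems would also need near-duplicate detection and retention policies.

```python
import hashlib

# Hypothetical cleaning pass: remove exact duplicates after light
# normalization, keeping the first occurrence of each item.

def normalise(text):
    """Collapse whitespace and case so trivially different copies match."""
    return " ".join(text.lower().split())

def deduplicate(items):
    """Return items in original order with normalized duplicates removed."""
    seen = set()   # SHA-256 digests of normalized items already kept
    kept = []
    for item in items:
        digest = hashlib.sha256(normalise(item).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            kept.append(item)
    return kept

items = [
    "Big data can be a curse.",
    "big  data can be a CURSE.",
    "Cleaning must be part of the design.",
]
print(deduplicate(items))
```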

With big data, we have finally reached a crossroads where computers will “have” to think on behalf of humans to discover new knowledge. There is honestly no turning back; we have reached the limit of our mental capacity to cope with information, let alone absorb it and process it into knowledge.

There are a few startup companies headed in this particular direction, which I intend to cover in a future article. I welcome your input in identifying those startups, as well as your concerns and interests.

Photo credit: Big Data monolith – courtesy © rolffimages – Fotolia.com, Information Overload – courtesy © Sergey Nivens – Fotolia.com