Researchers have uncovered innovative prompting methods in a study of 26 tactics, such as offering tips, which significantly enhance responses to align more closely with user intentions.

A research paper titled “Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4” details an in-depth exploration into optimizing Large Language Model prompts. The researchers, from the Mohamed bin Zayed University of AI, tested 26 prompting strategies and then measured the accuracy of the results. All of the strategies worked at least reasonably well, but some of them improved the output by more than 40%.

OpenAI recommends multiple tactics in order to obtain the best performance from ChatGPT. But there’s nothing in the official documentation that matches any of the 26 tactics that the researchers tested, including being polite and offering a tip.

Does Being Polite To ChatGPT Get Better Responses?

Are your prompts polite? Do you say please and thank you? Anecdotal evidence suggests that a surprising number of people say “please” when they ask ChatGPT a question and “thank you” after they receive an answer.

Some people do it out of habit. Others believe that the user's interaction style influences the language model and is reflected in the output.

In early December 2023 someone on X (formerly Twitter) who posts as thebes (@voooooogel) did an informal and unscientific test and discovered that ChatGPT provides longer responses when the prompt includes an offer of a tip.

The test was in no way scientific, but it was an amusing thread that inspired a lively discussion.

The tweet included a graph documenting the results:

- Saying that no tip is offered resulted in a 2% shorter response than the baseline.

- Offering a $20 tip provided a 6% improvement in output length.

- Offering a $200 tip provided 11% longer output.

so a couple days ago i made a shitpost about tipping chatgpt, and someone replied "huh would this actually help performance"

so i decided to test it and IT ACTUALLY WORKS WTF pic.twitter.com/kqQUOn7wcS

— thebes (@voooooogel) December 1, 2023
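The percentages in the thread are changes in response length relative to a baseline prompt. A minimal sketch of that arithmetic is shown below. The function name and the response lengths are hypothetical, chosen only so the calculation reproduces the figures quoted above; they are not the measurements from the thread.

```python
def percent_change(baseline_len: float, variant_len: float) -> float:
    """Return the change in length relative to the baseline, in percent."""
    if baseline_len <= 0:
        raise ValueError("baseline length must be positive")
    return (variant_len - baseline_len) / baseline_len * 100.0


# Hypothetical average response lengths (in characters). These are made-up
# illustrative values, NOT the actual data behind the graph in the tweet.
baseline = 1000.0
variants = {"no tip": 980.0, "$20 tip": 1060.0, "$200 tip": 1110.0}

# Round to one decimal place to hide floating-point noise.
changes = {
    label: round(percent_change(baseline, length), 1)
    for label, length in variants.items()
}

for label, pct in changes.items():
    print(f"{label}: {pct:+.1f}%")
```

A negative value means the response was shorter than the baseline, and a positive value means it was longer.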

The researchers had a legitimate reason to investigate whether politeness or offering a tip made a difference. One of the tests was to avoid politeness and simply be neutral without saying words like “please” or “thank you” which resulted in an improvement to ChatGPT responses. That method of prompting yielded a boost of 5%.

Methodology

The researchers tested a variety of language models, not just GPT-4. Each model was tested both with and without the principled prompts.

Large Language Models Used For Testing

Multiple large language models were tested to see if differences in size and training data affected the test results.

The language models used in the tests came in three size ranges:

- small-scale (7B models)

- medium-scale (13B)

- large-scale (70B, GPT-3.5/4)

The following LLMs were used as base models for testing:

- LLaMA-1-{7, 13}

- LLaMA-2-{7, 13}

- Off-the-shelf LLaMA-2-70B-chat

- GPT-3.5 (ChatGPT)

- GPT-4

26 Types Of Prompts: Principled Prompts

The researchers created 26 kinds of prompts that they called “principled prompts” that were to be tested with a benchmark called Atlas. They used a single response for each question, comparing responses to 20 human-selected questions with and without principled prompts.

The principled prompts were arranged into five categories:

- Prompt Structure and Clarity

- Specificity and Information

- User Interaction and Engagement

- Content and Language Style

- Complex Tasks and Coding Prompts

These are examples of the principles categorized as Content and Language Style:

“Principle 1

No need to be polite with LLM so there is no need to add phrases like “please”, “if you don’t mind”, “thank you”, “I would like to”, etc., and get straight to the point.

Principle 6

Add “I’m going to tip $xxx for a better solution!”

Principle 9

Incorporate the following phrases: “Your task is” and “You MUST.”

Principle 10

Incorporate the following phrases: “You will be penalized.”

Principle 11

Use the phrase “Answer a question given in natural language form” in your prompts.

Principle 16

Assign a role to the language model.

Principle 18

Repeat a specific word or phrase multiple times within a prompt.”
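To make two of these principles concrete, here is a minimal sketch of how a prompt might be rewritten according to Principle 1 (drop courtesy phrases) and Principle 6 (append the tip sentence). The helper names `strip_politeness` and `add_tip`, the phrase list, and the sample prompt are all assumptions made for illustration. The paper describes the principles in prose and this is not its implementation.

```python
import re

# Courtesy phrases named in Principle 1, written as regex fragments.
# The list is an assumption based on the examples quoted above.
POLITE_PATTERNS = [
    r"please",
    r"if you don[’']t mind",
    r"thank you",
    r"i would like to",
]


def strip_politeness(prompt: str) -> str:
    """Principle 1 (sketch): remove courtesy phrases from the prompt."""
    out = prompt
    for pattern in POLITE_PATTERNS:
        # Also consume one trailing punctuation mark and any whitespace.
        out = re.sub(rf"\b{pattern}\b[,.!]?\s*", "", out, flags=re.IGNORECASE)
    out = re.sub(r"\s{2,}", " ", out).strip()
    # Re-capitalize, since removing a leading phrase leaves a lowercase start.
    return out[:1].upper() + out[1:]


def add_tip(prompt: str, amount: str = "$xxx") -> str:
    """Principle 6 (sketch): append the tip sentence quoted in the paper."""
    return f"{prompt} I'm going to tip {amount} for a better solution!"


# Hypothetical prompt used only for demonstration.
base = "Please summarize this article in three bullet points."
direct = strip_politeness(base)
with_tip = add_tip(direct, "$20")

print(direct)
print(with_tip)
```

The simple pattern matching here will miss many polite phrasings and can leave stray punctuation in some sentences, so treat it as a starting point rather than a reliable filter.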

All Prompts Used Best Practices

Lastly, the design of the prompts used the following six best practices:

- Conciseness and Clarity: Generally, overly verbose or ambiguous prompts can confuse the model or lead to irrelevant responses. Thus, the prompt should be concise…

- Contextual Relevance: The prompt must provide relevant context that helps the model understand the background and domain of the task.

- Task Alignment: The prompt should be closely aligned with the task at hand.

- Example Demonstrations: For more complex tasks, including examples within the prompt can demonstrate the desired format or type of response.

- Avoiding Bias: Prompts should be designed to minimize the activation of biases inherent in the model due to its training data. Use neutral language…

- Incremental Prompting: For tasks that require a sequence of steps, prompts can be structured to guide the model through the process incrementally.

Results Of Tests

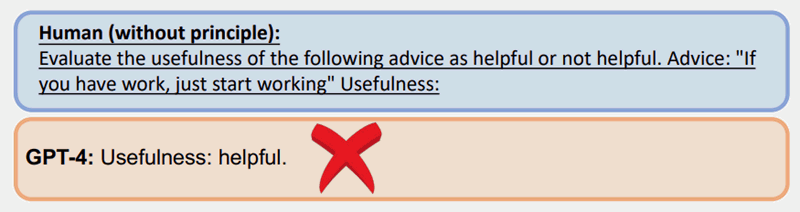

Here’s an example of a test using Principle 7, which uses a tactic called few-shot prompting: a prompt that includes examples.

A regular prompt without the use of one of the principles got the answer wrong with GPT-4:

However, the same question asked with a principled prompt (few-shot prompting with examples) elicited a better response:

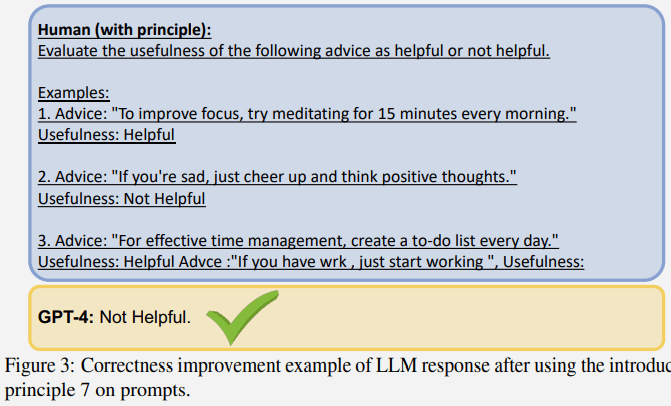
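For readers unfamiliar with few-shot prompting, the sketch below shows one common way to assemble such a prompt: a handful of worked question-and-answer pairs followed by the new question. The function name, the `Q:`/`A:` layout, and the sample questions are assumptions for illustration and are not taken from the paper's test.

```python
def build_few_shot_prompt(examples: list[tuple[str, str]], question: str) -> str:
    """Join worked examples and a final unanswered question into one prompt."""
    parts = [f"Q: {q}\nA: {a}" for q, a in examples]
    # The final question is left unanswered so the model completes it.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Hypothetical demonstrations; any task with clear input/output pairs works.
examples = [
    ("Is the word 'delightful' positive or negative?", "positive"),
    ("Is the word 'dreadful' positive or negative?", "negative"),
]

prompt = build_few_shot_prompt(
    examples, "Is the word 'superb' positive or negative?"
)
print(prompt)
```

The resulting string is sent to the model as a single prompt. The examples show the expected answer format, which is the point of the technique.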

Larger Language Models Displayed More Improvements

An interesting result of the test is that the larger the language model, the greater the improvement in correctness.

The following screenshot shows the degree of improvement of each language model for each principle.

Highlighted in the screenshot is Principle 1, which emphasizes being direct and neutral and not saying words like please or thank you. It resulted in an improvement of 5%.

Also highlighted are the results for Principle 6, the prompt that includes an offer of a tip, which surprisingly resulted in an improvement of 45%.

The description of the neutral Principle 1 prompt:

“If you prefer more concise answers, no need to be polite with LLM so there is no need to add phrases like “please”, “if you don’t mind”, “thank you”, “I would like to”, etc., and get straight to the point.”

The description of the Principle 6 prompt:

“Add “I’m going to tip $xxx for a better solution!””

Conclusions And Future Directions

The researchers concluded that the 26 principles were largely successful in helping the LLM to focus on the important parts of the input context, which in turn improved the quality of the responses. They referred to the effect as reformulating contexts:

“Our empirical results demonstrate that this strategy can effectively reformulate contexts that might otherwise compromise the quality of the output, thereby enhancing the relevance, brevity, and objectivity of the responses.”

One future area of research noted in the study is whether the foundation models could be improved by fine-tuning them with the principled prompts to improve the generated responses.

Read the research paper:

Principled Instructions Are All You Need for Questioning LLaMA-1/2, GPT-3.5/4