Google has unveiled Gemini, its most capable artificial intelligence (AI) model to date, built with advanced multimodal capabilities.

Google presents the model as a leap forward in AI technology, reporting state-of-the-art performance against existing large language models (LLMs) across a range of benchmarks.

Sundar Pichai, CEO of Google and Alphabet, emphasized that AI is shaping a profound technological shift, potentially surpassing the impact of the mobile and web revolutions.

He highlighted the significance of AI in driving innovation and economic progress, enhancing human knowledge, creativity, and productivity.

What Is Google Gemini?

Developed by Google DeepMind, led by CEO and co-founder Demis Hassabis, Gemini stands as a testament to Google’s ongoing commitment to being an AI-first company.

I’m very excited to share our work on Gemini today! Gemini is a family of multimodal models that demonstrate really strong capabilities across the image, audio, video, and text domains. Our most-capable model, Gemini Ultra, advances the state of the art in 30 of 32 benchmarks,… pic.twitter.com/sQfxBy9tpT

— Jeff Dean (@🏡) (@JeffDean) December 6, 2023

The model showcases an impressive array of capabilities, particularly in its multimodal understanding – a feature allowing it to process and seamlessly combine different types of information, including text, code, audio, image, and video.

Google Gemini Models: Ultra, Pro, And Nano

Gemini 1.0, the first version of the model, comes in three variants: Gemini Ultra, Gemini Pro, and Gemini Nano.

Screenshot from DeepMind, December 2023

Each is optimized for specific tasks: Gemini Ultra for highly complex tasks, Gemini Pro for a wide range of tasks, and Gemini Nano for efficient on-device tasks.

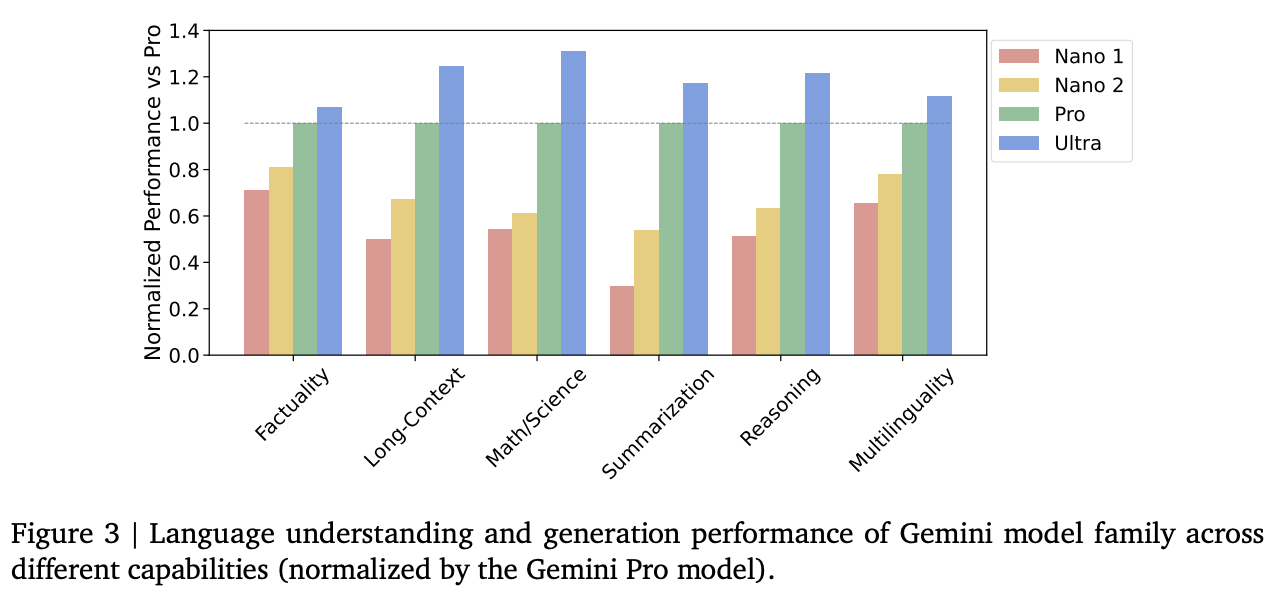

Screenshot from Google, December 2023

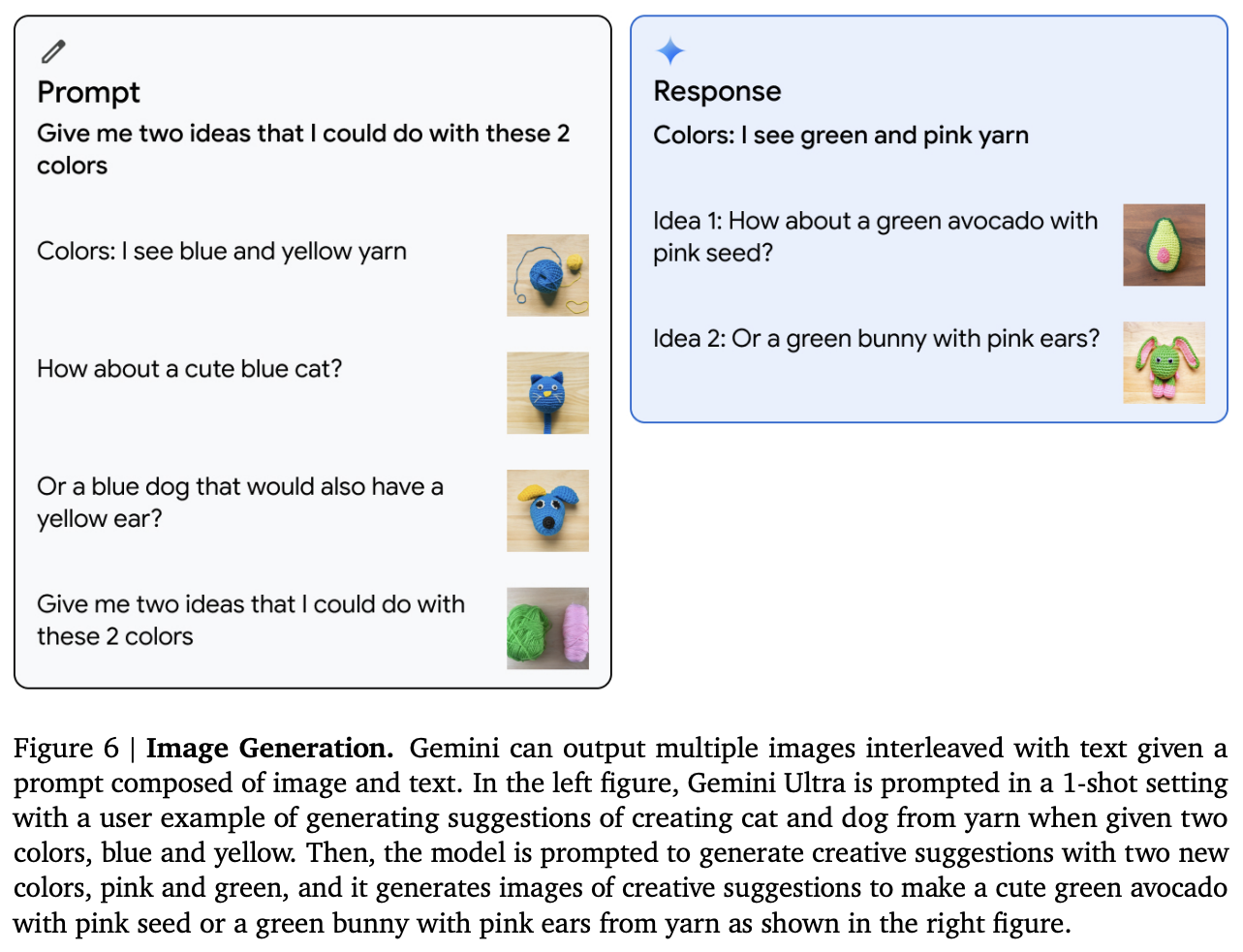

Google Gemini Performance: Text Benchmarks

According to Google, Gemini Ultra scored 90.0% on Massive Multitask Language Understanding (MMLU), surpassing human experts on that benchmark.

Additionally, Gemini Ultra outperforms existing models in 30 of the 32 widely used academic benchmarks in large language model research.

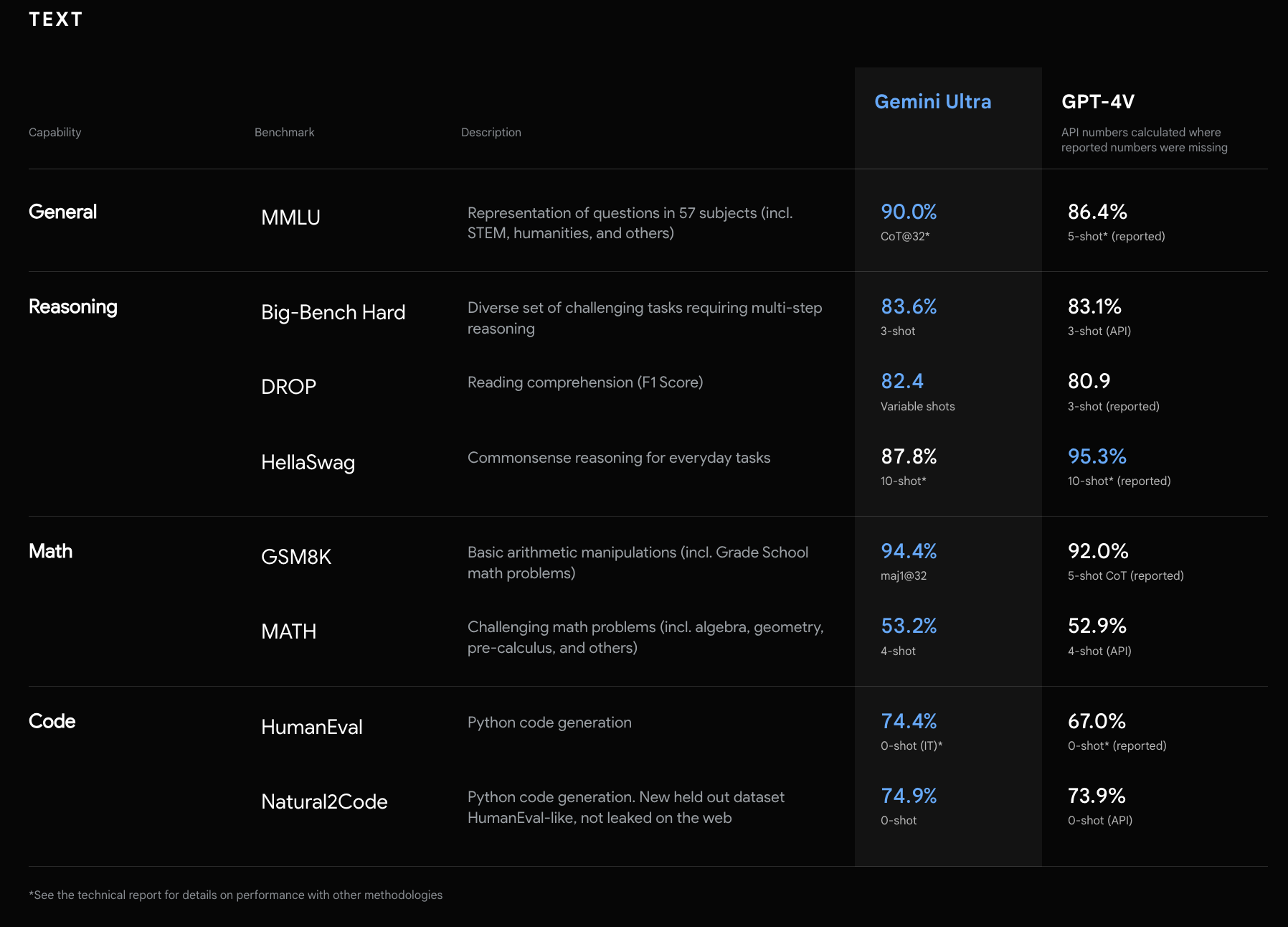

Screenshot from DeepMind, December 2023

Google Gemini Multimodal Capabilities And Performance

Gemini’s innovative approach to multimodality sets it apart from previous models.

Traditional multimodal models are often limited by their design, which involves training separate components for different modalities and then stitching them together.

In contrast, Gemini was built from the ground up to be natively multimodal, enabling it to understand and reason across various inputs far more effectively.

Screenshot from DeepMind, December 2023

This capability positions Gemini as a powerful tool in fields ranging from science to finance, where it can uncover insights from vast amounts of data and provide advanced reasoning in complex subjects like math and physics.

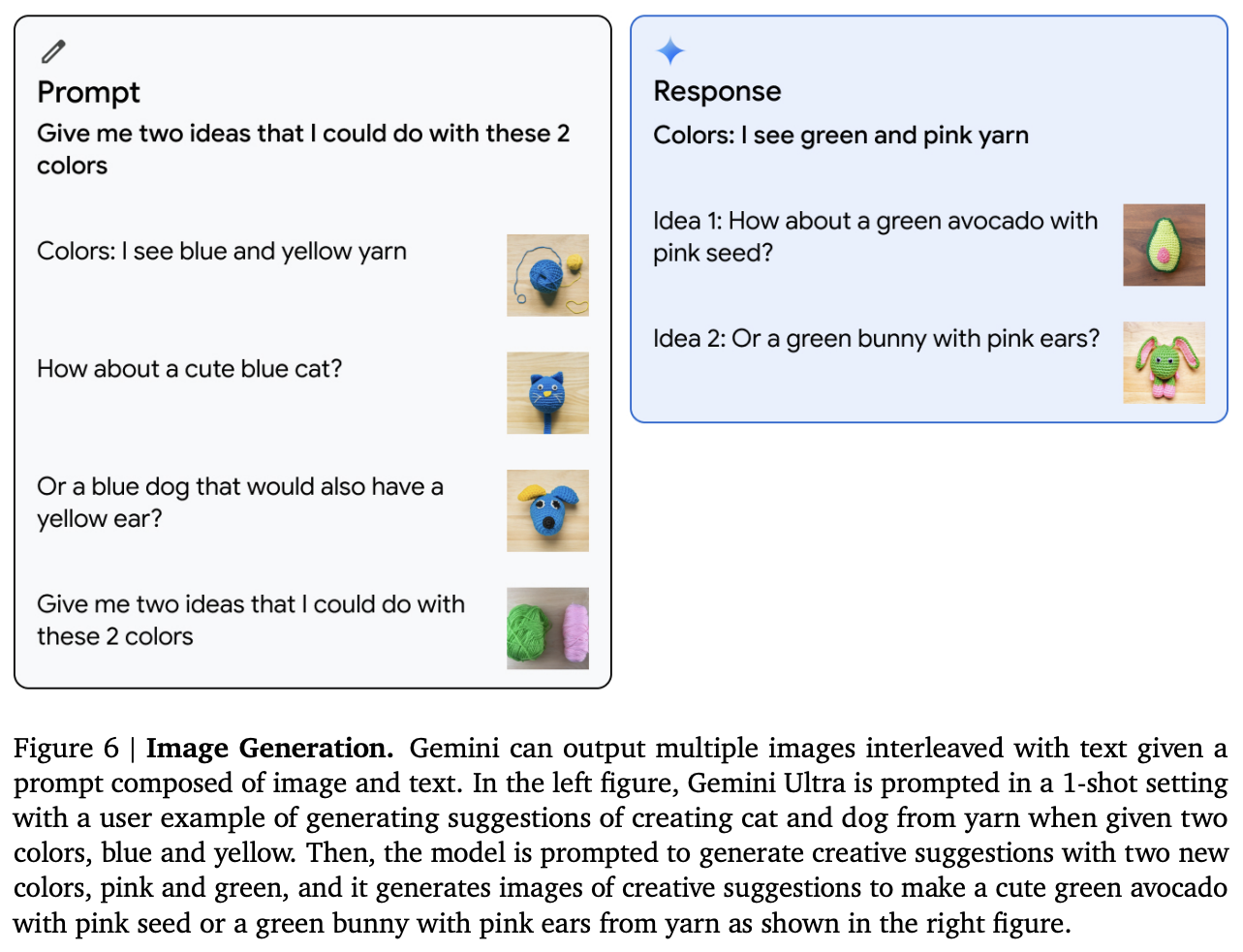

Examples from the Google DeepMind report on Gemini showcase the model's multimodal capabilities, such as image generation.

Screenshot from Google, December 2023

In this video, Google tests Gemini with its Emoji Kitchen.

Gemini can also handle combined text, image, and audio inputs, as shown below.

Screenshot from Google, December 2023

This video from Google offers more insight into Gemini's ability to process raw audio.

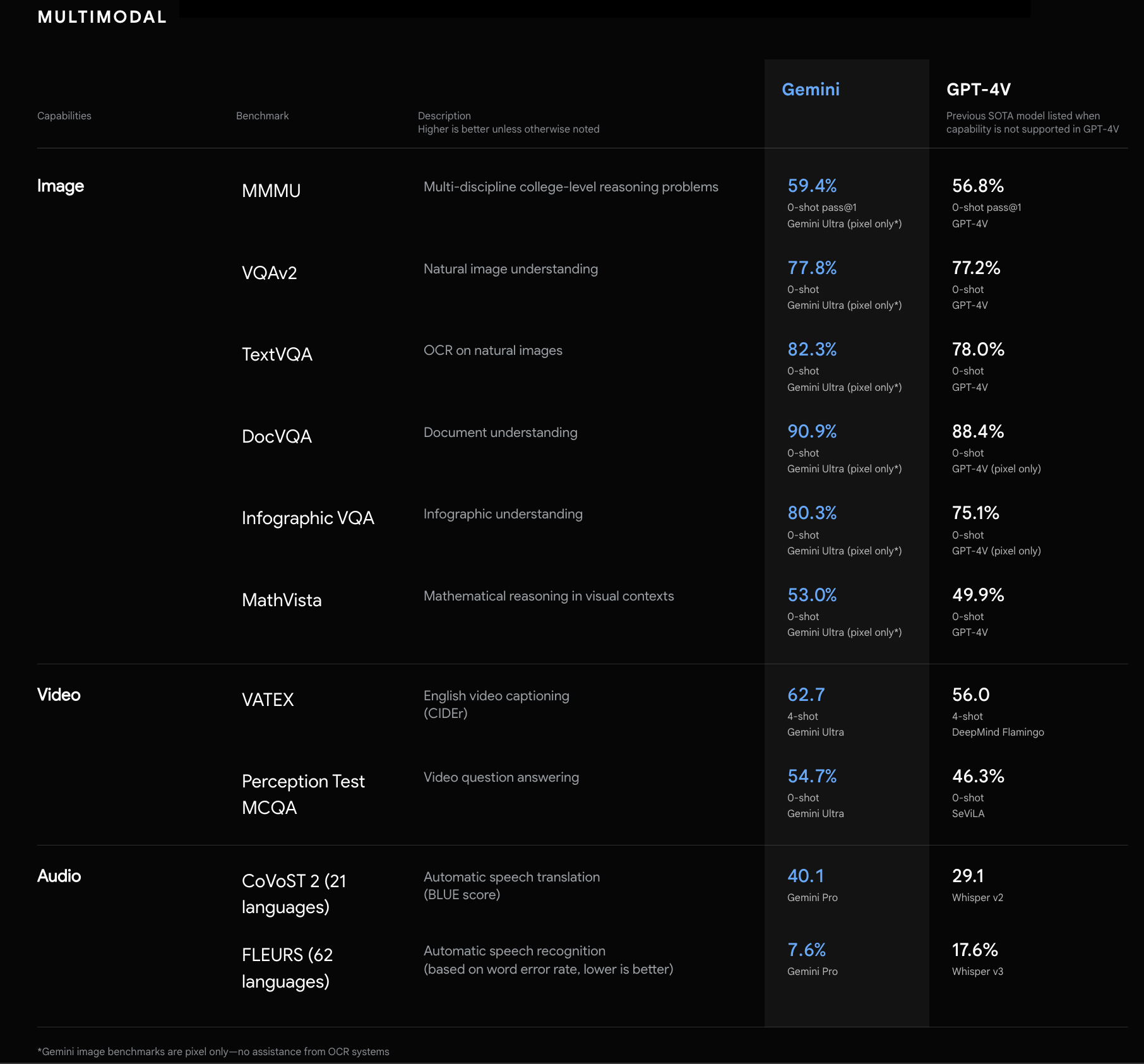

Gemini Benchmarks Against External Competitors

How does Google Gemini stack up to the top AI models from OpenAI, Inflection, Anthropic, Meta, and xAI? The following shows Gemini Ultra and Pro performance on text benchmarks against its competition.

Screenshot from Google, December 2023

Gemini Excels At Coding

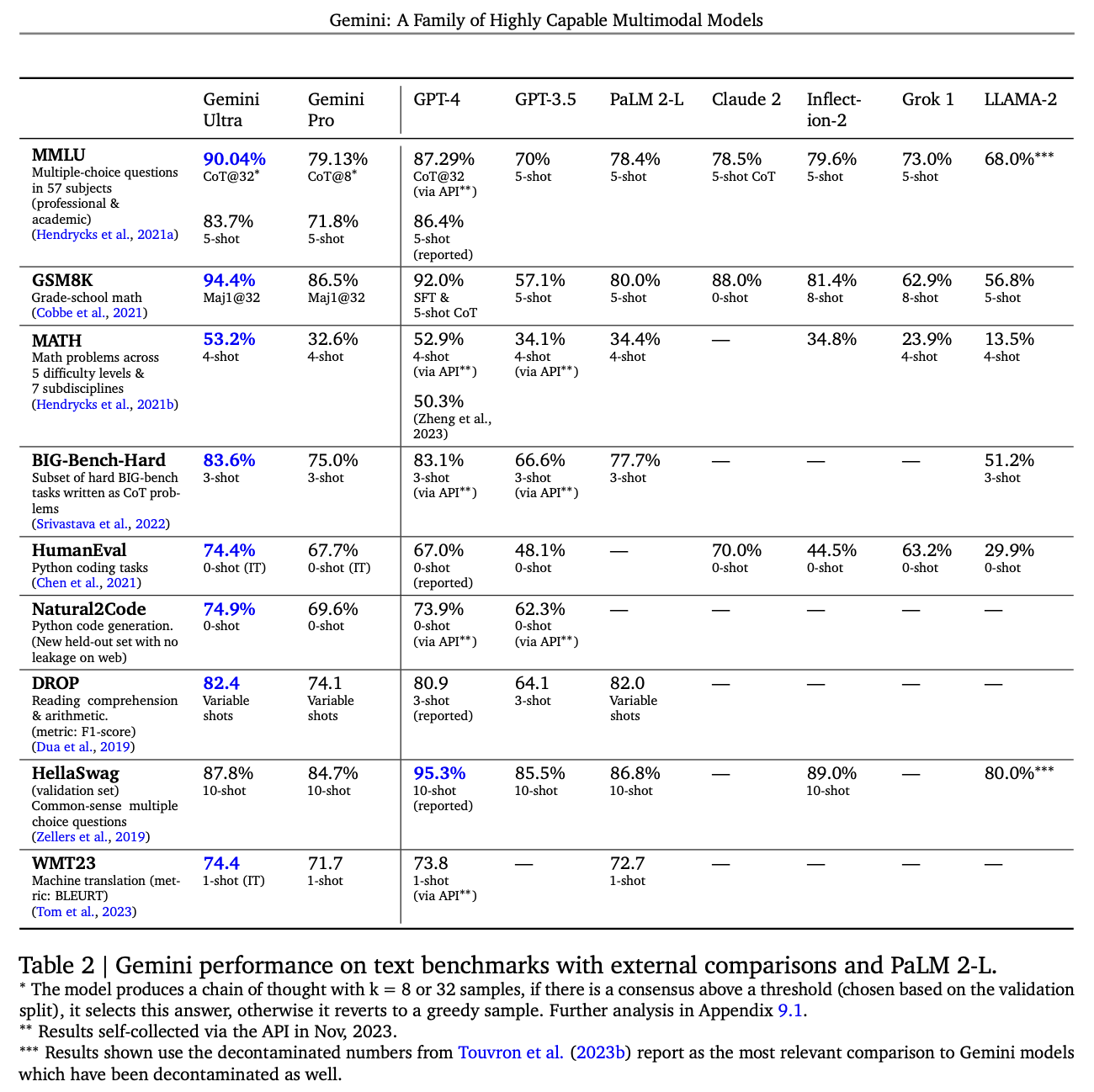

In addition to its multimodal capabilities, Gemini excels in coding tasks. Its ability to understand, explain, and generate high-quality code in multiple programming languages positions it as a leading model for coding.

Screenshot from Google, December 2023

Gemini also forms the basis for more advanced coding systems, such as AlphaCode 2, which significantly improves performance on competitive programming problems.

Gemini was trained on Google's in-house designed Tensor Processing Units (TPUs) v4 and v5e, which, according to Google, make it the company's most reliable and scalable model to train and serve.

Google Experimenting With Gemini For Search Generative Experience (SGE)

Google says it is already experimenting with Gemini in Search, where the model is making the Search Generative Experience (SGE) faster for users, with a 40% reduction in latency in English in the U.S., alongside improvements in quality.

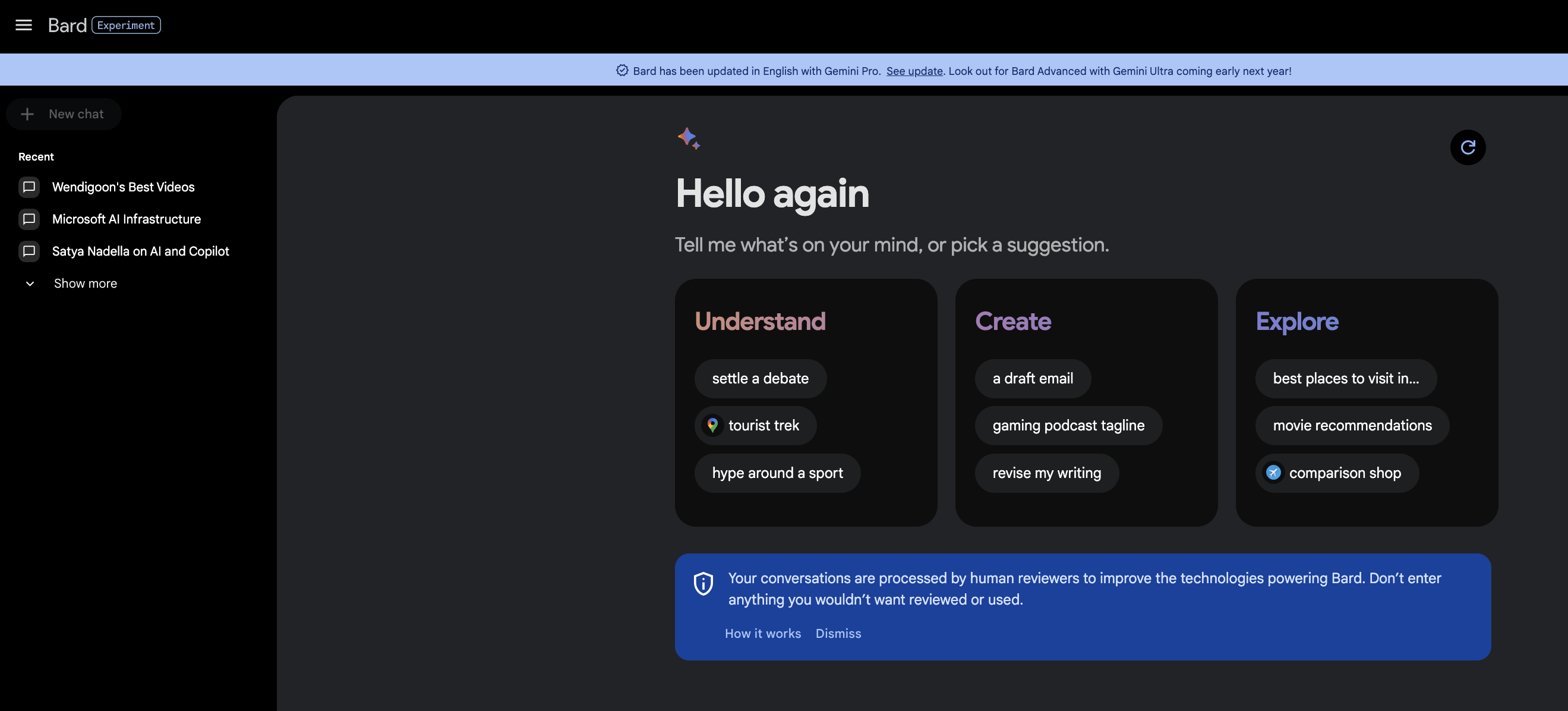

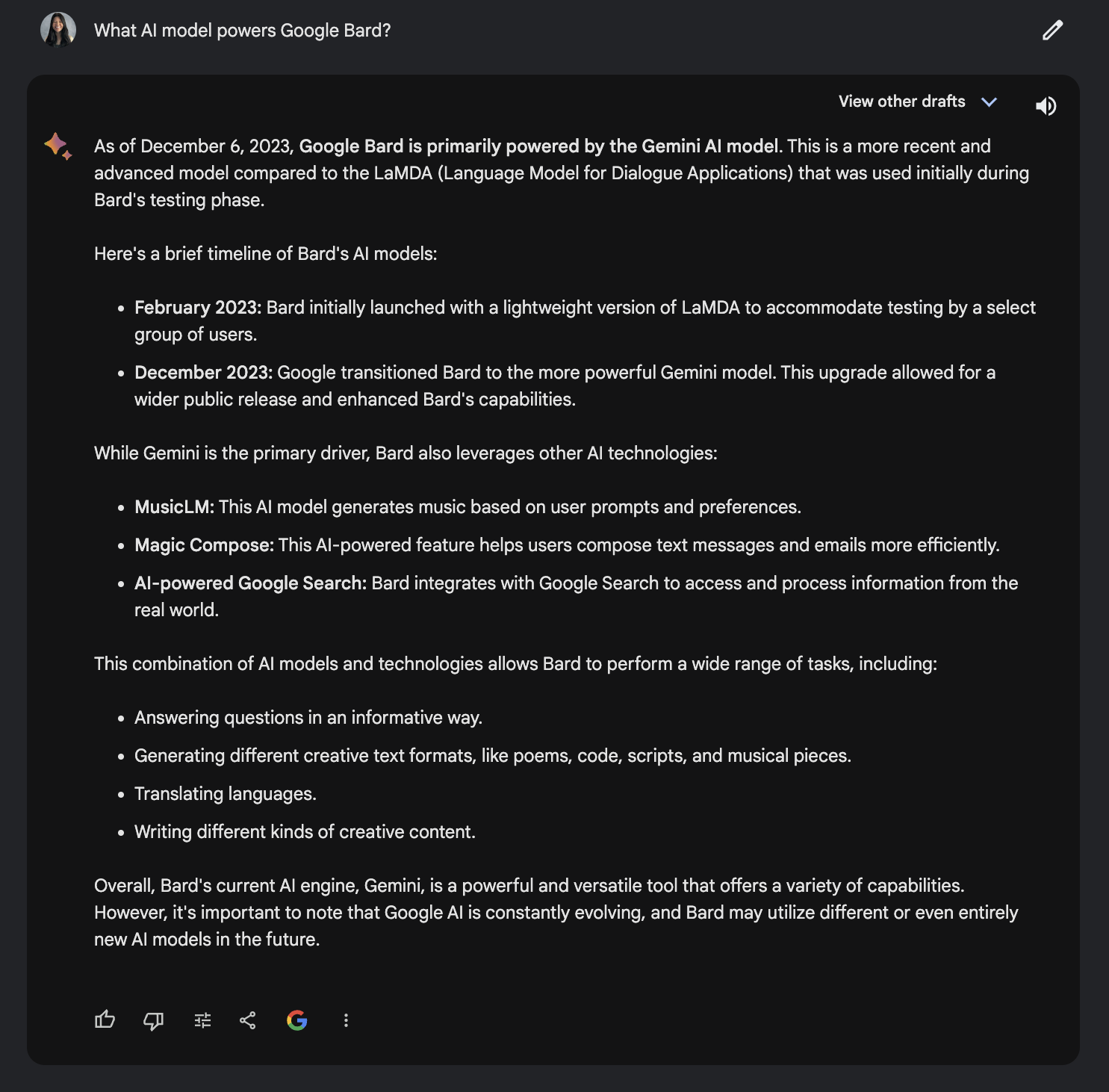

Google Bard Now Powered By Gemini Pro

Google has also announced a significant upgrade to Bard, integrating Gemini Pro to enhance the AI's capabilities.

Screenshot from Google Bard, December 2023

This upgrade marks the biggest enhancement Bard has received to date.

Gemini Pro has been fine-tuned within Bard to significantly improve its performance in understanding and summarizing information, reasoning, coding, and planning.

Screenshot from Google Bard, December 2023

Users can now try Bard powered by Gemini Pro for text-based interactions, with support for other modalities planned soon.

Powered by Gemini Pro, @Google Bard shares the best free resources for learning SEO. 📑 pic.twitter.com/HwKqN9m7A7

— Kristi Hines (@kristileilani) December 6, 2023

Initially available in English across more than 170 countries and territories, this upgrade will soon extend to additional languages and regions, including Europe.

Understanding Intent With Gemini For Personalized UX

This video demonstrates Gemini’s capability to understand user intent and create personalized user experiences.

It starts with understanding the user’s goal and gathering relevant information before reasoning and crafting a bespoke interface for exploration.

The user can interact with the interface and receive further information based on their needs, showcasing Gemini’s ability to adapt and deliver a personalized experience.

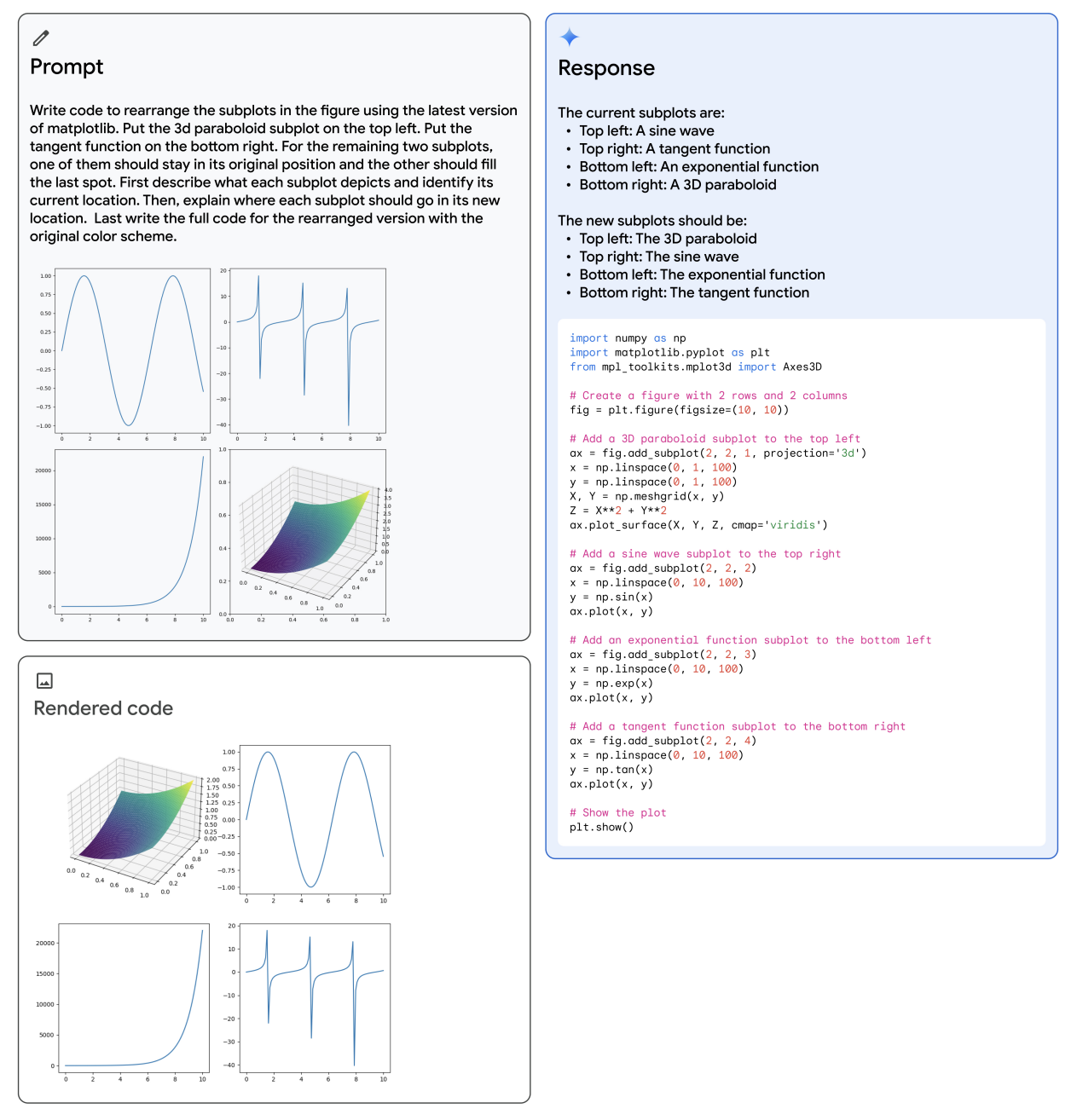

Multimodal Prompting With Gemini

On the Google for Developers blog, you will find examples of multimodal prompting with Gemini in action.

Multimodal prompting is a way of interacting with AI models in which you provide inputs in multiple forms, such as text and images, and receive responses generated by the model.

This prompting method combines text and image prompts to tackle a variety of tasks, from solving logical puzzles to understanding image sequences.
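To make the idea of a multimodal prompt more concrete, here is a minimal Python sketch that packs a text instruction and an image into a single request body. It assumes the JSON layout used by the Gemini API's `generateContent` REST method (a `contents` list whose `parts` hold `text` and `inline_data` entries). The helper name `build_multimodal_request` and the placeholder image bytes are hypothetical, and the sketch only builds the payload; it does not send anything to Google.

```python
import base64
import json


def build_multimodal_request(prompt: str, image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Hypothetical helper: combine a text part and an inline image part.

    Field names (contents -> parts -> text / inline_data) are assumed to
    follow the Gemini API REST format; verify against the current reference.
    """
    # Images are sent inline as base64-encoded strings.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "contents": [
            {
                "parts": [
                    {"text": prompt},  # the text half of the prompt
                    {"inline_data": {"mime_type": mime_type, "data": encoded}},  # the image half
                ]
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real image file (assumption for illustration).
    fake_image = b"\x89PNG\r\n\x1a\n"
    body = build_multimodal_request("What shape comes next in this sequence?", fake_image)
    print(json.dumps(body, indent=2))
```

Actually sending this body would also require an API key and an HTTP client; the exact endpoint and model names should be taken from Google's current Gemini API documentation rather than from this sketch.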

The examples also showcase Gemini's pattern recognition and reasoning skills.

In areas such as designing games or generating music queries, multimodal prompting helps with writing code and producing both text and image responses.

The integration with other tools and applications shows potential for practical and professional applications, such as in design, coding, and content creation.

Google Pixel 8 Pro: The First Smartphone With Built-In AI Powered By Gemini Nano

Google’s latest update introduces Gemini Nano, an advanced AI model, now integrated into the Pixel 8 Pro smartphone.

With this update, the Pixel 8 Pro becomes the first phone engineered to run Gemini Nano, powered by the Google Tensor G3 chip.

Key features include 'Summarize in Recorder' for on-device summarization of audio recordings and 'Smart Reply in Gboard' for context-aware text responses. Because they run on the device, these features work without a network connection, which also helps protect user privacy.

Additionally, Google announced upcoming enhancements for the Assistant with Bard experience in the Pixel lineup, further expanding AI capabilities.

The update also includes AI-driven improvements in photography and video, like enhanced video stabilization, Night Sight video, and Photo Unblur for clearer pet images.

For productivity, there are new tools like Dual Screen Preview on Pixel Fold, improved video calls using Pixel phones as webcams, and document scan cleaning.

Google Password Manager now supports passkeys, and Pixel devices gain new security features like Repair Mode. The Pixel Watch introduces convenient phone unlocking and call screening features, while the Pixel Tablet offers Clear Calling and spatial audio support.

Google also expands language support in its Recorder app and extends Direct My Call and Hold for Me features to more regions and devices.

Responsible AI Development

Google says it has prioritized responsible AI development, running comprehensive safety evaluations of Gemini, including checks for bias and toxicity.

The company collaborates with diverse external experts and partners to rigorously test the model and address potential risks.

How To Get Gemini

Gemini 1.0 is gradually being integrated across various Google products and platforms and will soon be accessible to developers and enterprise customers via Google AI Studio and Google Cloud Vertex AI.

As part of Google’s commitment to advancing AI responsibly, Gemini Ultra will undergo extensive trust and safety checks before its broader release.

The introduction of Gemini by Google marks a significant milestone in AI development.

Its advanced capabilities, ranging from sophisticated multimodal reasoning to efficient coding, signal the beginning of a new era in AI, opening up remarkable possibilities for innovation across multiple domains.

Featured image: VDB Photos/Shutterstock