Bing has announced an enhanced robots.txt tester tool. The tool fills an important need, because getting robots.txt wrong can lead to unexpected SEO outcomes. A correct robots.txt file is essential and a top priority for SEO.

A robots.txt file tells search engine crawlers which parts of a website they may and may not crawl. It is one of the few ways a publisher can exercise control over search engines.

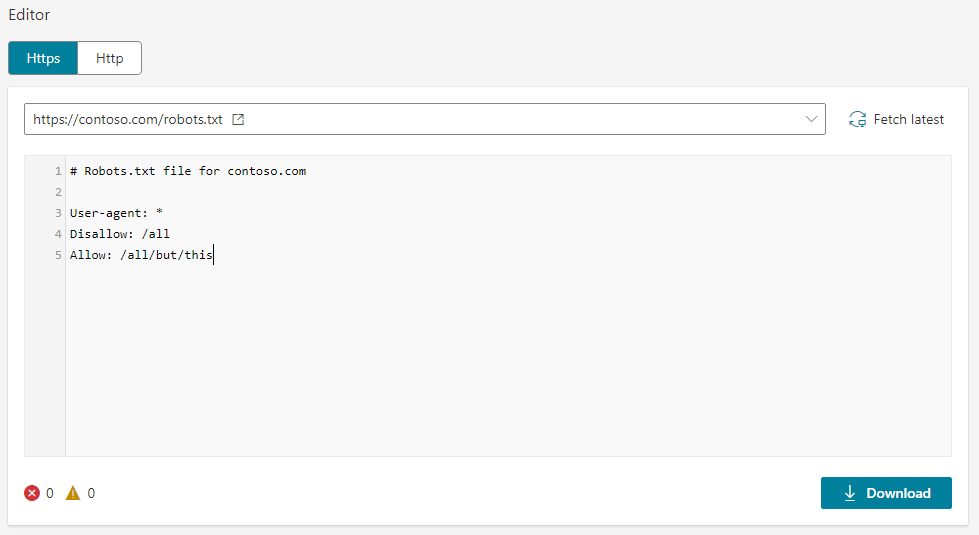

Screenshot of Bing Robots.txt Testing Tool

Even if you don’t need to block any search crawler, it’s still worth having a robots.txt file, so that crawler requests for it don’t generate needless 404 entries in your error logs.
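As a minimal sketch, the smallest robots.txt that blocks nothing is a single group with an empty Disallow line. The check below uses Python's standard-library `urllib.robotparser` to confirm that such a file permits every path; the `can_crawl` helper and the example.com URLs are hypothetical placeholders.

```python
from urllib.robotparser import RobotFileParser

# A minimal "allow everything" robots.txt: an empty Disallow value blocks nothing.
ALLOW_ALL = """\
User-agent: *
Disallow:
"""

def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: parse robots.txt text and test one URL for one crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also records the file as read
    return parser.can_fetch(user_agent, url)

for url in ("https://example.com/", "https://example.com/any/page.html"):
    print(url, can_crawl(ALLOW_ALL, "bingbot", url))
```

Both URLs come back as crawlable, so publishing this file avoids the 404 without restricting any crawler.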

Mistakes in a robots.txt file can result in search engines crawling pages they were never meant to crawl.

Unintended entries in a robots.txt file can also result in web pages not ranking because they are accidentally blocked.
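A common way pages get blocked by accident is a Disallow path that is shorter than intended, because rules match by path prefix. The sketch below, again using Python's `urllib.robotparser`, compares a hypothetical mistaken rule (`/blog`) with the intended one (`/blog/drafts/`); the file contents, helper, and URLs are all illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical mistake: the intent was to block only /blog/drafts/,
# but the rule was written as the much shorter prefix /blog.
MISTAKEN = """\
User-agent: *
Disallow: /blog
"""

# Intended rule: only the drafts directory is blocked.
FIXED = """\
User-agent: *
Disallow: /blog/drafts/
"""

def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: parse robots.txt text and test one URL for one crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

for url in (
    "https://example.com/blog/my-best-post",
    "https://example.com/blogroll",
    "https://example.com/blog/drafts/wip",
):
    # Columns: URL, allowed under MISTAKEN, allowed under FIXED
    print(url, can_crawl(MISTAKEN, "bingbot", url), can_crawl(FIXED, "bingbot", url))
```

Under the mistaken file, even unrelated pages such as `/blogroll` are blocked, since `/blogroll` starts with `/blog`.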

Errors in a robots.txt file are a common source of search ranking problems. That’s why Bing’s enhanced robots.txt tester tool is so important.

Be Proactive with Robots.txt Testing and Diagnostics

Publishers can now be proactive about reviewing and testing their robots.txt files.

Testing how a search crawler responds to a robots.txt file, and diagnosing possible issues, are important features that can help a publisher’s SEO.

The tool can also help consultants who perform search audits identify issues that need correcting.

Bing’s new tool fills an important need, as described by Bing:

“While robots exclusion protocol gives the power to inform web robots and crawlers which sections of a website should not be processed or scanned, the growing number of search engines and parameters have forced webmasters to search for their robots.txt file amongst the millions of folders on their hosting servers, editing them without guidance and finally scratching heads as the issue of that unwanted crawler still persists.”

These are the actions that the Bing robots.txt tool takes to provide actionable information:

- Analyzes robots.txt

- Identifies problems

- Guides publishers through the fetch and upload process

- Checks allow/disallow statements
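Checking allow/disallow statements largely comes down to how conflicting rules are resolved. RFC 9309, the Robots Exclusion Protocol standard, says the most specific (longest) matching rule wins, and that Allow should be preferred when an Allow and a Disallow rule are equivalent. The sketch below implements that rule for plain path prefixes only (no `*` or `$` wildcards) and measures length in characters, which matches octets for ASCII paths. It is an illustration, not Bing's implementation; the rule tuples and `is_allowed` are hypothetical. Note that Python's built-in `urllib.robotparser` instead applies the first matching rule in file order, so it can disagree with this result.

```python
from typing import List, Tuple

# Each rule is (directive, path_prefix), e.g. ("allow", "/private/press/").
Rule = Tuple[str, str]

def is_allowed(rules: List[Rule], path: str) -> bool:
    """Most-specific-match evaluation for plain prefix rules (no wildcards)."""
    best_len = -1
    allowed = True  # if no rule matches, the path may be crawled
    for directive, prefix in rules:
        if not prefix:
            continue  # an empty Allow/Disallow value matches nothing
        if path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_goes_to_allow = len(prefix) == best_len and directive == "allow"
            if longer or tie_goes_to_allow:
                best_len = len(prefix)
                allowed = directive == "allow"
    return allowed

rules = [("disallow", "/private/"), ("allow", "/private/press/")]
for path in ("/private/press/release", "/private/notes", "/public"):
    print(path, is_allowed(rules, path))
```

Because the longest match wins, reordering the rules does not change the outcome, which is the property that makes this approach easier to reason about than first-match evaluation.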


According to Bing:

“The Robots.txt tester helps webmasters to not only analyse their robots.txt file and highlight the issues that would prevent them from getting optimally crawled by Bing and other robots; but, also guides them step-by-step from fetching the latest file to uploading the same file at the appropriate address.

Webmasters can submit a URL to the robots.txt Tester tool and it operates as Bingbot and BingAdsBot would, to check the robots.txt file and verifies if the URL has been allowed or blocked accordingly.”
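Because the tester evaluates a URL as a specific crawler, the same robots.txt can give different answers to different bots. The sketch below uses Python's `urllib.robotparser` with a hypothetical file that gives `bingbot` its own group and blocks every other crawler. `OtherBot` is a placeholder name; the exact user-agent token of Bing's ads crawler is not given in the announcement, so it is not used here.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: bingbot gets its own group, everyone else is blocked.
ROBOTS_TXT = """\
User-agent: bingbot
Disallow: /private/

User-agent: *
Disallow: /
"""

def can_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Hypothetical helper: parse robots.txt text and test one URL for one crawler."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # Note: robotparser picks a group by simple case-insensitive substring
    # matching on the user-agent name, a simplification of real crawlers.
    return parser.can_fetch(user_agent, url)

for agent in ("bingbot", "OtherBot"):
    for url in ("https://example.com/products/widget", "https://example.com/private/report"):
        print(agent, url, can_crawl(ROBOTS_TXT, agent, url))
```

Here `bingbot` may crawl everything except `/private/`, while any crawler without its own group falls back to the `*` group and is blocked entirely.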

A useful feature of the robots.txt tester tool is that its editor displays four versions of the robots.txt file, corresponding to the secure (HTTPS) and insecure (HTTP) versions of the site, each with and without the www prefix:

- http://

- https://

- http://www.

- https://www.
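Each of those four combinations is a separate protocol and host, and a robots.txt file only governs the protocol and host it is served from, so each location is worth checking. A small sketch that lists the four robots.txt URLs for a domain, in the order listed above; `robots_txt_urls` and example.com are hypothetical placeholders.

```python
from typing import List

def robots_txt_urls(domain: str) -> List[str]:
    """List the robots.txt URL for each scheme/host combination of a domain."""
    bare = domain[4:] if domain.startswith("www.") else domain
    urls = []
    for host in (bare, "www." + bare):      # without, then with, the www prefix
        for scheme in ("http", "https"):    # insecure, then secure
            urls.append(f"{scheme}://{host}/robots.txt")
    return urls

for url in robots_txt_urls("example.com"):
    print(url)
```

Passing either `example.com` or `www.example.com` produces the same four URLs, since the `www.` prefix is stripped before the variants are built.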

Read the official announcement:

Bing Webmaster Tools Makes it Easy to Edit and Verify Your Robots.txt