Generative AI is becoming the foundation of more content, leaving many questioning the reliability of their AI detector.

In response, several studies have been conducted on the efficacy of AI detection tools to discern between human and AI-generated content.

We will break these studies down to help you learn more about how AI detectors work, show you an example of AI detectors in action, and help you decide if you can trust the tools – or the studies.

Are AI Detectors Biased?

Researchers uncovered that AI content detectors – those meant to detect content generated by GPT – might have a significant bias against non-native English writers.

The study found that these detectors, designed to differentiate between AI and human-generated content, consistently misclassify non-native English writing samples as AI-generated while accurately identifying native English writing samples.

Using writing samples from native and non-native English writers, researchers found that the detectors misclassified over half of the latter samples as AI-generated.

Interestingly, the study also revealed that simple prompting strategies, such as “Elevate the provided text by employing literary language,” could mitigate this bias and effectively bypass GPT detectors.

Screenshot from Arxiv.org, July 2023

The findings suggest that GPT detectors may unintentionally penalize writers with constrained linguistic expressions, underscoring the need for increased focus on fairness and robustness within these tools.

That could have significant implications, particularly in evaluative or educational settings, where non-native English speakers may be inadvertently penalized or excluded from global discourse. Left unaddressed, it could lead to “unjust consequences and the risk of exacerbating existing biases.”

Researchers also highlight the need for further research into addressing these biases and refining the current detection methods to ensure a more equitable and secure digital landscape for all users.

Can You Beat An AI Detector?

In a separate study on AI-generated text, researchers document substitution-based in-context example optimization (SICO), a method that allows large language models (LLMs) like ChatGPT to evade detection by AI-generated text detectors.

The study used three tasks to simulate real-life usage scenarios of LLMs where detecting AI-generated text is crucial, including academic essays, open-ended questions and answers, and business reviews.

The study also tested SICO against six representative detectors – including training-based models, statistical methods, and APIs. SICO consistently outperformed other methods across all detectors and datasets.

Researchers found that SICO was effective in all of the usage scenarios tested. In many cases, the text generated by SICO was indistinguishable from human-written text.

However, they also highlighted the potential misuse of this technology. Because SICO can help AI-generated text evade detection, malicious actors could also use it to create misleading or false information that appears human-written.

Both studies point to the rate at which generative AI development outpaces that of AI text detectors, with the second emphasizing a need for more sophisticated detection technology.

Those researchers suggest that integrating SICO during the training phase of AI detectors could enhance their robustness and that the core concept of SICO could be applied to various text generation tasks, opening up new avenues for future research in text generation and in-context learning.

Do AI Detectors Lean Towards Human Classification?

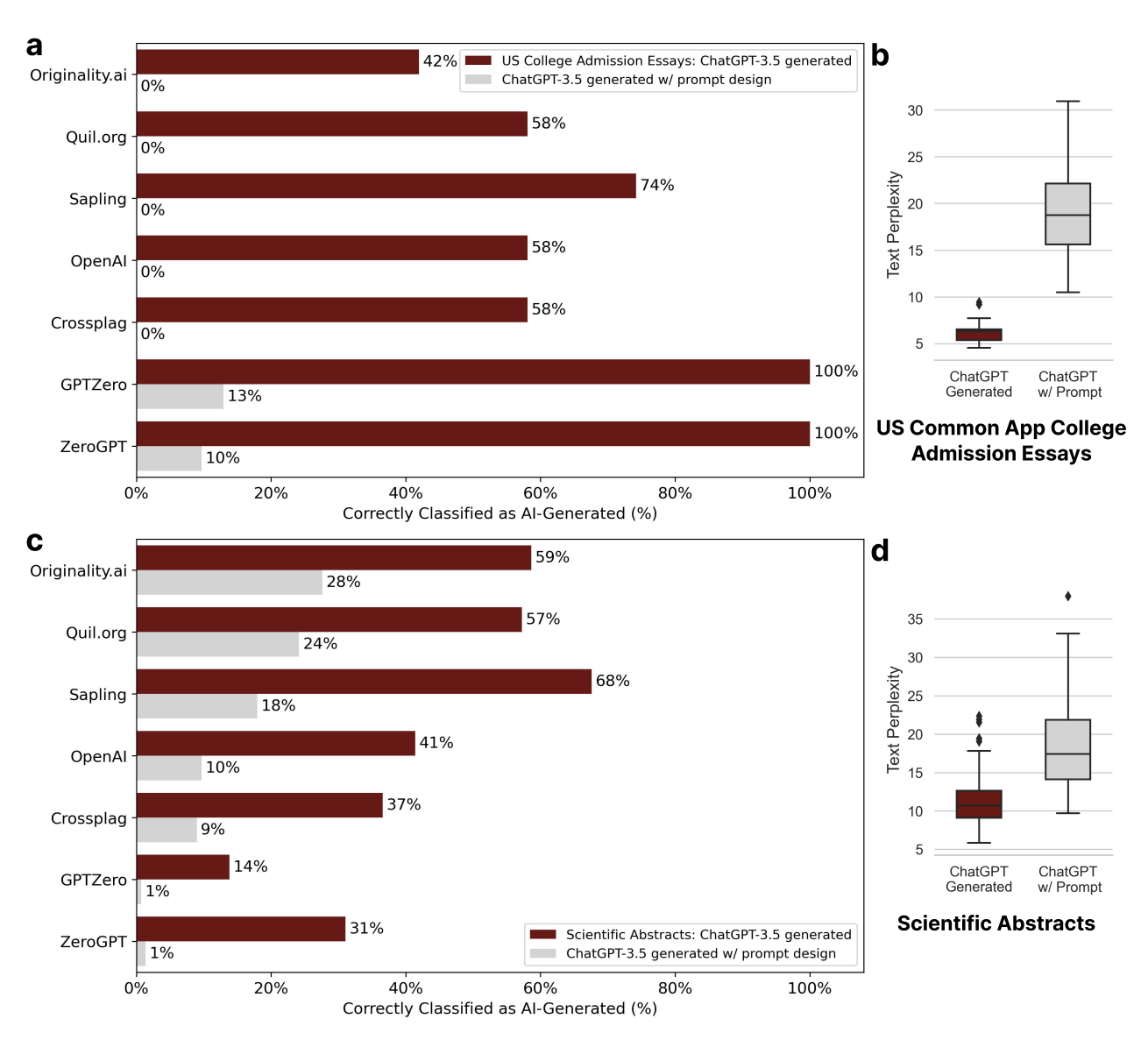

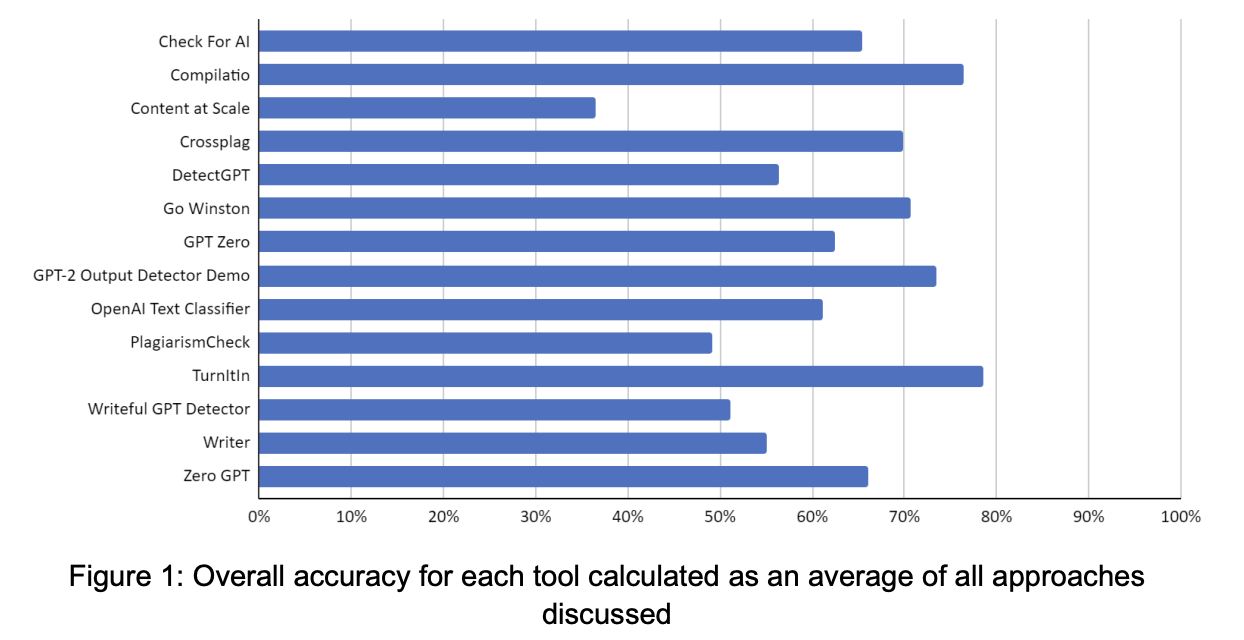

The researchers behind a third study reviewed previous studies on the reliability of AI detectors, then added their own data, publishing several findings about these tools.

- Aydin & Karaarslan (2022) revealed that iThenticate, a popular plagiarism detection tool, found high match rates with ChatGPT-paraphrased text.

- Wang et al. (2023) found that it is harder to detect AI-generated code than natural language content. Moreover, some tools exhibited bias, leaning towards identifying text as AI-generated or human-written.

- Pegoraro et al. (2023) found that detecting ChatGPT-generated text is highly challenging, with the most efficient tool achieving a success rate of less than 50%.

- Van Oijen (2023) revealed that the overall accuracy of tools in detecting AI-generated text was only around 28%, with the best tool achieving just 50% accuracy. Conversely, these tools were more effective (about 83% accuracy) in detecting human-written content.

- Anderson et al. (2023) observed that paraphrasing notably reduced the efficacy of the GPT-2 Output Detector.

Researchers then ran 14 AI-generated text detection tools against several dozen test cases in different categories, including:

- Human-written text.

- Translated text.

- AI-generated text.

- AI-generated text with human edits.

- AI-generated text with AI paraphrasing.

These tests were evaluated using the following:

Screenshot from Arxiv.org, July 2023

Turnitin emerged as the most accurate tool across all approaches, followed by Compilatio and GPT-2 Output Detector.

However, most of the tools tested showed bias toward classifying human-written text accurately, compared to AI-generated or modified text.

While that outcome is desirable in academic contexts, the study and others highlighted the risk of false accusations and undetected cases. False positives were minimal in most tools, except for GPTZero, which exhibited a high rate.

Undetected cases were a concern, particularly for AI-generated texts that underwent human editing or machine paraphrasing. Most tools struggled to detect such content, posing a potential threat to academic integrity and fairness among students.
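The two risks named above map onto two standard error rates: false accusations are false positives (human text flagged as AI), and undetected cases are false negatives (AI text passed as human). The sketch below shows how those rates could be computed from a set of labeled verdicts. The `Result` type, the `error_rates` function, and the toy verdicts are all hypothetical and made up for illustration; they are not data or code from the study.

```python
from dataclasses import dataclass


@dataclass
class Result:
    is_ai: bool       # ground truth: was the text AI-generated?
    flagged_ai: bool  # detector verdict: did the tool call it AI?


def error_rates(results):
    """Return (false_positive_rate, false_negative_rate).

    False positive: human-written text flagged as AI (a false accusation).
    False negative: AI-generated text passed as human (an undetected case).
    """
    human = [r for r in results if not r.is_ai]
    ai = [r for r in results if r.is_ai]
    false_positives = sum(r.flagged_ai for r in human)
    false_negatives = sum(not r.flagged_ai for r in ai)
    fpr = false_positives / len(human) if human else 0.0
    fnr = false_negatives / len(ai) if ai else 0.0
    return fpr, fnr


# Made-up toy verdicts for illustration only, not results from the study.
toy = [
    Result(is_ai=False, flagged_ai=False),
    Result(is_ai=False, flagged_ai=False),
    Result(is_ai=False, flagged_ai=False),
    Result(is_ai=False, flagged_ai=True),
    Result(is_ai=True, flagged_ai=True),
    Result(is_ai=True, flagged_ai=False),
    Result(is_ai=True, flagged_ai=False),
    Result(is_ai=True, flagged_ai=False),
]

fpr, fnr = error_rates(toy)
print(f"false positive rate: {fpr:.2f}")  # 0.25
print(f"false negative rate: {fnr:.2f}")  # 0.75
```

A tool with this toy profile would rarely accuse a human writer but would miss most AI-generated text, which is the general pattern the study describes for most of the tools it tested.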

The evaluation also revealed technical difficulties with tools.

Some experienced server errors or had limitations in accepting certain input types, such as computer code. Others encountered calculation issues, and handling results in some tools proved challenging.

Researchers suggested that addressing these limitations will be crucial for effectively implementing AI-generated text detection tools in educational settings, ensuring accurate detection of misconduct while minimizing false accusations and undetected cases.

How Accurate Are These Studies?

Should you trust AI detection tools based on the results of these studies?

The more important question might be whether you should trust these studies about AI detection tools.

I sent the third study mentioned above to Jonathan Gillham, founder of Originality.ai. He had a few very detailed and insightful comments.

To begin with, Originality.ai was not meant for the education sector. Other AI detectors tested may not have been created for that environment either.

“The requirement for the use within academia is that it produces an enforceable response. This is part of why we explicitly communicate (at the top of our homepage) that our tool is for Digital Marketing and NOT Academia,” Gillham said.

The ability to evaluate multiple articles submitted by the same writer (not a student) and make an informed judgment call is a far better use case than making consequential decisions on a single paper submitted by a student.

The definition of AI-generated content may vary between what the study indicates and what each AI-detection tool identifies. Gillham included the following as a reference for the various meanings of AI and human-generated content.

- AI-Generated and Not Edited = AI-Generated text.

- AI-Generated and Human Edited = AI-Generated text.

- AI Outline, Human Written, and heavily AI Edited = AI-Generated text.

- AI Research and Human Written = Original Human-Generated.

- Human Written and Edited with Grammarly = Original Human-Generated.

- Human Written and Human Edited = Original Human-Generated.

Some categories in the study tested AI-translated text, expecting it to be classified as human. For example, on page 10 of the study, it states:

For the second category (called 02-MT), around 10.000 characters (including spaces) were written in Bosnian, Czech, German, Latvian, Slovak, Spanish, and Swedish. None of this texts may have been exposed to the Internet before, as for 01-Hum. Depending on the language, either the AI translation tool DeepL (3 cases) or Google Translate (6 cases) was used to produce the test documents in English.

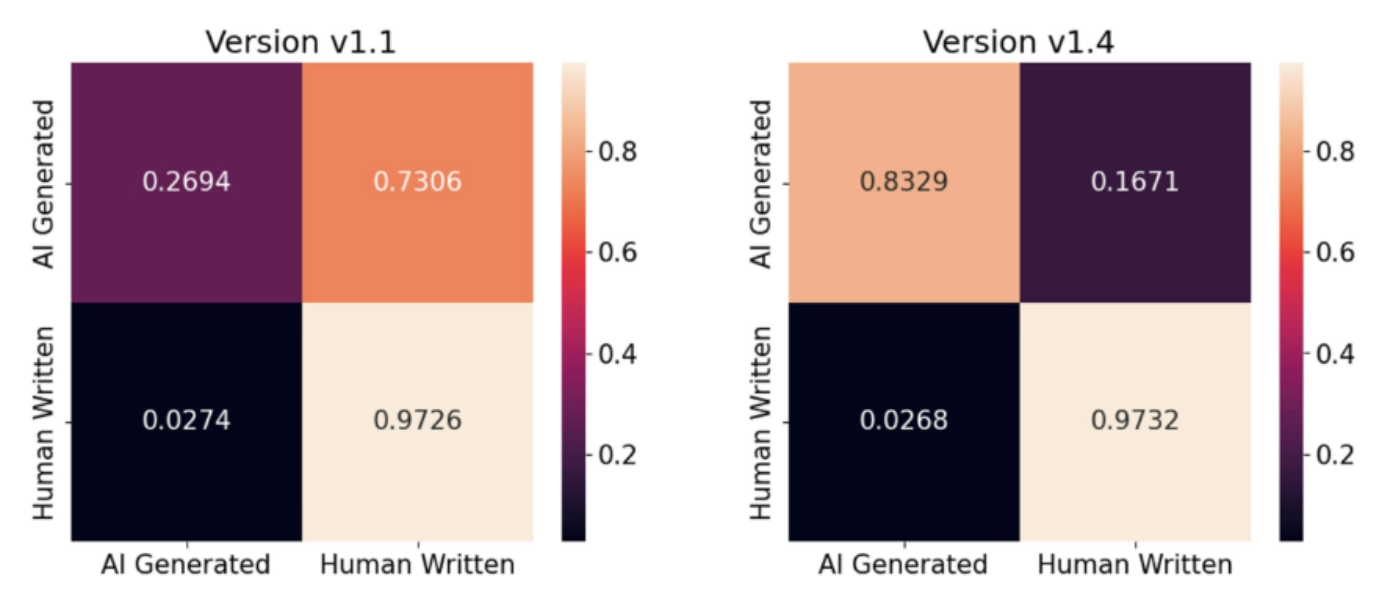

During the two-month experimentation period, some tools would have made tremendous advancements. Gillham included a graphic representation of the improvements within two months of version updates.

Screenshot from Originality.ai, July 2023

Additional issues with the study’s analysis that Gillham identified included a small sample size (54), incorrectly classified answers, and the inclusion of only two paid tools.

The data and testing materials should have been available on the URL included at the end of the study. A request for the data made over two weeks ago remains unanswered.

What AI Experts Had To Say About AI-Detection Tools

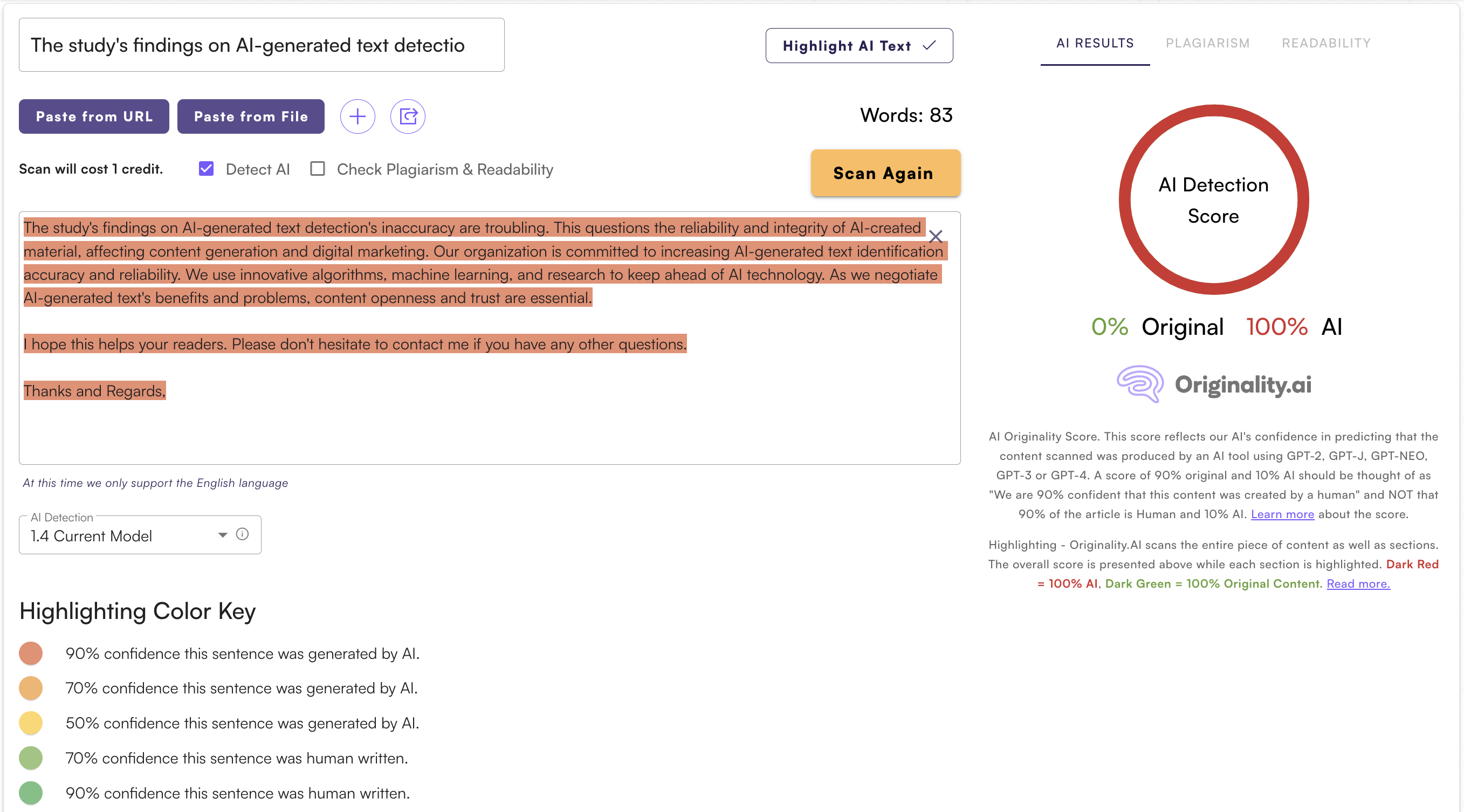

I queried the HARO community to find out what others had to say about their experience with AI detectors, leading to an unintentional study of my own.

At one point, I received five responses in two minutes that were duplicate answers from different sources, which seemed suspicious.

I decided to use Originality.ai on all of the HARO responses I received for this query. Based on my personal experience and non-scientific testing, this particular tool appeared tough to beat.

Screenshot from Originality.ai, July 2023

Originality.ai detected, with 100% confidence, that most of these responses were AI-generated.

The only HARO responses that came back as primarily human-generated were one-to-two-sentence introductions to potential sources I might be interested in interviewing.

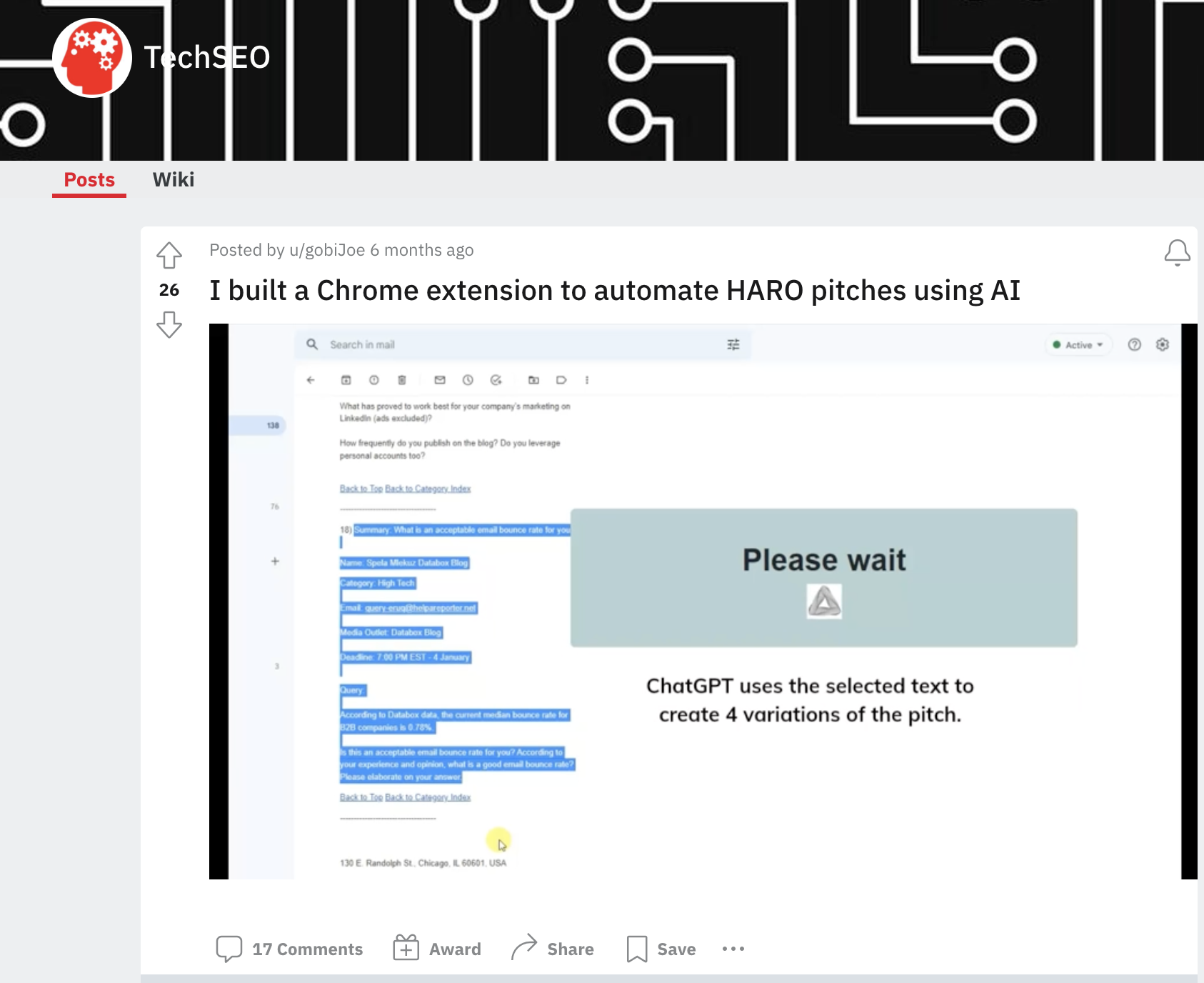

Those results weren’t a surprise because there are Chrome extensions for ChatGPT to write HARO responses.

Screenshot from Reddit, July 2023

What The FTC Had To Say About AI-Detection Tools

The Federal Trade Commission cautioned companies against overstating the capabilities of AI tools for detecting generated content, warning that inaccurate marketing claims could violate consumer protection laws.

Consumers were also advised to be skeptical of claims that AI detection tools can reliably identify all artificial content, as the technology has limitations.

The FTC said robust evaluation is needed to substantiate marketing claims about AI detection tools.

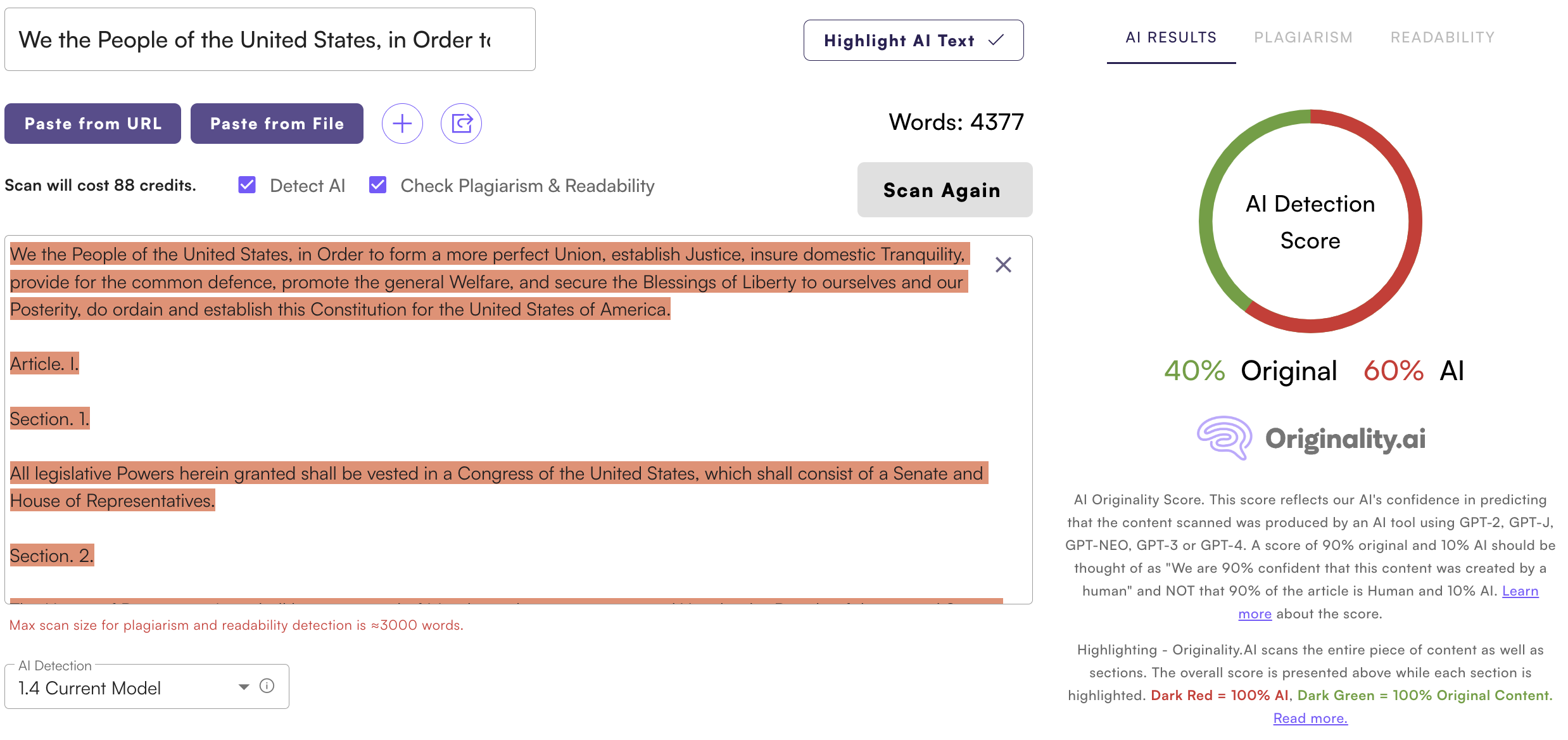

Was AI Used To Write The Constitution?

AI-detection tools made headlines when users discovered that the tools flagged the United States Constitution as possibly written by AI.

Screenshot from Originality.ai, July 2023

A post on Ars Technica explained why AI writing detection tools often falsely identify texts like the US Constitution as AI-generated.

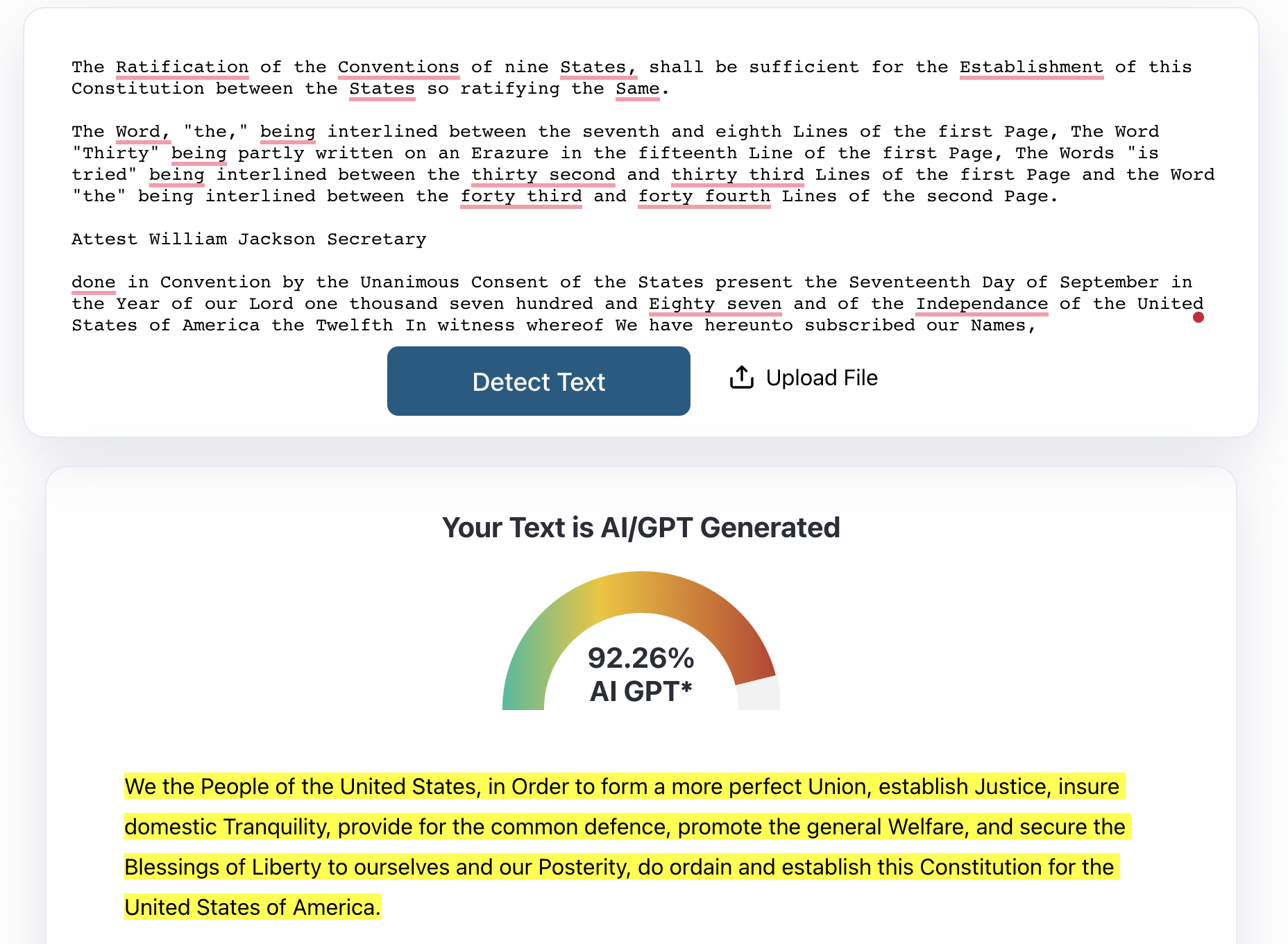

Screenshot from ZeroGPT, July 2023

Historical and formal language often gives low “perplexity” and “burstiness” scores, which detectors interpret as indicators of AI writing.

Screenshot from GPTZero, July 2023

Human writers can use common phrases and formal styles, resulting in similar scores.

This exercise further proved the FTC’s point that consumers should be skeptical of AI detector scores.
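To make the “perplexity” and “burstiness” scores mentioned above more concrete, here is a minimal sketch under stated assumptions. Perplexity is the exponential of the average negative log probability a language model assigns to each word, so familiar wording scores low. Burstiness is treated here as the spread (standard deviation) of per-sentence perplexity, which is an assumption for illustration; the exact formulas the commercial tools use are not given in the sources cited above. Real detectors score text with a large language model, while this sketch swaps in a toy word-frequency model (`UnigramModel`, a hypothetical name) so it can run on its own.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text):
    """Naive sentence splitter: break after ., ! or ? followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


class UnigramModel:
    """Toy stand-in for the language model a real detector would use."""

    def __init__(self, reference_text):
        self.counts = Counter(tokenize(reference_text))
        self.total = sum(self.counts.values())
        self.vocab = len(self.counts) + 1  # one extra slot for unseen words

    def prob(self, word):
        # Add-one smoothing so unseen words get a small nonzero probability.
        return (self.counts[word] + 1) / (self.total + self.vocab)


def perplexity(model, text):
    """exp(average negative log probability per word); lower = more predictable."""
    words = tokenize(text)
    if not words:
        return float("nan")
    avg_neg_log = -sum(math.log(model.prob(w)) for w in words) / len(words)
    return math.exp(avg_neg_log)


def burstiness(model, text):
    """Assumed definition: standard deviation of per-sentence perplexity."""
    scores = [perplexity(model, s) for s in split_sentences(text)]
    scores = [s for s in scores if not math.isnan(s)]
    return pstdev(scores) if len(scores) > 1 else 0.0


# Hypothetical reference text standing in for a model's training data.
reference = (
    "the people of the united states establish this constitution "
    "for the united states"
)
model = UnigramModel(reference)

familiar = "The people of the united states."
unusual = "Purple walruses juggle quantum marmalade."

print("familiar perplexity:", perplexity(model, familiar))
print("unusual perplexity: ", perplexity(model, unusual))
print("burstiness of both: ", burstiness(model, familiar + " " + unusual))
```

The familiar sentence scores a lower perplexity than the unusual one because every word in it appears in the reference text. That mirrors why widely reproduced formal documents can look “predictable” to a detector even though a human wrote them.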

Strengths And Limitations

The findings from various studies highlight the strengths and limitations of AI detection tools.

While AI detectors have shown some accuracy in detecting AI-generated text, they have also exhibited biases, usability issues, and vulnerabilities to evasion techniques.

But the studies themselves could be flawed, leaving everything up for speculation.

Improvements are needed to address biases, enhance robustness, and ensure accurate detection in different contexts.

Continued research and development are crucial to fostering trust in AI detectors and creating a more equitable and secure digital landscape.

Featured image: Ascannio/Shutterstock