With the resurgence of the Black Lives Matter movement on social media, many marketers are taking new notice of issues that BIPOC (an acronym that stands for Black, Indigenous, and People of Color) and allies have been talking about for years.

The most notable of these is how data – which we traditionally deem unbiased and “just the numbers” – is in fact heavily influenced by the biases of the engineers, marketers, developers, and data scientists who program, input, and manipulate that data.

While some may just now notice this phenomenon, it’s actually nothing new.

In this piece, we’ll talk about:

- What implicit bias is.

- How it affects marketing data and technology.

- Why it’s important to recognize it in our SEO and marketing.

- What marketers can do.

What Is Implicit Bias?

To understand how data and algorithms encode bias, it’s important to first understand how implicit bias works.

According to research from The Ohio State University:

“[I]mplicit bias refers to the attitudes or stereotypes that affect our understanding, actions, and decisions in an unconscious manner. These biases, which encompass both favorable and unfavorable assessments, are activated involuntarily and without an individual’s awareness or intentional control.”

To paraphrase, implicit bias is essentially the thoughts about people you didn’t know you had.

These biases can come from how we were raised, values that our families hold, individual experiences, and more.

And they’re not always negative stereotypes. Still, individuals are individuals, and stereotypes are broad, sweeping generalizations that don’t belong in machine learning or in supposedly unbiased statistics.

Think you’re immune to implicit bias?

Check out one of Harvard’s Implicit Association Tests (IATs) to see where your own implicit biases lie.

Remember that implicit bias doesn’t make you a bad person. It’s something the majority of people have.

It’s how we respond to it that matters!

How Does Implicit Bias Affect Algorithms & Marketing Data?

Algorithms and machine learning are only as good as the information we feed these models.

As engineers often say: “garbage in, garbage out.”

A few years ago at MozCon, Britney Muller demonstrated how she created a simple machine learning model to distinguish pictures of her snake, Pumpkin, from pictures of Moz founder Rand Fishkin.

The key to building her model was feeding it pictures of her snake that she had manually labeled “Pumpkin,” and doing the same with pictures labeled as Fishkin.

Eventually, the program was able to distinguish the reptile from the CEO.

From this, you can see where the manual aspect of machine learning comes into play – and where human biases (implicit or explicit) can affect the data we’re receiving.

Essentially, the human providing the inputs chooses how the algorithm learns.

If she had swapped the labels, tagging the snake photos as Fishkin and the Fishkin photos as Pumpkin, the “unbiased” algorithm would call Fishkin the snake and the snake a CEO.
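To make the “garbage in, garbage out” point concrete, here’s a minimal Python sketch. It is not Muller’s actual program: it assumes each photo has already been reduced to a tiny, made-up feature vector and uses a simple nearest-centroid classifier as a stand-in for a real image model. The only thing that changes between the two runs is which label the human attached to which photos.

```python
from statistics import mean

# Made-up feature vectors standing in for photos. Each tuple is a hypothetical
# (shape_score, color_score); real image models learn far richer features.
snake_photos = [(0.9, 0.8), (0.85, 0.75), (0.95, 0.7)]
fishkin_photos = [(0.2, 0.3), (0.25, 0.35), (0.15, 0.25)]


def train(labeled_photos):
    """Nearest-centroid "training": average the feature vectors for each label."""
    by_label = {}
    for features, label in labeled_photos:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(dim) for dim in zip(*vectors))
        for label, vectors in by_label.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(
        centroids,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(features, centroids[label])),
    )


# Honest labels: the human tags every photo correctly.
honest = [(p, "Pumpkin") for p in snake_photos] + [(p, "Fishkin") for p in fishkin_photos]
# Swapped labels: identical photos, but the human tags them the other way around.
swapped = [(p, "Fishkin") for p in snake_photos] + [(p, "Pumpkin") for p in fishkin_photos]

new_snake_photo = (0.88, 0.78)
print(predict(train(honest), new_snake_photo))   # Pumpkin
print(predict(train(swapped), new_snake_photo))  # Fishkin
```

Nothing about the math changed between the two runs; only the human-supplied labels did, and the model faithfully learned whichever story it was given.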

Stock Photo Sites

A study called Being Black in Corporate America found that “only 8 percent of people employed in white-collar professions are Black, and the proportion falls sharply at higher rungs of the corporate ladder, especially when jumping from middle management to the executive level.”

This is despite the fact that “Black professionals are more likely than their white counterparts to be ambitious in their careers and to aspire to a top job.”

As marketers, it’s important to ask ourselves: How does the data we tag and publish on our websites affect what’s happening in real life?

It might be a case of the chicken and the egg – should representation follow actual workplace statistics or can workplace statistics be affected by representation even in “innocuous” places like stock photo websites?

Anecdotally, at least, the latter appears to be true.

Friends in HR have confirmed to me time and again that job candidates are more likely to accept a position when they see people who look like them at the company, in leadership positions, and on the interview team.

Representation has always been an issue on stock photography websites, so much so that specialty photo sites featuring exclusively BIPOC, people with disabilities, and people in larger bodies have recently cropped up in response.

Even now (late June 2020), a search for “woman working” on a popular stock photo site returns over 100 images on the first page of results. Of those 100+ photos…

- 10 feature BIPOC-appearing individuals in the background or in a group.

- 11 focus solely on BIPOC as the main person.

- 4 images show older adults in a professional workplace.

- 0 images show people in bigger bodies.

- 0 images show individuals with disabilities.

This data is just based on a quick search and a rough count, but it shows that the representation is lacking.

As marketers, it’s key that we use and demand imagery that is diverse and inclusive.

If your website photography is all white people high-fiving, it’s time for a change.

Google Data

In her book “Algorithms of Oppression,” Safiya Noble, Ph.D., discusses how the people we trust to build supposedly unbiased algorithms and machine learning models for Google and other search engines are not unbiased themselves.

In fact, it made news a few years ago that a Google employee went on an anti-diversity rant (which received hundreds of upvotes from other employees) and was subsequently fired only after media attention.

We have to ask ourselves, “How many others working on algorithms share similar biased and anti-diversity feelings?”

But how does this lack of awareness around these known and unknown biases actually play out in search?

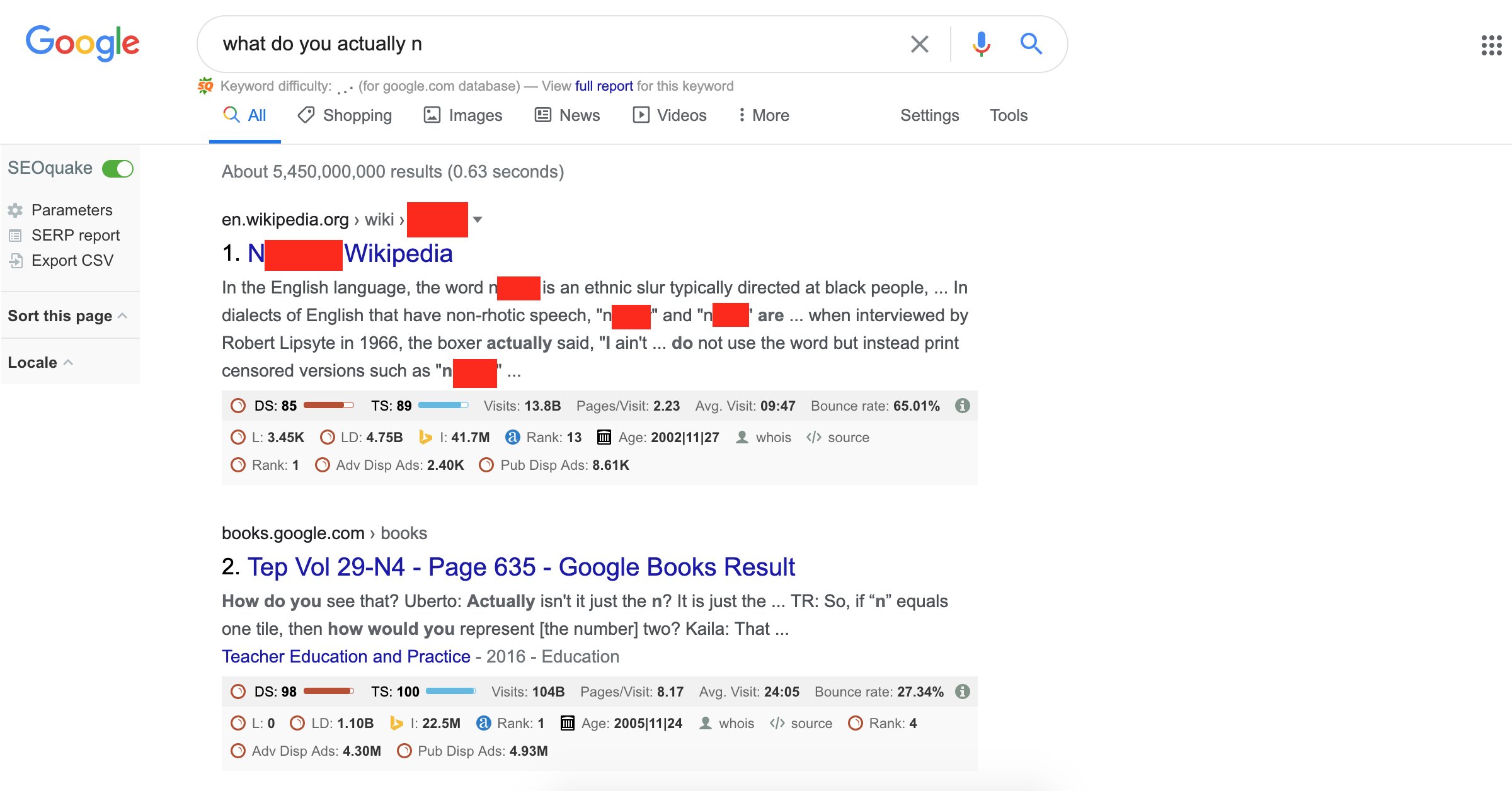

My spouse loves to bake and recently we were looking for more details about a recipe we didn’t have all the ingredients for.

Incredulous that we needed to make another trip to the grocery store, I went to Google to ask, “What do you actually need for lemon bars?”

In my haste to prove myself right, I hit the enter button too early and only got through “What do you actually n.”

To my horror and absolute dismay, this is what my top result was:

As a marketer, my immediate thought was, “What in my search history would indicate to Google that this was the correct result for me?”

I felt ashamed and guilty and, honestly, like a terrible ally.

And then I thought about a Black kid searching for a recipe or for a school project and coming upon this, and how absolutely heart-wrenching that could be.

When I called it out on Twitter, I was surprised at the number of people defending the result.

Again, the same cry, “It’s just the data.”

And, “it makes sense to get this result given the query.”

As marketers, we don’t spend enough time analyzing the input data and the people who trained the machines.

We assume data is impartial and equitable and that Google is a meritocracy of results, when in fact our jobs depend on making sure it isn’t.

We work to sway search results in our companies’ and clients’ directions – for their own profit and economic gain.

Why does it make sense for Google to return that result? Why should the letter “n” by itself warrant that result in any context?

(Why doesn’t Google show information about nitrogen – the gas that is represented by N on the periodic table?)

The reason is the data: everything Google has about that letter told it this result was the most relevant to searchers.

As Jonathan Wilson puts it:

“It also makes sense due to semantic search. Over time, Google has seen that (white) people say, like in this article, “the n-word” [instead of the full expletive]. It’s the same as using ‘pop’ interchangeably with ‘soda.’ It’s more a focus of the vast racism in America than an algorithm in this case, although someone [at Google] could manually override the result.”

Facebook Ad Algorithms

In the spring of 2019, the U.S. Department of Housing and Urban Development sued Facebook over the way it allowed advertisers to target ads based on race, gender, and religion (these are all protected classes under U.S. law).

Facebook said it had removed the option for advertisers to discriminate manually, but research showed that its algorithms picked up where manual discrimination had left off.

One study found that job ads for janitors and taxi drivers were shown to a higher fraction of minorities, and job ads for nurses and secretaries were shown to a higher fraction of women.

According to Karen Hao of the MIT Technology Review:

“Bias occurs during data collection when the training data reflects existing prejudices. Facebook’s advertising tool bases its optimization decisions on the historical preferences that people have demonstrated. If more minorities engaged with ads for rentals in the past, the machine-learning model will identify that pattern and reapply it in perpetuity. Once again, it will … plod down the road of employment and housing discrimination—without being explicitly told to do so.”
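Hao’s point about a model reapplying historical patterns “in perpetuity” can be illustrated with a toy simulation. This is a hypothetical sketch, not Facebook’s delivery system: it assumes a greedy optimizer that sends each day’s impressions to whichever audience has the best historical click rate, and the two groups below are given identical real interest in the ad. Because the model only collects new data on the audience it already favors, the small gap in the made-up starting data never gets corrected.

```python
# Hypothetical toy ad optimizer. It is NOT Facebook's system; it only shows how
# optimizing on historical engagement can lock in a pattern already in the data.

TRUE_CLICK_RATE = {"group_a": 0.05, "group_b": 0.05}  # identical real interest

# Made-up history: group_b was shown the ad less often and clicked slightly less.
history = {
    "group_a": {"impressions": 1000, "clicks": 50},
    "group_b": {"impressions": 100, "clicks": 4},
}


def observed_rate(group):
    """Click-through rate the optimizer believes, based only on past data."""
    h = history[group]
    return h["clicks"] / h["impressions"]


def run_day(budget=1000):
    """Greedy delivery: spend the whole budget on the group with the best observed rate."""
    best = max(history, key=observed_rate)
    history[best]["impressions"] += budget
    # Expected clicks instead of random draws, so the result is deterministic.
    history[best]["clicks"] += budget * TRUE_CLICK_RATE[best]
    return best


shown_to = [run_day() for _ in range(30)]
print(set(shown_to))                      # {'group_a'}
print(history["group_b"]["impressions"])  # 100: group_b's estimate is never revisited
```

A real system adds randomness and some exploration, but the underlying risk is the same: if a model only learns from the audiences it already favors, a historical gap can start to look like a permanent preference.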

Facial Recognition Models

A recent New York Times article demonstrates how facial recognition also falls short based on the data that is fed into the model.

Robert Julian-Borchak Williams was wrongfully arrested due to this lack of diversity in the way these models are trained (think back to Pumpkin the snake vs. Fishkin):

“In 2019, algorithms from both companies were included in a federal study of over 100 facial recognition systems that found they were biased, falsely identifying African-American and Asian faces 10 times to 100 times more than Caucasian faces.”

Studies show that “while the technology works relatively well on white men, the results are less accurate for other demographics, in part because of a lack of diversity in the images used to develop the underlying databases.”

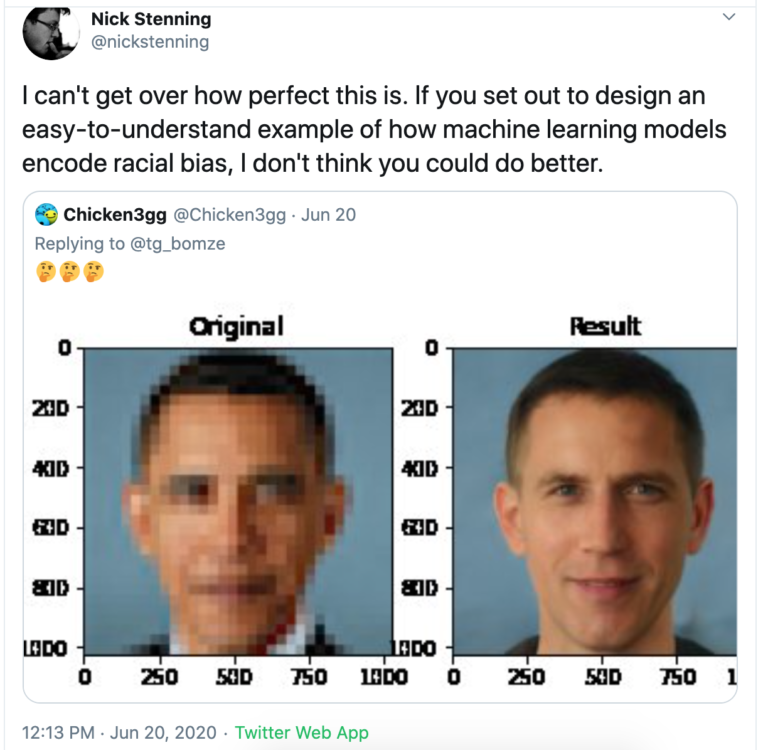

This was illustrated further by a recent “for-fun” Twitter post about feeding a low-resolution image into a program whose model generates a high-resolution, realistic picture of a person from the pixelated original.

When multiple people submitted a very well-known picture of President Barack Obama, the results were consistently white men (even though almost everyone could recognize who the pixelated image actually showed).

This is another failure of input data in machine learning models and a lack of examination of our implicit biases in engineering, programming, coding, executive goal setting (if there’s no goal to improve recognition of Black faces, it’s never built), prioritization, quality assurance, and marketing.

Uber & Lyft Algorithms

Yet another study shows that even the algorithms we assume can’t be affected by bias definitely are.

The data shows that ride-hailing apps like Uber and Lyft disproportionately charge more per mile for rides to and from destinations with “a higher percentage of non-white residents, low-income residents or high education residents.”

The study authors explained that:

“Unlike traditional taxi services, fare prices for ride-hailing services are dynamic, calculated using both the length of the requested trip as well as the demand for ride-hailing services in the area. Uber determines demand for rides using machine learning models, using forecasting based on prior demand to determine which areas drivers will be needed most at a given time. While the use of machine learning to forecast demand may improve ride-hailing applications’ ability to provide services to their riders, machine learning methods have been known to adopt policies that display demographic disparity in online recruitment, online advertisements, and recidivism prediction.”
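The pricing mechanism the authors describe, a per-mile fare adjusted by demand forecast from prior requests, can be sketched in a few lines. The base rate, the multiplier rule, the 3x cap, and the neighborhood data are all hypothetical assumptions, not Uber’s or Lyft’s actual formula. The point is only to show how a forecast built purely from past demand passes whatever shaped that demand straight into the price.

```python
from statistics import mean

BASE_RATE_PER_MILE = 1.50  # hypothetical base fare per mile


def forecast_demand(past_requests):
    """Naive forecast: expected requests = average of the same hour on past days."""
    return mean(past_requests)


def fare(miles, past_requests, drivers_available):
    """Per-mile fare scaled by forecast demand relative to driver supply (1x to 3x)."""
    ratio = forecast_demand(past_requests) / drivers_available
    multiplier = min(max(1.0, ratio), 3.0)
    return round(miles * BASE_RATE_PER_MILE * multiplier, 2)


# Made-up past request counts for the same hour in two neighborhoods,
# with the same trip length and the same number of available drivers.
neighborhood_x = [40, 42, 38]
neighborhood_y = [18, 20, 22]

print(fare(5, neighborhood_x, drivers_available=20))  # 15.0
print(fare(5, neighborhood_y, drivers_available=20))  # 7.5
```

Neither neighborhood is described by demographics anywhere in the code, yet if past demand was shaped by who lives where, the forecast, and therefore the price, inherits that pattern.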

This isn’t the first time, either.

In 2016, data showed that people with Black-sounding names were more likely to have drivers cancel their rides, Black passengers waited longer than white riders, and women were driven unnecessarily farther than men.

Not only does this affect people’s everyday lives, but the compounding effect of data means that these biases keep building on each other, confirming themselves again and again until they solidify in our subconscious and keep affirming (or even creating more) implicit bias.

Another outcome is a new response in which humans ‘pass the blame,’ since the perception is that we aren’t responsible; it’s the data.

This affects how we run our businesses, live our lives, market our products and services, and more. It’s in the fabric of our society – and algorithms are only intensifying that effect.

Marketing Conferences & Panels

There’s no easier way to see the ramifications of implicit bias in our everyday lives as marketers than to look at industry leaders, go-to conference speakers, lists of experts, and more.

While we’re all now hyper-aware of how white (and often how male) most panels and lists of experts are, there still seems to be little pressure on organizations to diversify their lineups.

And when there is pressure, some event organizers always seem to believe there are no easy-to-find BIPOC speakers, experts, or panelists.

This is obviously not true, and it’s another example of our own confirmation and implicit biases.

A CNN article from 2015 talks about quick ways to determine if you are displaying hidden bias in everyday life.

The first way to introspect on this is to look at your inner circle – does everyone look the same?

The same can be said for conferences and event organizers.

If your pool of speaker and expert candidates always looks the same, it’s a you problem – not someone else’s.

If your conference has the same single Black speaker every year, it’s critical to do the work to find non-white experts in each area and to give younger BIPOC professionals opportunities to build their craft.

They exist.

They just might not exist in your circle.

Why Is This Important for SEO & Marketing?

Knowing about the implicit bias of data and algorithms is critical – now more than ever – because more people are using technology like Google search and social media as their main information-gathering tools.

We no longer go to libraries and other free sources of information to be guided by a professional with advanced degrees in information science.

(Though even those professionals had their own biases, which served as gatekeeping mechanisms for information, which is why so many Americans are only now learning about Juneteenth in 2020.)

Instead, most people rely on the internet for the same kind of research.

Not only is the internet a vast and unregulated source, but because search engine optimization and paid advertising shape what ranks, many searchers and web users struggle to tell factual truth from the opinions of amateurs.

And with the rise of news on social media, anyone can be a “news source,” and users often aren’t taught to evaluate these sources critically in a social context.

In “Algorithms of Oppression,” Safiya Noble, Ph.D., also points out that:

“Google biases search to its own economic interests for its profitability and to bolster its market dominance at any expense.”

This means that these tech giants are not functioning in the best interests of the people who use these platforms but in their own business interests.

If Google and Facebook (and other information tech giants) are going to make money by continually corroborating our confirmation biases (a.k.a., telling us what we want to hear and keeping us in our own echo chambers of information), then we’re stuck in a cycle, at the whim of both algorithmic bias and our own and others’ personal biases.

What Can Marketers Do?

Search engines are not just programmed by engineers back at Google or Bing – they rely on the pages and data that website owners, SEOs, and marketers are putting into websites.

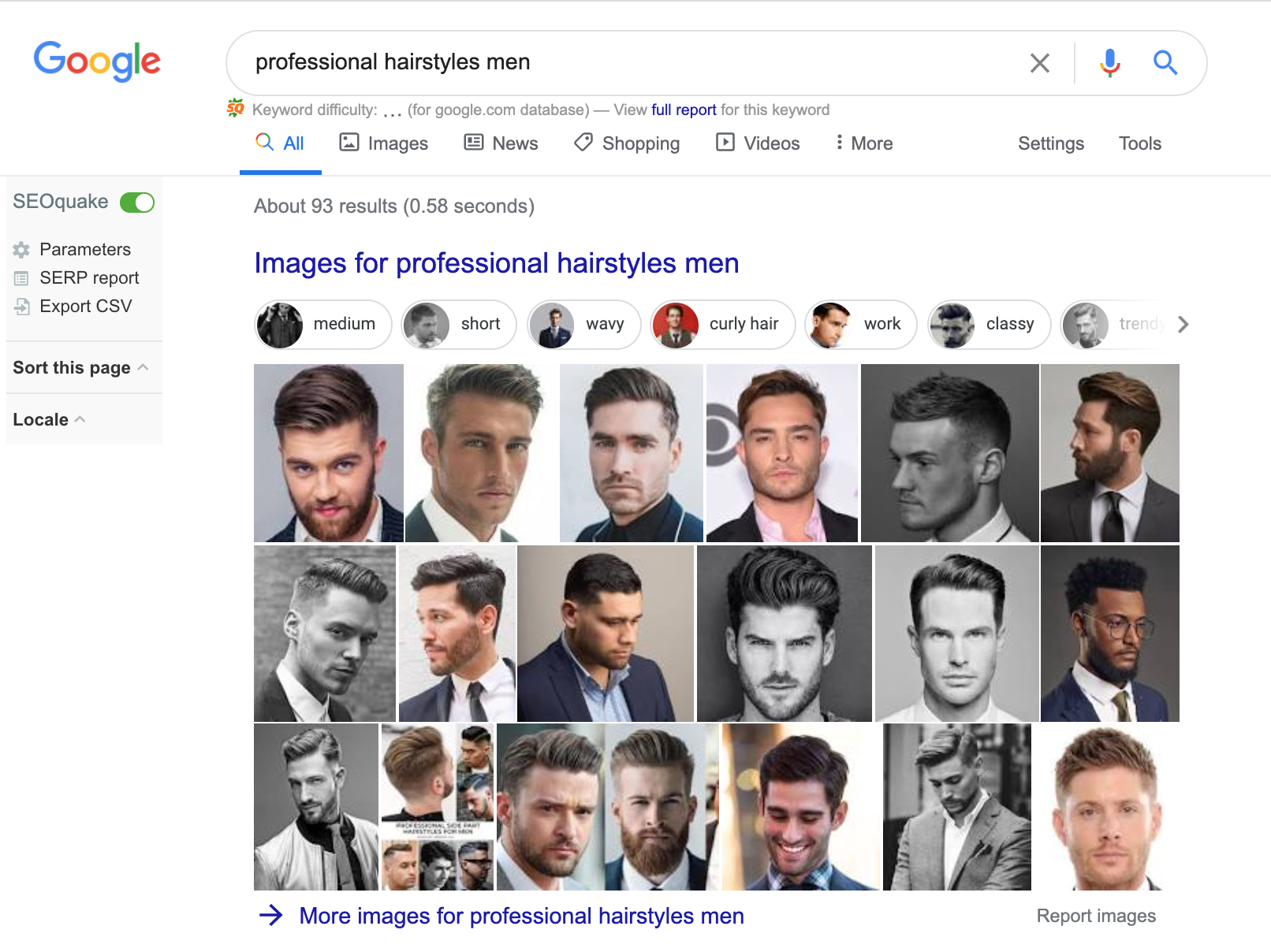

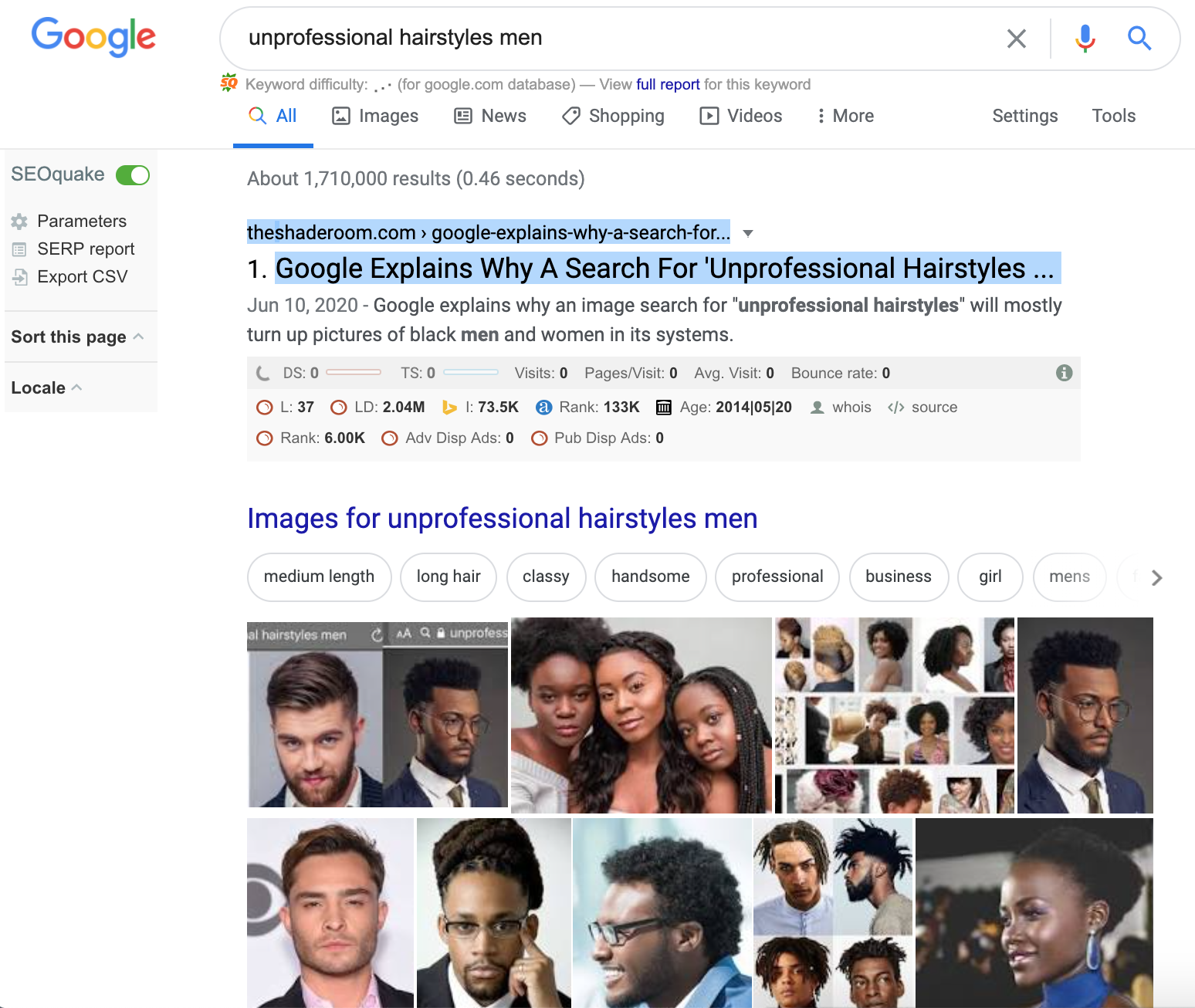

A popular example is the pair of searches “professional hairstyles men” and “unprofessional hairstyles men.”

Google claims the onus isn’t on its model but on the website content and alt text that site developers and SEOs use for these images.

Clicking the first result in the second image leads to an article calling out Google for this exact problem, yet that article is itself feeding the same images back to Google for “unprofessional hairstyles.”

As marketers, we need to recognize this is part of the problem!

Google is notorious for not taking responsibility for bias in search, so how can we step up as marketers?

We can control alt text, file names, the copy surrounding images on our sites, and more.
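A practical first step is knowing which images on your pages lack alt text or carry meaningless file names. Below is a minimal sketch using Python’s built-in `html.parser`; the “generic filename” pattern is an assumption to tune for your own site, and the sample markup is made up. It only surfaces gaps; writing accurate, respectful descriptions of the people in your images is still a human job.

```python
import re
from html.parser import HTMLParser

# Hypothetical heuristic for camera-default or stock-style filenames,
# e.g. IMG_4821.jpg or stock-0042.jpg. Tune this for your own site.
GENERIC_NAME = re.compile(r"^(img|image|dsc|photo|stock)[-_]?\d+", re.IGNORECASE)


class ImageAuditor(HTMLParser):
    """Collects <img> tags with missing/empty alt text or generic filenames."""

    def __init__(self):
        super().__init__()
        self.issues = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attrs = dict(attrs)
        src = attrs.get("src") or ""
        filename = src.rsplit("/", 1)[-1]
        # An empty alt is valid for purely decorative images, so flagged
        # items still need human review rather than automatic "fixes".
        if not (attrs.get("alt") or "").strip():
            self.issues.append((src, "missing or empty alt text"))
        if GENERIC_NAME.match(filename):
            self.issues.append((src, "generic filename"))


# Made-up markup for illustration.
page = """
<img src="/team/IMG_4821.jpg">
<img src="/team/jamie-lee-leading-workshop.jpg" alt="Jamie Lee leading a strategy workshop">
<img src="/blog/stock-0042.jpg" alt="">
"""

auditor = ImageAuditor()
auditor.feed(page)
auditor.close()
for src, problem in auditor.issues:
    print(f"{src}: {problem}")
```

Run against a real page’s HTML, the output gives you a to-do list for the alt text and file names you control.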

Protect Users’ Data

Modern digital marketing is based on people’s data.

Algorithms and machine learning models capitalize on this data to continually affirm the biases they’re built on.

If there are reasonable ways to protect our target audiences’ data, we should be working on them.

A roundtable by the Brookings Institution found that people believe “operators of algorithms must be more transparent in their handling of sensitive information” and that, where there are ways to use that data for positive outcomes, machine learning models should take them into account (where legal and applicable):

“There was also discussion that the use of sensitive attributes as part of an algorithm could be a strategy for detecting and possibly curing intended and unintentional biases.”
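One simple version of the roundtable’s idea is an outcome audit: use a sensitive attribute (where it’s legal to collect) to compare how often each group receives something, such as a job ad impression, and flag large gaps. This sketch borrows the “four-fifths” ratio from U.S. employee-selection guidelines as its threshold; that choice, the record format, and the data are assumptions for illustration, and a flagged gap is a prompt to investigate rather than proof of discrimination.

```python
from collections import defaultdict

# Made-up audit log: (audience group, whether that person was shown the ad).
records = [
    ("group_a", True), ("group_a", True), ("group_a", False), ("group_a", True),
    ("group_b", True), ("group_b", False), ("group_b", False), ("group_b", False),
]


def selection_rates(rows):
    """Share of each group that received the outcome (here, an ad impression)."""
    shown = defaultdict(int)
    total = defaultdict(int)
    for group, was_shown in rows:
        total[group] += 1
        shown[group] += int(was_shown)
    return {group: shown[group] / total[group] for group in total}


def flag_disparities(rows, threshold=0.8):
    """Flag groups whose rate falls below `threshold` times the highest group's rate."""
    rates = selection_rates(rows)
    best = max(rates.values())
    if best == 0:
        return {}
    return {g: rate / best for g, rate in rates.items() if rate / best < threshold}


print(selection_rates(records))   # {'group_a': 0.75, 'group_b': 0.25}
print(flag_disparities(records))  # group_b is flagged at about a third of group_a's rate
```

Note that the audit needs the sensitive attribute to work at all, which is exactly the tension the roundtable describes: the same data that can enable bias is also what lets you measure it.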

Having these discussions and working toward a solution for people’s private and protected data is the first step.

Keeping personally identifiable information out of an algorithm or machine learning process can also help prevent that data from being used in biased ways.

Diversify Your Marketing Staff & Leadership

Marketing as an industry is still very white and male while our target audiences are very much diverse in innumerable aspects.

It’s a mistake to think that a single type of person’s perspective can cover and understand the experiences of all.

By diversifying our marketing staff and especially our leadership, we can see where our own implicit biases come into play in how we market our products and services.

Ways to do this include:

- Remove names from resumes.

- Conduct phone interviews before face-to-face or video interviews to reduce bias.

- Have more diversity on your interviewing team.

- Provide mentorship opportunities to new hires.

- Make sure you’re looking into bias in annual reviews.

- Promote salary transparency across your organization.

- Support an intern class.

- Post jobs in more diverse locations.

Listen Without Defensiveness

This one is hard, especially right now.

But it’s key to understanding how our marketing affects others beyond ourselves.

Listen when your BIPOC colleagues tell you that something in your marketing makes them uncomfortable or isn’t sensitive to the experiences of others.

I was at a conference once where a woman stood up to share her experience of working on an all-white marketing team for a campaign.

The whole time, she felt uncomfortable with the lack of diversity in the video the team worked on: the entire cast was white.

She mentioned it casually here and there, but everyone on the team ignored her.

When the video was finally presented to the CEO, an Indian man, his first comment was, “Why is everyone in the video white?”

It’s critical that we listen to the experiences of others openly and without defensiveness about our own implicit biases and mistakes – it makes us better people and better at our jobs, too.

Lift Others Above Yourself When You Can

This is probably the hardest one.

Often we feel like we’ve worked hard to get where we are, and we want to receive the benefits we’ve earned for everything we’ve put in.

However, there comes a point where our privilege as white marketers, combined with the cumulative effect of popularity and a following, means opportunities present themselves to us that others may not have.

An example of this would be getting to a point where you no longer have to pitch yourself as a speaker at marketing conferences and events, but are asked or can just ask to be a speaker.

Meanwhile, others still have to navigate the pitch process and are at the whim of event organizers.

Lifting others up in this situation would include:

- Ensuring that events plan to have a diverse and equitable speaker lineup before agreeing to speak.

- Making certain that BIPOC and women speakers are being paid for their appearances.

- Even offering your spot to an underrepresented expert in the same space.

It’s not easy to give up something that it feels like you’ve worked hard for, but doing so shows that we understand the extra burden that BIPOC experts in the same space have to carry to achieve the same results we’ve reached.

Calling out and limiting your own implicit biases is hard.

And it’s even more difficult when algorithms, data modeling, machine learning, and tech are stacked against us, reinforcing our biases.

But in the long run, it’s a way that marketers can work together to make our space a more equitable, inclusive, and representative arena for everyone.

Special Thanks

Special thanks and SEO/marketing hat tip to Jonathan Wilson, Krystal Taing, Rohan Jha, and Jamar Ramos for editing support, sending links to extra news sources/data, and direction on this piece.


Image Credits

All screenshots taken by author, June 2020