Three years ago, in the post "Biometric Parameters as a Ranking Signal in Google Search Results?", I wrote about a Google patent that used a searcher's reactions to search results to rank those results.

Since then, I've been watching for Google patent filings that use a smartphone camera to read the facial expressions of the device's user in order to better understand that person's emotions.

Google has now filed a patent application describing such a process. I wonder whether most people would feel comfortable using it.

The background section of this new patent application is one of the shortest I have seen. It tells us:

“Some computing devices (e.g., mobile phones, tablet computers, etc.) provide graphical keyboards, handwriting recognition systems, speech-to-text systems, and other types of user interfaces (“UIs”) for composing electronic documents and messages. Such user interfaces may provide ways for a user to input text as well as some other limited forms of media content (e.g., emotion icons or so-called “emoticons”, graphical images, voice input, and other types of media content) interspersed within the text of the documents or messages.”

The patent application is:

Graphical Image Retrieval Based On Emotional State of a User of a Computing Device

Inventors: Matthias Grundmann, Karthik Raveendran, and Daniel Castro Chin

US Patent Application: 20190228031

Published: July 25, 2019

Filed: January 28, 2019

Abstract

A computing device is described that includes a camera configured to capture an image of a user of the computing device, a memory configured to store the image of the user, at least one processor, and at least one module. The at least one module is operable by the at least one processor to obtain, from the memory, an indication of the image of the user of the computing device, determine, based on the image, a first emotion classification tag, and identify, based on the first emotion classification tag, at least one graphical image from a database of pre-classified images that has an emotional classification that is associated with the first emotion classification tag. The at least one module is further operable by at least one processor to output, for display, at least one graphical image.

Image Retrieval Based Upon a Person’s Emotional State

I recently noticed an Apple commercial showing someone lounging in a tropical setting when their iPhone rings. They ease themselves up to look at the phone, which recognizes their face and displays notifications about the call and earlier notifications.

The commercial shows off Face ID, a feature that now comes with iPhones and iPad Pros. It sounds like the Google Pixel 4 may come with a similar face-unlock feature as well.

Face recognition of this kind isn't mentioned in this patent application about retrieving images based upon a user's emotional state.

But it does sound like something that might make people more comfortable with their smartphone camera taking their picture and then doing something with that picture.

With Face ID, the purpose is to make the phone more secure.

With the biometric approach I wrote about three years ago, the purpose could be to help rank search results.

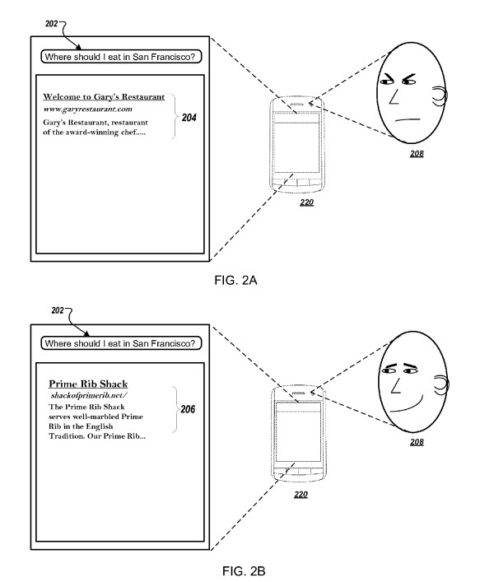

The biometric patent told us that the rankings of results could change based upon searchers' reactions to the results they were seeing.

This patent application about returning graphical images based upon emotional states seems like a good way to test how well emotions can be read from people's faces, which could help make such a biometric approach possible.

A snippet from the biometric patent that I've quoted before tells us about specific signals that may trigger boosts or reductions in the rankings of search results:

“The actions include providing a search result to a user; receiving one or more biometric parameters of the user and a satisfaction value; and training a ranking model using the biometric parameters and the satisfaction value. Determining that one or more biometric parameters indicate likely negative engagement by the user with the first search result comprises detecting:

- Increased body temperature

- Pupil dilation

- Eye twitching

- Facial flushing

- Decreased blink rate

- Increased heart rate.”
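Neither patent publishes code, but a minimal Python sketch can show what checking for those listed signals might look like. It assumes we already have a baseline reading and a reading taken while the searcher views a result. The `BiometricReading` fields, every threshold, the `min_signals` rule, and the helper names are hypothetical; the patent does not say how each signal is measured or weighted.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BiometricReading:
    """One hypothetical snapshot of the six signals named in the patent snippet."""
    body_temp_c: float      # body temperature, degrees Celsius
    pupil_mm: float         # pupil diameter, millimetres
    eye_twitches: int       # twitches counted during the observation window
    face_redness: float     # 0.0 to 1.0, assumed output of some skin-tone estimate
    blinks_per_min: float   # blink rate
    heart_rate_bpm: float   # heart rate, beats per minute


def negative_signals(baseline: BiometricReading, now: BiometricReading) -> list[str]:
    """List the signals that moved in the direction the patent ties to negative engagement.

    Every threshold below is a placeholder, not a value from the patent.
    """
    found = []
    if now.body_temp_c - baseline.body_temp_c > 0.3:
        found.append("increased body temperature")
    if now.pupil_mm - baseline.pupil_mm > 0.5:
        found.append("pupil dilation")
    if now.eye_twitches > baseline.eye_twitches:
        found.append("eye twitching")
    if now.face_redness - baseline.face_redness > 0.2:
        found.append("facial flushing")
    if now.blinks_per_min < 0.8 * baseline.blinks_per_min:
        found.append("decreased blink rate")
    if now.heart_rate_bpm - baseline.heart_rate_bpm > 10:
        found.append("increased heart rate")
    return found


def likely_negative_engagement(baseline: BiometricReading, now: BiometricReading,
                               min_signals: int = 2) -> bool:
    """Hypothetical rule: flag negative engagement when enough signals co-occur."""
    return len(negative_signals(baseline, now)) >= min_signals


def training_example(baseline: BiometricReading, now: BiometricReading,
                     satisfaction: float) -> tuple[list[float], float]:
    """Pair biometric changes with a satisfaction value as one row of training data."""
    features = [
        now.body_temp_c - baseline.body_temp_c,
        now.pupil_mm - baseline.pupil_mm,
        float(now.eye_twitches - baseline.eye_twitches),
        now.face_redness - baseline.face_redness,
        now.blinks_per_min - baseline.blinks_per_min,
        now.heart_rate_bpm - baseline.heart_rate_bpm,
    ]
    return features, satisfaction


baseline = BiometricReading(36.8, 3.0, 0, 0.1, 17.0, 70.0)
reaction = BiometricReading(37.4, 4.0, 2, 0.4, 10.0, 88.0)
print(negative_signals(baseline, reaction))
print(likely_negative_engagement(baseline, reaction))  # True
```

In a fuller system, rows like the ones `training_example` builds would be used to train a ranking model, which is the training step the patent describes. That model is left out of this sketch.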

This new patent application provides more details on how a device might understand the emotions of a smartphone user from camera images:

“The computing device may analyze facial features and other characteristics of the photo that may change depending on a person’s emotional state to determine one or more emotion classification tags. Examples of emotion classification tags include, for instance, eye shape, mouth opening size, nostril shape, eyebrow position, and other facial features and attributes that might change based on human emotion. The computing device may then identify one or more graphical images with an emotional classification (also referred to herein as “a human emotion”) that is associated with the one or more emotion classification tags. Examples of emotional classifications include anger, fear, anxiety, happiness, sadness, and other emotional states. Using an emotional classification for an image, the computing device can find a graphical image with emotional content similar to the image that was captured by the camera.”

In addition to describing the range of emotions that could be classified, this patent application provides more details about the classification itself, as if the inventors have been working out how best to capture such information:

“For example, the computing device may determine that a captured image includes certain facial elements that define first-order emotion classification tags, such as wide open eyes and flared nostrils, and may match the first-order emotion classification tags with a second order emotional classification such as shock or fear. Based on the second-order emotional classification, the computing device may identify one or more graphical images that may similarly depict the emotion likely being expressed by the user in the captured image. For example, the computing device may retrieve a graphical image of a famous actor or actress that has been classified as having a shocked or fearful facial expression.”
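The two-stage classification in that passage can be sketched as a small lookup in Python. This is my own illustration under stated assumptions, not the method in the application: the tag names, the rules linking tags to emotions, the 50% overlap cutoff, and the tiny image database are all hypothetical stand-ins for whatever classifiers and image index Google might actually use.

```python
from __future__ import annotations

# Hypothetical rules linking first-order tags (facial elements detected in a
# photo) to second-order emotional classifications. Only the "wide open eyes
# and flared nostrils -> shock or fear" pairing comes from the application.
EMOTION_RULES: dict[str, set[str]] = {
    "shock": {"wide_open_eyes", "flared_nostrils", "open_mouth"},
    "fear": {"wide_open_eyes", "flared_nostrils", "raised_eyebrows"},
    "happiness": {"smiling_mouth", "narrowed_eyes"},
    "sadness": {"downturned_mouth", "lowered_eyebrows"},
}

# A stand-in for the "database of pre-classified images": (file, emotion) pairs.
IMAGE_DB: list[tuple[str, str]] = [
    ("actor_gasp.gif", "shock"),
    ("actress_scream.gif", "fear"),
    ("dancing_cat.gif", "happiness"),
    ("rainy_window.jpg", "sadness"),
]


def classify(tags: set[str], min_overlap: float = 0.5) -> list[str]:
    """Return emotions whose rule shares at least min_overlap of its tags with the photo.

    Best overlap comes first; ties are broken alphabetically.
    """
    scored = []
    for emotion, rule_tags in EMOTION_RULES.items():
        overlap = len(tags & rule_tags) / len(rule_tags)
        if overlap >= min_overlap:
            scored.append((overlap, emotion))
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [emotion for _, emotion in scored]


def retrieve_images(tags: set[str], limit: int = 3) -> list[str]:
    """Look up pre-classified images for each matched emotion, in match order."""
    results = []
    for emotion in classify(tags):
        results.extend(name for name, label in IMAGE_DB if label == emotion)
    return results[:limit]


photo_tags = {"wide_open_eyes", "flared_nostrils"}
print(classify(photo_tags))         # ['fear', 'shock']
print(retrieve_images(photo_tags))  # ['actress_scream.gif', 'actor_gasp.gif']
```

With only wide open eyes and flared nostrils detected, the sketch returns both fear and shock as candidate emotions, much like the example in the application, and retrieves an image for each.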

Because this process returns graphical images in response to photos of a smartphone's user, it would let people make selections from the images returned. Those selections could be a way to have users help train the system to identify the emotions in the photos they submit.

Takeaways

When I first wrote about the biometric ranking patent three years ago, I didn't expect anyone to be comfortable with Google possibly using a person's reactions to search results, as captured by a smartphone camera, to influence the rankings of those results.

I also questioned Google's ability to recognize the emotions behind a searcher's reactions to search results. I still question whether searchers would be that comfortable, and whether the search engine would be that capable.

Then I started seeing Apple videos about using Face ID and a smartphone camera to unlock the phone. It also appears that Google may add a similar capability to Pixel phones.

Would that make people feel a little more comfortable about a smartphone camera reading their faces and trying to understand their emotions as they search, possibly to help rank search results?

The process in this patent application would attempt to understand the emotions on people's faces and return graphical images that depict those emotions. Is this something that people would use and feel comfortable about?

The internet has introduced us to new ways to interact with the world, turning many of us into authors who publish daily on blogs and social networks.

We share photographs from our daily lives on those blogs and social networks, and on services such as Instagram.

People record videos of themselves every day and upload them to places such as YouTube.

Will we be OK with Google using our emotional reactions to search results, captured by our smartphone cameras, to influence the rankings of web pages?


Image Credits

Screenshot taken by author, July 2019