Unique, original content drives the Internet (so we’re constantly told), and we SEOs spend much of our time producing and honing that content in the hope of keeping the almighty Google happy as a clam.

Unfortunately, spammy websites have long lifted existing content verbatim and passed it off as their own creation. These scraper sites not only devalue the original content they have stolen, but can also hurt the rankings of the original page.

Many of us have been affected by scraper sites over the years, and there is nothing quite as nerve-racking as having your hard work stolen and then watching it earn a better piece of SERP real estate. So what is Google doing about scraper sites, and what can we do to protect ourselves and our websites?

What Are Scraper Sites?

If you’ve been lucky enough to avoid scraper sites thus far, a short primer will put you in the picture. A scraper site is a website that lifts original content from another site and passes it off as its own. We’re not talking about paraphrasing or quoting here. Scrapers steal whole blocks of original content, copying and pasting it onto their own websites. They do this to generate revenue, to divert traffic from the original site, and to manipulate page rankings. Until recently, there was little a webmaster could do about scraper sites that had stolen their content. That may be starting to change, though I stress the phrase ‘may be’.

Google, Matt Cutts, and Scraper Sites

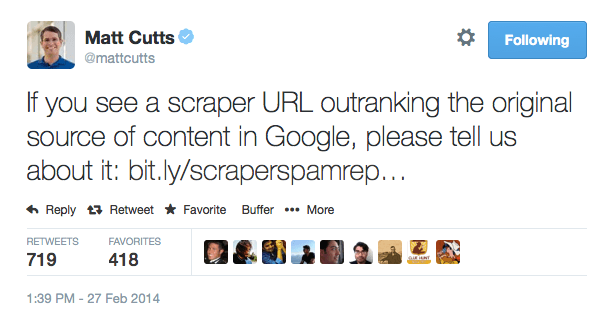

Over the last few years, Google has attempted to address the scraping problem through its various search algorithms. Unfortunately, that has had little effect on either scraper sites or their ability to gain ground in a given SERP. Just a few months back, in February 2014, Google’s Matt Cutts sent out a tweet announcing a new feature that allows webmasters to report scraper sites (shown above).

It’s not too much to say that Cutts’ tweet was met with consternation, some derision, and a fair bit of ill humor. It even prompted a response tweet that has since gone viral, but the less said about that the better. Whether or not you are a fan of Cutts and Google, it is clear that they have scraper sites in their crosshairs and are working on a plan to address the situation.

Reporting a Scraper Site

Reporting a scraper site is easy and only takes a few moments. Simply go to Google’s Scraper Report page and enter the URL of the original site and the URL of the scraper site. The report page also asks that you enter the search results URL that demonstrates the problem. Finally, before you can submit your report, you must confirm that the original website is following Google’s webmaster guidelines and that it has not received any recent manual penalties.

Google has yet to say what they are going to do with this information, and submitting a report is no guarantee that your site will improve in the page rankings or that the scraper site will be immediately penalized. But clearly, Google is gathering this information to help them form a suitable attack strategy to combat scraper sites.

Protecting Your Website’s Rankings

If your website has fallen prey to a scraper, there is not much you can do at the moment about the thievery itself. If your site is still outranking the scraper site, then you are in good shape as far as the SERPs go. However, if your site is being outranked by a scraper site, there are a few things you can do to help your site’s performance.

- Check your website for technical errors that may be preventing the search engine bots from accurately crawling and evaluating your content. This includes checking your XML sitemap and robots.txt file, and reviewing any crawl errors reported in Google Webmaster Tools (GWT) or a similar tool.

- Optimize your title, description, and content so that search engines can better establish each page’s topical relevance.

- Build better backlinks and deep links to indicate the popularity and relevance of your content. Yes, you absolutely must make outreach a large part of your SEO efforts to garner high-value links.

- Remove any bad links that may be affecting your page ranking. Well, remove the ones you can, document the rest for a possible disavow, and focus more effort on outreach than on removal.
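For the first item in the list above, a quick script can flag pages that your own robots.txt is hiding from crawlers. What follows is only a minimal sketch using Python’s standard `urllib.robotparser` module. The `blocked_urls` helper, the sample robots.txt rules, and the example.com URLs are all hypothetical stand-ins; swap in your own file contents and page list. It also only tests robots.txt rules, so it will not catch sitemap problems or other crawl errors.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent.

    Hypothetical helper for illustration: it parses robots.txt text you
    supply and does not fetch anything from the network.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    # Assumed sample rules: a leftover Disallow that hides the whole blog.
    sample_robots = "User-agent: *\nDisallow: /blog/\n"

    # Assumed page list: replace with the pages you expect to rank.
    pages = [
        "https://example.com/blog/my-original-post",
        "https://example.com/about",
    ]

    for url in blocked_urls(sample_robots, pages):
        print("Blocked from crawling:", url)
```

If a page you want ranked shows up in that output, the scraper may be outranking you simply because the bots cannot read your original.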

Despite the critical backlash against Matt Cutts’ February tweet, it is clear that Google is taking a fresh look at scraper sites. While reporting a scraper site may not result in any immediate action, the fact that Google is gathering this information is at least a step in the right direction. If you are being outranked by your own content, file a report and then turn to your own website and look for ways to improve your optimization strategies. At some point, our content efforts and our following all the rules have to be rewarded, right?

Featured image: screenshot of tweet published Feb 2014