In the beginning, search engines were crap.

I don’t mean to knock the pioneers, but they simply relied too heavily on what webmasters said their websites were about.

That’s why porn sites ranked for searches like “the whitehouse.”

People are shameless – if they can scam their way into money, you’d better believe they’ll do it. Follow the incentives.

When Google came onto the scene, touting founder Larry Page’s new PageRank metric, things changed.

PageRank was a way to measure websites not by how relevant their webmasters said they were – but by how relevant and authoritative other webmasters said they were.

Since then, links have been central to getting sites to rank in search results. It’s nearly impossible to rank without them. PageRank is definitely not a tell-all metric, but one of its core theories still holds true:

Not all links are created equal.

If you’re getting into the SEO game now, you probably already know you need links to rank. And you’ve probably heard the full gamut of ways to build or attract them.

This post assumes manual link building (i.e. everything other than linkbait) is at least part of your strategy.

Link metrics essentially answer (or attempt to answer) this question: how strong is the page where the link will be published?

The stronger the page, the stronger the link it passes.

What follows is an introductory guide to metrics we can use to evaluate links.

PageRank

To learn the basics of PageRank, it’s a good idea to read Larry Page and Sergey Brin’s paper, The Anatomy of a Large-Scale Hypertextual Web Search Engine, written during their PhD work at Stanford. Yes, it’s academic writing, so you may want to stab your eyes out with a pretzel at some point, but this document formed the basis of one of the biggest technology revolutions in modern history, so buck up.

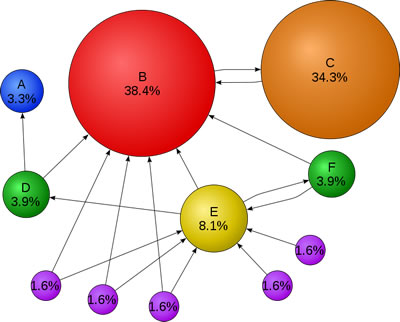

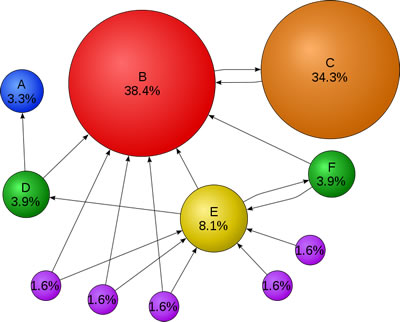

Alright, I know probably 95% of you won’t read the paper – so have a look at this graphic. It gives you the basic idea. (Arrows represent links.)

Things have changed since PageRank was first conceived (quite a bit), but the basics are still in play.

PageRank, as the toolbar shows it, is basically a 0-10 score for a page based on how many links it has (and how strong those links are). It’s generally believed to be logarithmic, meaning it’s roughly 10x harder to get from 2 to 3 than it is to get from 1 to 2.
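To make the logarithmic idea concrete, here is a tiny sketch. It assumes a base of 10, which is the figure used in this post; Google has never published the real base, and `strength_needed` is a made-up helper for illustration, not an actual Google formula.

```python
# Illustrative only: assumes each PageRank step needs 10x the "link strength"
# of the step before it. The real base is not public.
BASE = 10


def strength_needed(score, base=BASE):
    """Hypothetical raw link strength needed to reach a given toolbar score."""
    return base ** score


for score in range(1, 6):
    # Extra strength required to climb one step on the toolbar scale.
    step = strength_needed(score) - strength_needed(score - 1)
    print(f"PR {score - 1} -> PR {score}: about {step:,} units of link strength")
```

Under that assumption, every step up the scale costs ten times what the previous step did.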

It generally follows that the higher the PageRank of a particular page, the more PageRank (or “link juice”) that page can pass to other pages through its links.
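If you want to see that flow in action, here is a minimal sketch of the classic iterative PageRank calculation on a made-up four-page web. It uses the normalized variant of the formula with the 0.85 damping factor suggested in the original paper; the `.example` domains and the `pagerank` function name are assumptions for illustration, and this is a textbook toy, not what Google runs today.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Toy PageRank. `links` maps each page to the list of pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its passable rank evenly across its outbound links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical link graph: each key links out to the pages in its list.
toy_web = {
    "a.example": ["b.example", "c.example"],
    "b.example": ["c.example"],
    "c.example": ["a.example"],
    "d.example": ["c.example"],
}

scores = pagerank(toy_web)
for page, score in sorted(scores.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph c.example comes out on top because three pages link to it, and a.example benefits from being the only page c.example links to. d.example, with no inbound links at all, ends up at the bottom.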

While most SEOs worth their salt will tell you to ignore PageRank, they still secretly check it when nobody’s looking.

How can you collect PageRank data?

- With a toolbar (Google Toolbar, SEOQuake)

- With live SERP displays (SEOmoz Toolbar, SEOQuake)

mozRank & mozTrust (from SEOmoz)

SEOmoz, based in Seattle, moved from their original model of an SEO agency to helping SEOs do their jobs better by providing tools, resources and education. You’ve probably heard of them.

In October of 2008 SEOmoz launched something exciting: Linkscape.

Linkscape was the culmination of a pretty bold endeavor on the part of SEOmoz and their engineering team: they’d created a link graph of the web. They crawled about a billion URLs and collected link information on each one, storing it in a rather massive index.

I was at SMX East in New York City the morning Linkscape was announced, and I can confirm that Rand Fishkin, CEO and founder, was chased through the expo hall aisles by a squadron of screaming SEO geeks.

Along with the launch of Linkscape and its reporting tools, SEOmoz launched (and continues to expand) an impressive set of tools that use their link index to evaluate links and pages.

In order for this data to be actionable, it needed to be organized and evaluated in the same way search engines handle their own link metrics.

Thus mozRank was modeled after PageRank, and mozTrust was modeled after TrustRank (a metric cited in a Yahoo! research paper as a potential means to measure the “trustworthiness” of a given page or website).

Essentially, mozTrust measures the flow of trust from core “seed” sites (whitehouse.gov, for example) out to the rest of the web. The idea: the more closely a page or site is connected to a trusted seed (via links from that seed), the more mozTrust it has (and, we’re to assume, ranking power).
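Here is a rough sketch of how trust might flow outward from a seed, in the spirit of TrustRank. To be clear, this is not SEOmoz’s actual mozTrust calculation (which isn’t described here); the function name, the `.example` domains and the 0.85 damping value are all assumptions for illustration.

```python
def trust_scores(links, seeds, damping=0.85, iterations=50):
    """Toy seed-based trust propagation. `links` maps a page to pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets} | set(seeds)
    # Only seed pages receive baseline trust; everything else must earn it via links.
    seed_share = {p: (1.0 / len(seeds) if p in seeds else 0.0) for p in pages}
    trust = dict(seed_share)

    for _ in range(iterations):
        new_trust = {p: (1.0 - damping) * seed_share[p] for p in pages}
        for page, targets in links.items():
            if not targets:
                continue  # pages with no outbound links pass nothing on
            share = damping * trust[page] / len(targets)
            for target in targets:
                new_trust[target] += share
        trust = new_trust
    return trust


# Hypothetical graph: one trusted seed, two pages downstream, one unconnected page.
toy_web = {
    "seed.example": ["near.example"],
    "near.example": ["far.example"],
    "far.example": [],
    "island.example": ["far.example"],
}

trust = trust_scores(toy_web, seeds={"seed.example"})
for page, score in sorted(trust.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.4f}")
```

The page one link away from the seed gets more trust than the page two links away, and island.example gets none at all, since no trusted page links to it.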

How can you collect mozRank and mozTrust data?

- OpenSiteExplorer.org – a new SEOmoz project that allows users to enter a URL (or two, for comparison) and returns all kinds of juicy link data

- SEOmoz Toolbar (“PRO” members only)

- Various other SEOmoz tools (“PRO” members only)

Note: OpenSiteExplorer.org does give you a decent amount of data without being a logged-in PRO member, but to really dive in deep you’ll need to join. I’m a long-time paying member of SEOmoz (I think they owe me some beers), but I’m not being paid to say that – and there are some other great toolsets and educational sites out there, such as Raven SEO Tools and SEOBook, that are well worth a look.

ACRank (Majestic SEO)

Majestic SEO actually was on the scene with a competitive link index well before SEOmoz launched Linkscape.

In terms of a qualitative metric, Majestic features ACRank, which is, according to their website, a “very simple measure of how important a particular page is…depending on number of unique referring ‘short domains’” (a “short domain” is Majestic’s term for what many refer to as a “root domain”).
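Counting unique referring root domains is easy to sketch. The example below only shows the counting step on a made-up list of backlinks; it does not reproduce how Majestic turns that count into an ACRank score. The `short_domain` helper is a naive assumption that keeps the last two labels of the hostname, which breaks on suffixes like .co.uk.

```python
from urllib.parse import urlsplit


def short_domain(url):
    """Naive root domain: the last two labels of the hostname (fails on .co.uk etc.)."""
    host = urlsplit(url).hostname or ""
    labels = host.lower().split(".")
    return ".".join(labels[-2:])


def count_referring_domains(backlink_urls):
    """Number of distinct root domains among the URLs that link to a page."""
    domains = {short_domain(url) for url in backlink_urls}
    domains.discard("")  # ignore anything that had no hostname
    return len(domains)


# Hypothetical backlinks pointing at one page.
backlinks = [
    "http://www.site-a.example/page1",
    "http://blog.site-a.example/page2",
    "http://site-b.example/",
    "http://site-b.example/links.html",
]

print(count_referring_domains(backlinks))  # four links, but only 2 root domains
```

The point: four links from two sites count as two referring domains, not four.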

Majestic’s index of the web is reportedly larger than SEOmoz’s by quite a bit. Majestic’s latest estimate was 1.5 trillion URLs indexed – compared with SEOmoz’s “43+ billion.”

It’s worth noting, however, that there probably aren’t 1.5 trillion relevant pages on the web. There’s a lot of crap out there, and most of it is totally worthless to search engines and users.

For a full comparison of Majestic SEO to SEOmoz’s Linkscape, check out this post from Dixon Jones which provides a pretty thorough analysis.

How can you collect data on ACRank?

- Sign up for Majestic SEO

- Install the SEOBook toolbar (it displays the number of in-linking domains according to Majestic)

- Sign up for Raven SEO Tools (Raven and Majestic entered into a partnership earlier this year)

A Great Big Grain of Salt

While all of the metrics above are solid places to start when evaluating links, they each come with gotchas.

Since the value of links became common knowledge, search engines (especially Google) have been fighting the tactics that allow people to “game” their way to the top by building links easily and scalably.

In short, Google doesn’t like to look stupid. It hurts their brand (and their feelings).

Some link tactics that made Google look stupid (and brought down their wrath):

- Excessive reciprocal or three-way links

- Paid links (at least, links directly paid for – there are ways to pay for links without “paying” for them)

- Questionable linkbait tactics

- Free-for-all link directories

Because of tactics like these (especially paid links), Google introduced “nofollow” – a value that can be set in the rel attribute of an anchor tag (<a href=”… ), or applied to the entire page itself (via the Meta Robots tag), that tells search engines to “ignore this link” or “ignore all links on this page.”

Any nofollowed link is essentially worthless from an SEO standpoint. It doesn’t pass ranking power.
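If you’re checking a prospect page by hand, you can view source and search for “nofollow.” The sketch below does the same thing with Python’s standard html.parser module. The `LinkAudit` class, the `followed_links` function and the sample markup are hypothetical names made up for this example, and the check is deliberately simple (it doesn’t handle every robots directive).

```python
from html.parser import HTMLParser


class LinkAudit(HTMLParser):
    """Collects links and notes whether the page or each link is nofollowed."""

    def __init__(self):
        super().__init__()
        self.page_nofollow = False
        self.links = []  # list of (href, is_nofollow) pairs

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            # Page-level nofollow set through the Meta Robots tag.
            if "nofollow" in (attrs.get("content") or "").lower():
                self.page_nofollow = True
        elif tag == "a" and attrs.get("href"):
            # Link-level nofollow set through the rel attribute.
            rel_values = (attrs.get("rel") or "").lower().split()
            self.links.append((attrs["href"], "nofollow" in rel_values))


def followed_links(html):
    """Return the hrefs on a page that are not nofollowed."""
    audit = LinkAudit()
    audit.feed(html)
    if audit.page_nofollow:
        return []
    return [href for href, is_nofollow in audit.links if not is_nofollow]


sample = (
    '<p><a href="http://a.example/">editorial link</a> '
    '<a rel="nofollow" href="http://b.example/">paid link</a></p>'
)
print(followed_links(sample))  # only the first link is followed
```

If the page carries a robots meta tag with nofollow, the function returns an empty list, since every link on that page is treated as nofollowed.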

Additionally, Google and other search engines take manual action to penalize websites for selling links, being otherwise manipulative, or violating their webmaster guidelines.

Sometimes, the website in question drops significantly in rankings. Sometimes it’s booted entirely from the index. Sometimes its ability to pass value through its links is stripped.

The reason you need to know all this when you start evaluating links using the metrics above: these metrics don’t always tell the whole story.

For instance: if a site has had its ability to pass link value stripped, the PageRank may still look solid. But the links themselves pass no value.

Sometimes an individual page on a site (a “links” or “resources” page) will be penalized – and its PageRank will be stripped. You can see this by using a toolbar or another means to check the page.

One way to check for penalties: compare the mozRank or ACRank of a page to its PageRank. If there’s a great discrepancy, and the mozRank/ACRank is high while the PageRank is very low or zero, this is a good sign the page may have been hit by a Google penalty.
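That comparison can be turned into a quick filter when you’re working through a long prospect list. This is a rough heuristic sketch, not a penalty detector: the `possible_penalty` name and both thresholds are arbitrary assumptions, and it assumes you’ve already put the third-party score on a scale comparable to toolbar PageRank.

```python
def possible_penalty(pagerank, third_party_score, high=5.0, low=1.0):
    """Flag a page whose third-party score is high while its PageRank is near zero.

    Both thresholds are arbitrary; tune them to the scales of the scores you collect.
    """
    if pagerank is None or third_party_score is None:
        return False  # missing data tells us nothing either way
    return third_party_score >= high and pagerank <= low


# Hypothetical prospects: (url, toolbar PageRank, mozRank or rescaled ACRank)
prospects = [
    ("http://a.example/resources", 0, 6.2),
    ("http://b.example/links", 5, 5.4),
    ("http://c.example/new-page", 0, 1.1),
]

for url, pr, other in prospects:
    flag = "check for penalty" if possible_penalty(pr, other) else "looks consistent"
    print(f"{url}: {flag}")
```

Only the first prospect gets flagged. The third has a low PageRank too, but its third-party score is also low, which looks more like a new or weak page than a penalized one.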

The bigger question here is how reliable third-party metrics are, since they can never be a true representation of a search engine’s link graph. When you throw manual penalties into the mix, the entire graph shifts. One powerful website stripped of its ability to pass link juice impacts all the sites it links to: their value drops as a result, as does the value of the links they pass, and so on.

Still, Google isn’t going to open up their true link graph to the public. So we’ve got to take what we can get – but we should be aware of these issues.

More reading

“Nofollow”

To learn more about the “nofollow” attribute and juiceless links check out this excellent guide written by Paul Teitelman over at Search Engine People.

Other link metrics

This post from Jordan Kasteler at Search & Social explains one of the often-overlooked link metrics: link placement.

This post from Big Dave Snyder provides some great tips on evaluating links that aren’t covered in this post.

Have your own link metrics you rely on for your link building? Share them in the comments.