Google Search Console provides the data needed to monitor website performance in search and improve search rankings, data that is available only through Search Console.

This makes it indispensable for online businesses and publishers that are keen to maximize success.

Taking control of your search presence is easier when you use its free tools and reports.


What Is Google Search Console?

Google Search Console is a free web service hosted by Google that provides a way for publishers and search marketing professionals to monitor their overall site health and performance relative to Google search.

It offers an overview of metrics related to search performance and user experience to help publishers improve their sites and generate more traffic.

Search Console also provides a way for Google to communicate when it discovers security issues (like hacking vulnerabilities) or when the search quality team has imposed a manual action penalty.

Important features:

- Monitor indexing and crawling.

- Identify and fix errors.

- Overview of search performance.

- Request indexing of updated pages.

- Review internal and external links.

Using Search Console is not necessary to rank better, nor is it a ranking factor.

However, the usefulness of the Search Console makes it indispensable for helping improve search performance and bringing more traffic to a website.

How To Get Started

The first step to using Search Console is to verify site ownership.

Google provides several ways to accomplish site verification, depending on whether you’re verifying a website, a domain, a Google site, or a Blogger-hosted site.

Domains registered with Google Domains are automatically verified when they are added to Search Console.

The majority of users will verify their sites using one of four methods:

- HTML file upload.

- Meta tag.

- Google Analytics tracking code.

- Google Tag Manager.

Some site hosting platforms limit what can be uploaded and require a specific way to verify site owners.

But, that’s becoming less of an issue as many hosted site services have an easy-to-follow verification process, which will be covered below.

How To Verify Site Ownership

There are two standard ways to verify site ownership with a regular website, like a standard WordPress site:

- HTML file upload.

- Meta tag.

When verifying a site using either of these two methods, you’ll be choosing the URL-prefix properties process.

Let’s stop here and acknowledge that the phrase “URL-prefix properties” means absolutely nothing to anyone but the Googler who came up with that phrase.

Don’t let that make you feel like you’re about to enter a labyrinth blindfolded. Verifying a site with Google is easy.

HTML File Upload Method

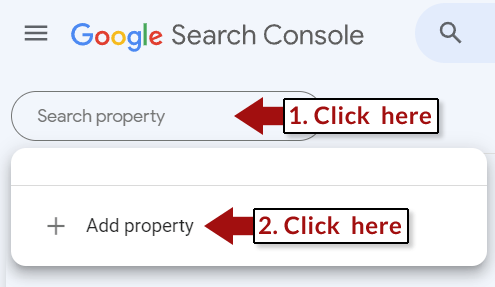

Step 1: Go to the Search Console and open the Property Selector dropdown that’s visible in the top left-hand corner on any Search Console page.

Screenshot by author, May 2022

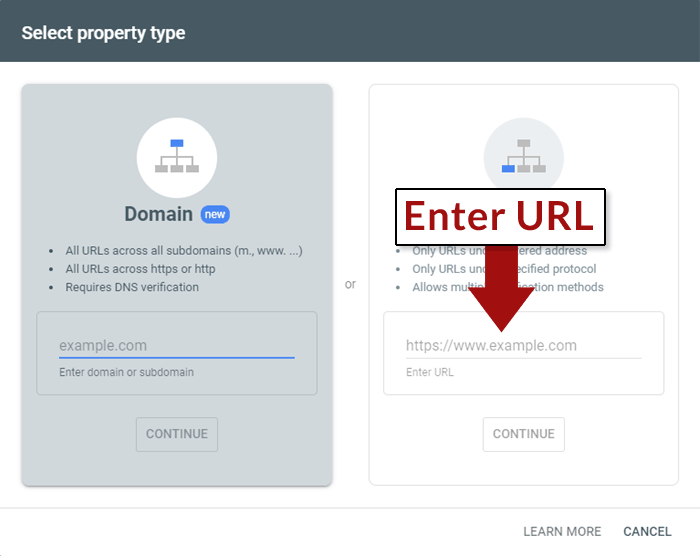

Step 2: In the pop-up labeled Select Property Type, enter the URL of the site, then click the Continue button.

Step 3: Select the HTML file upload method and download the HTML file.

Step 4: Upload the HTML file to the root of your website.

Root means https://example.com/. So, if the downloaded file is called verification.html, then the uploaded file should be located at https://example.com/verification.html.

Step 5: Finish the verification process by clicking Verify back in the Search Console.
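As a rough illustration of Step 4, the expected location of the uploaded file can be derived from the site root and the file name. This is only a sketch: the function name `verification_file_url` is made up for this example, and `verification.html` is the placeholder file name used above, not the name Google will actually give your file.

```python
from urllib.parse import urljoin


def verification_file_url(site_root: str, filename: str) -> str:
    """Return the URL where the uploaded verification file should be reachable.

    Hypothetical helper for illustration; not part of any Google tooling.
    """
    # Make sure the root ends with a slash so urljoin treats it as a directory.
    if not site_root.endswith("/"):
        site_root += "/"
    return urljoin(site_root, filename)


print(verification_file_url("https://example.com", "verification.html"))
# prints https://example.com/verification.html
```

Opening the resulting URL in a browser before clicking Verify is a quick way to confirm the file landed in the root rather than in a subfolder.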

Verification of a standard website with its own domain on website platforms like Wix and Weebly is similar to the above steps, except that you’ll be adding a verification meta tag to your site instead of uploading a file.

Duda has a simple approach that uses a Search Console App that easily verifies the site and gets its users started.
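For the meta tag method, it can help to confirm that the tag actually appears in the page's HTML before asking Google to verify. The sketch below assumes the tag uses the name `google-site-verification`; the class and function names, the sample HTML, and the token `PLACEHOLDER_TOKEN` are invented for illustration.

```python
from html.parser import HTMLParser


class VerificationTagFinder(HTMLParser):
    """Collect the content of any google-site-verification meta tags."""

    def __init__(self):
        super().__init__()
        self.tokens = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr_map = dict(attrs)
        # Attribute names arrive lowercased; compare the value case-insensitively.
        if (attr_map.get("name") or "").lower() == "google-site-verification":
            self.tokens.append(attr_map.get("content"))


def find_verification_tokens(html: str) -> list:
    """Return every verification token found in the given HTML (hypothetical helper)."""
    finder = VerificationTagFinder()
    finder.feed(html)
    return finder.tokens


# Sample markup with a placeholder token, not a real verification value.
sample = (
    "<html><head>"
    '<meta name="google-site-verification" content="PLACEHOLDER_TOKEN">'
    "</head><body></body></html>"
)
print(find_verification_tokens(sample))  # prints ['PLACEHOLDER_TOKEN']
```

An empty list would suggest the platform did not publish the tag, or that it was added to a template that the home page does not use.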

Troubleshooting With GSC

Ranking in search results depends on Google’s ability to crawl and index webpages.

The Search Console URL Inspection Tool warns of any issues with crawling and indexing before they become major problems and pages start dropping from the search results.

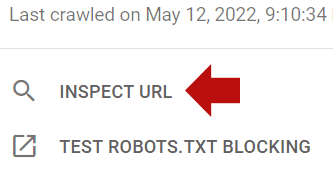

URL Inspection Tool

The URL inspection tool shows whether a URL is indexed and is eligible to be shown in a search result.

For each submitted URL a user can:

- Request indexing for a recently updated webpage.

- View how Google discovered the webpage (sitemaps and referring internal pages).

- View the last crawl date for a URL.

- Check if Google is using a declared canonical URL or is using another one.

- Check mobile usability status.

- Check enhancements like breadcrumbs.

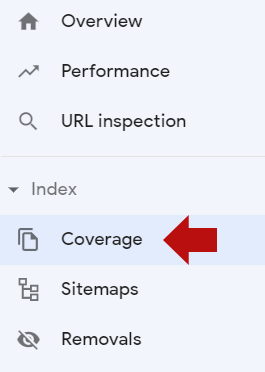

Coverage

The coverage section shows Discovery (how Google discovered the URL), Crawl (shows whether Google successfully crawled the URL and if not, provides a reason why), and Enhancements (provides the status of structured data).

The coverage section can be reached from the left-hand menu:

Coverage Error Reports

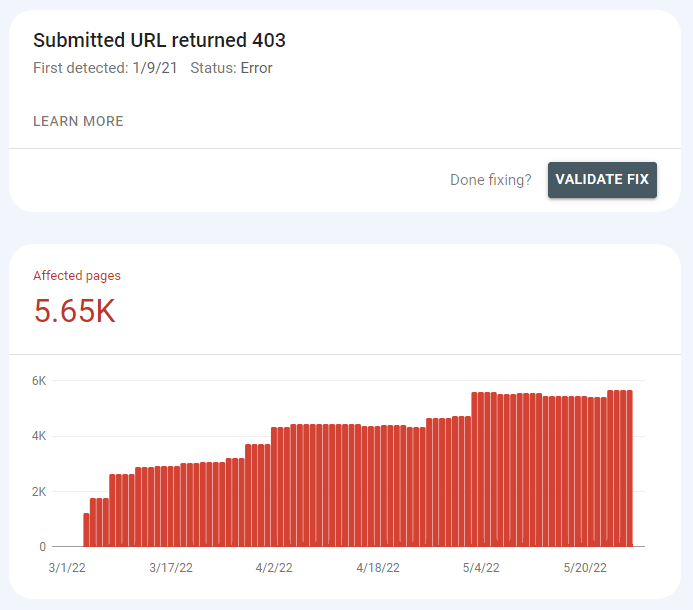

Although these reports are labeled as errors, that doesn’t necessarily mean that something is wrong. Sometimes it just means that indexing can be improved.

For example, in the following screenshot, Google is reporting a 403 Forbidden server response for nearly 6,000 URLs.

The 403 error response means that the server is telling Googlebot that it is forbidden from crawling these URLs.

The above errors are happening because Googlebot is blocked from crawling the member pages of a web forum.

Every member of the forum has a member page that has a list of their latest posts and other statistics.

The report provides a list of URLs that are generating the error.

Clicking on one of the listed URLs reveals a menu on the right that provides the option to inspect the affected URL.

There’s also a contextual menu to the right of the URL itself in the form of a magnifying glass icon that also provides the option to Inspect URL.

Clicking Inspect URL reveals how the page was discovered.

It also shows the following data points:

- Last crawl.

- Crawled as.

- Crawl allowed?

- Page fetch (if failed, provides the server error code).

- Indexing allowed?

There is also information about the canonical used by Google:

- User-declared canonical.

- Google-selected canonical.

For the forum website in the above example, the important diagnostic information is located in the Discovery section.

This section tells us which pages are the ones that are showing links to member profiles to Googlebot.

With this information, the publisher can now code a PHP statement that will make the links to the member pages disappear when a search engine bot comes crawling.

Another way to fix the problem is to write a new entry to the robots.txt to stop Google from attempting to crawl these pages.

By making this 403 error go away, we free up crawling resources for Googlebot to index the rest of the website.
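The robots.txt option can be sanity-checked locally before it goes live. The sketch below uses Python's standard `urllib.robotparser` and assumes, purely for illustration, that the forum's member pages live under `/members/`; the real path depends on the forum software.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt entry; /members/ is an assumed path, not taken from the report.
robots_lines = [
    "User-agent: Googlebot",
    "Disallow: /members/",
]

parser = RobotFileParser()
parser.parse(robots_lines)


def googlebot_may_fetch(url: str) -> bool:
    """Return True if the rules above allow Googlebot to crawl the URL."""
    return parser.can_fetch("Googlebot", url)


print(googlebot_may_fetch("https://example.com/members/jane"))   # prints False
print(googlebot_may_fetch("https://example.com/forum/thread-1"))  # prints True
```

Testing a handful of URLs this way helps catch a rule that is too broad and would block pages that should stay crawlable.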

Google Search Console’s coverage report makes it possible to diagnose Googlebot crawling issues and fix them.

Fixing 404 Errors

The coverage report can also alert a publisher to 404 and 500 series error responses, as well as communicate that everything is just fine.

A 404 server response is called an error only because the browser or crawler requested a webpage that does not exist, so the request itself was made in error.

It doesn’t mean that your site is in error.

If another site (or an internal link) links to a page that doesn’t exist, the coverage report will show a 404 response.

Clicking on one of the affected URLs and selecting the Inspect URL tool will reveal what pages (or sitemaps) are referring to the non-existent page.

From there you can decide if the link is broken and needs to be fixed (in the case of an internal link) or redirected to the correct page (in the case of an external link from another website).

Or, it could be that the webpage never existed and whoever is linking to that page made a mistake.

If the page doesn’t exist anymore or it never existed at all, then it’s fine to show a 404 response.
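The decision process described above can be written down as a small rule set. This is only a sketch of the reasoning in this section; the function name, the argument names, and the returned wording are made up for illustration.

```python
def triage_404(link_source: str, page_should_exist: bool) -> str:
    """Suggest what to do about a URL that returns a 404.

    link_source: "internal" if the referring link is on your own site,
                 "external" if it comes from another website.
    page_should_exist: False if the page was removed on purpose or never existed.
    """
    if not page_should_exist:
        # Nothing to fix: a 404 is the correct response for a missing page.
        return "leave as 404"
    if link_source == "internal":
        return "fix the broken link"
    if link_source == "external":
        return "redirect to the correct page"
    raise ValueError(f"unknown link source: {link_source!r}")


print(triage_404("internal", True))   # prints fix the broken link
print(triage_404("external", True))   # prints redirect to the correct page
print(triage_404("external", False))  # prints leave as 404
```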

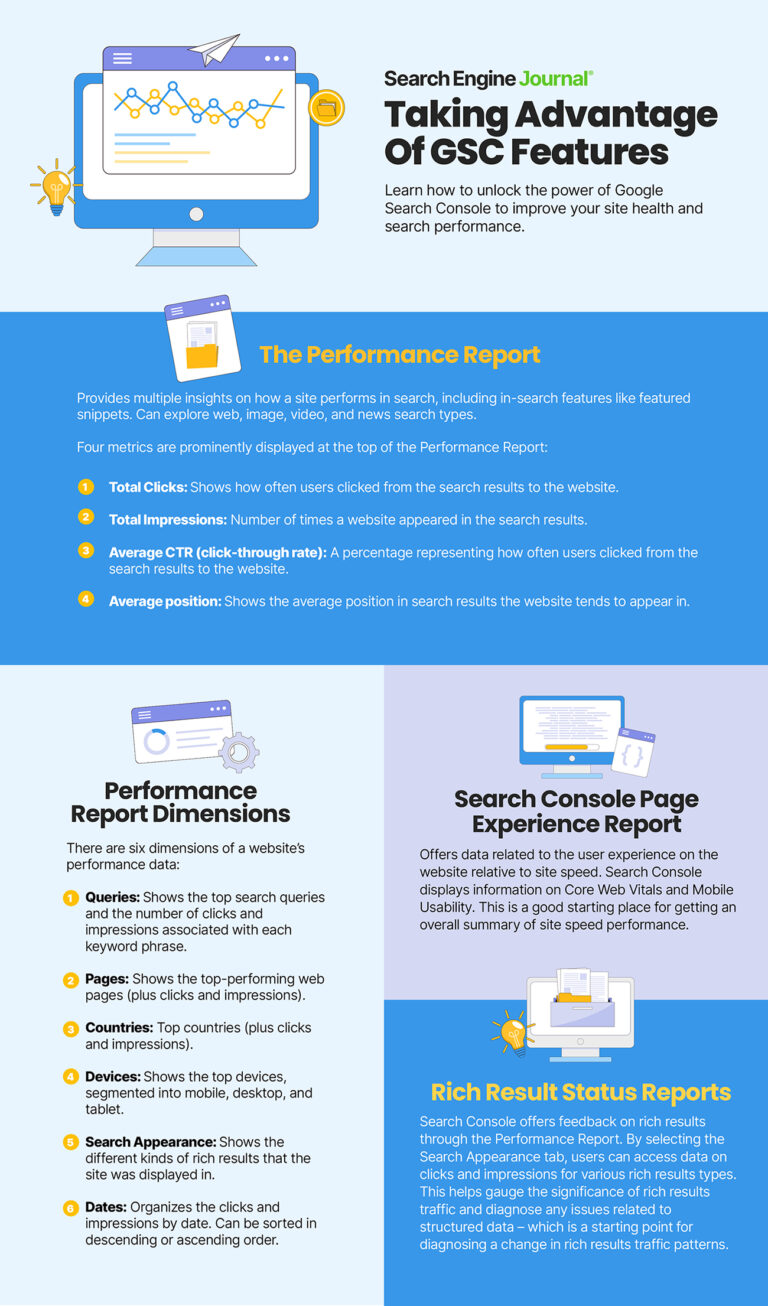

Taking Advantage Of GSC Features

The Performance Report

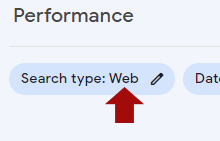

The top part of the Search Console Performance Report provides multiple insights on how a site performs in search, including in search features like featured snippets.

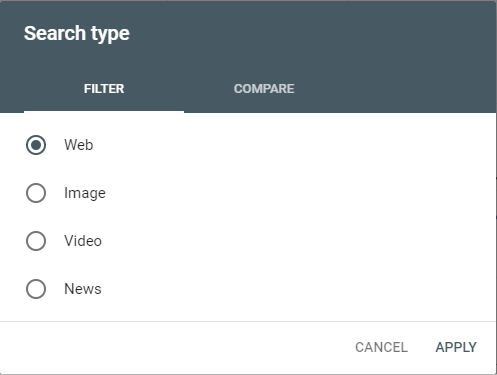

There are four search types that can be explored in the Performance Report:

- Web.

- Image.

- Video.

- News.

Search Console shows the web search type by default.

Change which search type is displayed by clicking the Search Type button:

A pop-up menu will display, allowing you to change which search type to view:

A useful feature is the ability to compare the performance of two search types within the graph.

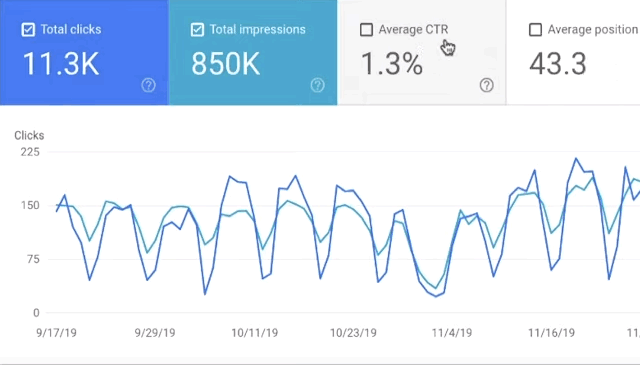

Four metrics are prominently displayed at the top of the Performance Report:

- Total Clicks.

- Total Impressions.

- Average CTR (click-through rate).

- Average position.

By default, the Total Clicks and Total Impressions metrics are selected.

Clicking the tab dedicated to each metric toggles whether that metric is displayed on the chart.

Impressions

Impressions are the number of times a website appeared in the search results. As long as a user doesn’t have to click a link to see the URL, it counts as an impression.

Additionally, if a URL is ranked at the bottom of the page and the user doesn’t scroll to that section of the search results, it still counts as an impression.

High impressions are great because it means that Google is showing the site in the search results.

But the impressions metric only becomes meaningful when considered alongside the Clicks and Average Position metrics.

Clicks

The clicks metric shows how often users clicked from the search results to the website. A high number of clicks in addition to a high number of impressions is good.

A low number of clicks and a high number of impressions is less good but not bad. It means that the site may need improvements to gain more traffic.

The clicks metric is more meaningful when considered with the Average CTR and Average Position metrics.

Average CTR

The average CTR is the percentage of impressions that resulted in a click from the search results to the website (clicks divided by impressions).

A low CTR means that something needs improvement in order to increase visits from the search results.

A higher CTR means the site is performing well.

This metric gains more meaning when considered together with the Average Position metric.
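As a quick worked example, CTR is clicks divided by impressions, expressed as a percentage. The numbers below are invented for illustration and the function name is hypothetical.

```python
def click_through_rate(clicks: int, impressions: int) -> float:
    """Return CTR as a percentage; 0.0 when there were no impressions."""
    if impressions == 0:
        return 0.0
    return 100 * clicks / impressions


# Example: 50 clicks from 2,000 impressions (made-up figures).
print(f"{click_through_rate(50, 2000):.1f}%")  # prints 2.5%
```

The same 50 clicks would be a far stronger result from 200 impressions (25%) than from 2,000, which is why clicks and impressions are best read together.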

Average Position

Average Position shows the average position in search results the website tends to appear in.

An average in positions one to 10 is great.

An average position in the twenties (20 – 29) means that the site is appearing on page two or three of the search results. This isn’t too bad. It simply means that the site needs additional work to give it that extra boost into the top 10.

Average positions worse than 30 (that is, a number greater than 30) could, in general, mean that the site may benefit from significant improvements.

Or, it could be that the site ranks for a large number of keyword phrases that rank low and a few very good keywords that rank exceptionally high.

In either case, it may mean taking a closer look at the content. It may be an indication of a content gap on the website, where the content that ranks for certain keywords isn’t strong enough and may need a dedicated page devoted to that keyword phrase to rank better.
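To see how many low-ranking keyword phrases can drag down the average even when a few rank very well, consider the sketch below. It assumes a simple impression-weighted mean, which may not match exactly how Search Console computes the figure, and all numbers are invented.

```python
def average_position(rows):
    """Impression-weighted mean position for (position, impressions) pairs.

    Assumption: a plain weighted average, used here only to illustrate the effect.
    """
    total_impressions = sum(impressions for _, impressions in rows)
    if total_impressions == 0:
        raise ValueError("no impressions to average over")
    weighted_sum = sum(position * impressions for position, impressions in rows)
    return weighted_sum / total_impressions


# One keyword ranking at position 2, plus two groups of low-ranking phrases.
rows = [(2, 100), (45, 300), (60, 100)]
print(f"{average_position(rows):.1f}")  # prints 39.4
```

Here the site has a keyword at position 2, yet the overall average lands near 39 because most impressions come from phrases ranking on pages five and six.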

All four metrics (Impressions, Clicks, Average CTR, and Average Position), when viewed together, present a meaningful overview of how the website is performing.

The big takeaway about the Performance Report is that it is a starting point for quickly understanding website performance in search.

It’s like a mirror that reflects back how well or poorly the site is doing.

Performance Report Dimensions

Scrolling down to the second part of the Performance page reveals what are called the Dimensions of a website’s performance data.

There are six dimensions:

1. Queries: Shows the top search queries and the number of clicks and impressions associated with each keyword phrase.

2. Pages: Shows the top-performing web pages (plus clicks and impressions).

3. Countries: Top countries (plus clicks and impressions).

4. Devices: Shows the top devices, segmented into mobile, desktop, and tablet.

5. Search Appearance: This shows the different kinds of rich results that the site was displayed in. It also tells if Google displayed the site using Web Light results and video results, plus the associated clicks and impressions data. Web Light results are results that are optimized for very slow devices.

6. Dates: The dates tab organizes the clicks and impressions by date. The clicks and impressions can be sorted in descending or ascending order.

Keywords

Keywords are displayed under Queries, one of the dimensions of the Performance Report (as noted above). The queries report shows the top 1,000 search queries that resulted in traffic.

Of particular interest are the low-performing queries.

Some of those queries display low quantities of traffic because they are rare, what is known as long-tail traffic.

But others are search queries that lead to webpages that could use improvement. The page may need more internal links, or the low numbers could be a sign that the keyword phrase deserves its own webpage.

It’s always a good idea to review the low-performing keywords because some of them may be quick wins that, when the issue is addressed, can result in significantly increased traffic.
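One way to surface such candidates is to filter exported query data for phrases that are seen often but rarely clicked. The sketch below works on a plain list of dictionaries; the field names, thresholds, and sample rows are assumptions for illustration and do not reflect any particular export format.

```python
def quick_win_candidates(rows, min_impressions=100, max_ctr_percent=1.0):
    """Return queries with plenty of impressions but a low click-through rate.

    The thresholds are arbitrary starting points, not recommended values.
    """
    candidates = []
    for row in rows:
        # Skip rare (long-tail) queries: low traffic here is expected.
        if row["impressions"] < min_impressions:
            continue
        ctr_percent = 100 * row["clicks"] / row["impressions"]
        if ctr_percent <= max_ctr_percent:
            candidates.append(row["query"])
    return candidates


# Made-up sample data.
rows = [
    {"query": "blue widgets", "clicks": 2, "impressions": 900},
    {"query": "rare widget part 1973", "clicks": 1, "impressions": 12},
    {"query": "widget store", "clicks": 80, "impressions": 1000},
]
print(quick_win_candidates(rows))  # prints ['blue widgets']
```

The long-tail query is skipped because its low traffic is normal, while "blue widgets" is flagged as a phrase whose page may deserve a closer look.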

Links

Search Console offers a list of all links pointing to the website.

However, it’s important to point out that the links report does not represent links that are helping the site rank.

It simply reports all links pointing to the website.

This means that the list includes links that are not helping the site rank. That explains why the report may show links that have a nofollow link attribute on them.

The Links report is accessible from the bottom of the left-hand menu:

The Links report has two columns: External Links and Internal Links.

External Links are the links from outside the website that point to the website.

Internal Links are links that originate within the website and link to somewhere else within the website.

The External links column has three reports:

- Top linked pages.

- Top linking sites.

- Top linking text.

The Internal Links report lists the Top Linked Pages.

Each report (top linked pages, top linking sites, etc.) has a link to more results that can be clicked to view and expand the report for each type.

For example, the expanded report for Top Linked Pages shows Top Target pages, which are the pages from the site that are linked to the most.

Clicking a URL will change the report to display all the external domains that link to that one page.

The report shows the domain of the external site but not the exact page that links to the site.

Sitemaps

A sitemap is generally an XML file that is a list of URLs that helps search engines discover the webpages and other forms of content on a website.

Sitemaps are especially helpful for large sites, sites that are difficult to crawl, and sites that have new content added on a frequent basis.

Crawling and indexing are not guaranteed. Things like page quality, overall site quality, and links can have an impact on whether a site is crawled and pages indexed.

Sitemaps simply make it easy for search engines to discover those pages and that’s all.
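To make the format concrete, here is a minimal sketch that builds a bare-bones XML sitemap with Python's standard library. The function name and the example URLs are placeholders, and real sitemaps are usually generated by the CMS or platform rather than by hand.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap listing the given URLs (hypothetical helper)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Placeholder URLs for illustration.
sitemap_xml = build_sitemap([
    "https://example.com/",
    "https://example.com/about",
])
print(sitemap_xml)
```

Each `<url>` entry only needs a `<loc>` element; the list tells search engines which pages exist, while crawling and indexing remain up to them.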

Creating a sitemap is easy because most are automatically generated by the CMS, plugins, or the website platform where the site is hosted.

Some hosted website platforms generate a sitemap for every site hosted on their service and automatically update the sitemap when the website changes.

Search Console offers a sitemap report and provides a way for publishers to submit a sitemap.

To access this function, click the Sitemaps link in the left-hand menu.

The sitemap section will report on any errors with the sitemap.

Search Console can be used to remove a sitemap from the reports. However, it’s important to also remove the sitemap from the website itself; otherwise, Google may remember it and visit it again.

Once a sitemap is submitted and processed, the Coverage report will populate a sitemap section that will help troubleshoot any problems associated with URLs submitted through the sitemaps.

Search Console Page Experience Report

The page experience report offers data related to the user experience on the website relative to site speed.

Search Console displays information on Core Web Vitals and Mobile Usability.

This is a good starting place for getting an overall summary of site speed performance.

Rich Result Status Reports

Search Console offers feedback on rich results through the Performance Report. It’s one of the six dimensions listed below the graph that’s displayed at the top of the page, listed as Search Appearance.

Selecting the Search Appearance tab reveals clicks and impressions data for the different kinds of rich results shown in the search results.

This report communicates how important rich results traffic is to the website and can help pinpoint the reason for specific website traffic trends.

The Search Appearance report can help diagnose issues related to structured data.

For example, a downturn in rich results traffic could be a signal that Google changed structured data requirements and that the structured data needs to be updated.

It’s a starting point for diagnosing a change in rich results traffic patterns.

Search Console Is Good For SEO

In addition to the above benefits of Search Console, publishers and SEOs can also upload link disavow files, resolve penalties (manual actions), and address security events like site hackings, all of which contribute to a better search presence.

It is a valuable service that every web publisher concerned about search visibility should take advantage of.

Here’s a visual breakdown of how to take advantage of Google Search Console features. Be sure to save a copy for future reference.


Featured Image: bunny pixar/Shutterstock