Creating and updating your XML sitemap is an essential yet overlooked SEO practice.

Sitemaps matter to both your site and search engines.

For search engines, sitemaps are an easy and straightforward way to get information about your website’s structure and pages.

XML sitemaps provide some crucial metadata as well, like:

- How often each page is updated.

- When they were last changed.

- How important pages are in relation to each other.

However, there are certain best practices for using a sitemap to your maximum advantage.

Ready to learn how to optimize XML sitemaps?

What follows are 13 best practices to get the most SEO bang for your buck.

1. Use Tools & Plugins to Generate Your Sitemap Automatically

Generating a sitemap is easy when you have the right tools, such as auditing software with a built-in XML Sitemap generator or popular plugins like Google XML Sitemaps.

In fact, WordPress websites that are already using Yoast SEO can enable XML Sitemaps directly in the plugin.

Alternatively, you could manually create a sitemap by following the XML sitemap code structure.

Technically, your sitemap doesn’t even need to be in XML format – a text file with a new line separating each URL will suffice.

However, you will need to generate a complete XML sitemap if you want to implement the hreflang attribute, so it’s much easier just to let a tool do the work for you.

Visit the official Google and Bing pages for more information on how to manually set up your sitemap.
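If you do go the manual route, the structure is small enough to script. Here is a minimal sketch using only Python's standard library. The `build_sitemap` helper and the example.com URLs are made up for illustration; only the `urlset`, `url`, `loc`, and `lastmod` elements and the namespace come from the sitemap protocol.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol (sitemaps.org).
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Return sitemap XML for a list of (url, lastmod) pairs.

    lastmod is a W3C date string such as "2020-10-01", or None to omit it.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages, for illustration only.
xml_text = build_sitemap([
    ("https://example.com/", "2020-10-01"),
    ("https://example.com/about", None),
])
print(xml_text)
```

Save the output as sitemap.xml at the root of your site. A plugin does the same job and keeps the file current, which is why the tool route is usually less work.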

2. Submit Your Sitemap to Google

You can submit your sitemap to Google from your Google Search Console.

From your dashboard, click Crawl > Sitemaps > Add Test Sitemap.

Before you click Submit Sitemap, test your sitemap and review the results for errors that may prevent key landing pages from being indexed.

Ideally, you want the number of pages indexed to be the same as the number of pages submitted.

Note that submitting your sitemap tells Google which pages you consider to be high quality and worthy of indexation, but it does not guarantee that they’ll be indexed.

Instead, the benefit of submitting your sitemap is to:

- Help Google understand how your website is laid out.

- Discover errors you can correct to ensure your pages are indexed properly.

3. Prioritize High-Quality Pages in Your Sitemap

When it comes to ranking, overall site quality is a key factor.

If your sitemap directs bots to thousands of low-quality pages, search engines may read that as a sign that your website is not one people will want to visit, even if those pages are necessary for your site (login pages, for example).

Instead, try to direct bots to the most important pages on your site.

Ideally, these pages:

- Are highly optimized.

- Include images and video.

- Have lots of unique content.

- Prompt user engagement through comments and reviews.

4. Isolate Indexation Problems

Google Search Console can be a bit frustrating if it doesn’t index all of your pages because it doesn’t tell you which pages are problematic.

For example, if you submit 20,000 pages and only 15,000 of those are indexed, you won’t be told what the 5,000 “problem pages” are.

This is especially true for large ecommerce websites that have multiple pages for very similar products.

SEO Consultant Michael Cottam has written a useful guide for isolating problematic pages.

He recommends splitting product pages into different XML sitemaps and testing each of them.

Create sitemaps that test specific hypotheses, such as “pages that don’t have product images aren’t getting indexed” or “pages without unique copy aren’t getting indexed.”

When you’ve isolated the main problems, you can either work to fix the problems or set those pages to “noindex,” so they don’t diminish your overall site quality.
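The splitting step can be sketched in a few lines of Python. The `has_image` and `has_unique_copy` fields are assumptions about what your product database records, and the bucket names are arbitrary; this is not taken from Cottam's guide.

```python
from collections import defaultdict


def bucket_pages(pages):
    """Group product URLs into one sitemap bucket per hypothesis."""
    buckets = defaultdict(list)
    for page in pages:
        if not page["has_image"]:
            buckets["no-image"].append(page["url"])
        elif not page["has_unique_copy"]:
            buckets["no-unique-copy"].append(page["url"])
        else:
            # Pages with no suspected problem serve as the comparison group.
            buckets["control"].append(page["url"])
    return dict(buckets)


# Hypothetical product records, for illustration only.
pages = [
    {"url": "https://example.com/p/1", "has_image": True, "has_unique_copy": True},
    {"url": "https://example.com/p/2", "has_image": False, "has_unique_copy": True},
    {"url": "https://example.com/p/3", "has_image": True, "has_unique_copy": False},
]
buckets = bucket_pages(pages)
for name, urls in buckets.items():
    print(f"sitemap-{name}.xml: {len(urls)} URL(s)")
```

Submit each bucket as its own sitemap and compare the indexed counts. If the "no-image" sitemap lags well behind the control group, that hypothesis is worth acting on.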

Since a 2018 update to Index Coverage in Google Search Console, however, the problem pages are listed along with the reasons why Google isn’t indexing them.

5. Include Only Canonical Versions of URLs in Your Sitemap

When you have multiple pages that are very similar, such as product pages for different colors of the same product, you should use the “link rel=canonical” tag to tell Google which page is the “main” page it should crawl and index.

Bots have an easier time discovering key pages if you don’t include pages with canonical URLs pointing at other pages.
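As a rough sketch, the filter looks like this in Python, assuming you already know each page's canonical URL (the `canonical` field is a made-up name for wherever your CMS stores it):

```python
def canonical_only(pages):
    """Keep URLs whose canonical is missing or points back to the page itself."""
    return [
        page["url"]
        for page in pages
        if page.get("canonical") in (None, page["url"])
    ]


# Hypothetical color variants of one product, for illustration only.
pages = [
    {"url": "https://example.com/shirt", "canonical": "https://example.com/shirt"},
    {"url": "https://example.com/shirt?color=red", "canonical": "https://example.com/shirt"},
    {"url": "https://example.com/shirt?color=blue", "canonical": "https://example.com/shirt"},
    {"url": "https://example.com/contact"},
]
print(canonical_only(pages))
```

Only the main shirt page and the contact page survive; the color variants stay out of the sitemap because their canonical points elsewhere.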

6. Use Robots Meta Tag Over Robots.txt Whenever Possible

When you don’t want a page to be indexed, you usually want to use the meta robots “noindex,follow” tag.

This prevents Google from indexing the page but it preserves your link equity, and it’s especially useful for utility pages that are important to your site but shouldn’t be showing up in search results.
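To confirm that a utility page actually carries the tag, you can parse its HTML. The sketch below uses Python's built-in `html.parser`; the `robots_directives` helper and the sample markup are illustrative, not part of any SEO tool.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of robots directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical utility page, for illustration only.
sample = '<html><head><meta name="robots" content="noindex,follow"></head></html>'
print(robots_directives(sample))
```

A page set up as described should report both `noindex` and `follow`.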

The only time you want to use robots.txt to block pages is when you’re eating up your crawl budget.

If you notice that Google is re-crawling and indexing relatively unimportant pages (e.g., individual product pages) at the expense of core pages, you may want to use robots.txt.

7. Create Dynamic XML Sitemaps for Large Sites

It’s nearly impossible to keep up with all of your meta robots tags on huge websites.

Instead, you should set up rules to determine when a page will be included in your XML sitemap and/or changed from noindex to “index, follow.”

You can find detailed instructions on exactly how to create a dynamic XML sitemap but, again, this step is made much easier with the help of a tool that generates dynamic sitemaps for you.
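To give a feel for what such rules look like, here is a minimal Python sketch. The `Page` fields and the 150-word threshold are assumptions chosen for the example, not recommendations; the point is that one rule drives both the meta robots value and sitemap membership, so the two can't drift apart.

```python
from dataclasses import dataclass

MIN_WORDS = 150  # assumed threshold, tune for your own site


@dataclass
class Page:
    url: str
    word_count: int
    in_stock: bool


def is_indexable(page):
    """Single rule deciding whether a page deserves to be indexed."""
    return page.in_stock and page.word_count >= MIN_WORDS


def robots_meta(page):
    """Meta robots value to render in the page template."""
    return "index, follow" if is_indexable(page) else "noindex, follow"


def sitemap_urls(pages):
    """URLs to emit the next time the sitemap is regenerated."""
    return [page.url for page in pages if is_indexable(page)]


# Hypothetical catalog, for illustration only.
catalog = [
    Page("https://example.com/p/1", word_count=400, in_stock=True),
    Page("https://example.com/p/2", word_count=40, in_stock=True),
    Page("https://example.com/p/3", word_count=400, in_stock=False),
]
print(sitemap_urls(catalog))
print([robots_meta(page) for page in catalog])
```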

8. Do Use XML Sitemaps & RSS/Atom Feeds

RSS/Atom feeds notify search engines when you update a page or add fresh content to your website.

Google recommends using both sitemaps and RSS/Atom feeds to help search engines understand which pages should be indexed and updated.

By including only recently updated content in your RSS/Atom feeds you’ll make finding fresh content easier for both search engines and visitors.
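Here is a stripped-down sketch of a feed limited to recent updates, written with Python's standard library. The seven-day window, the feed title, the author name, and the example.com URLs are all assumptions; pick a window that matches how often you publish.

```python
import xml.etree.ElementTree as ET
from datetime import datetime, timedelta, timezone

ATOM_NS = "http://www.w3.org/2005/Atom"


def recent_feed(pages, now, days=7):
    """Build an Atom feed containing only pages updated in the last `days` days."""
    cutoff = now - timedelta(days=days)
    recent = sorted(
        (p for p in pages if p["updated"] >= cutoff),
        key=lambda p: p["updated"],
        reverse=True,  # newest first
    )
    feed = ET.Element("feed", xmlns=ATOM_NS)
    ET.SubElement(feed, "title").text = "Recently updated pages"
    ET.SubElement(feed, "id").text = "https://example.com/"
    ET.SubElement(feed, "updated").text = now.isoformat()
    ET.SubElement(ET.SubElement(feed, "author"), "name").text = "Example Site"
    for page in recent:
        entry = ET.SubElement(feed, "entry")
        ET.SubElement(entry, "title").text = page["title"]
        ET.SubElement(entry, "id").text = page["url"]
        ET.SubElement(entry, "link", href=page["url"])
        ET.SubElement(entry, "updated").text = page["updated"].isoformat()
    return ET.tostring(feed, encoding="unicode")


# Hypothetical pages, for illustration only.
now = datetime(2020, 10, 15, tzinfo=timezone.utc)
pages = [
    {"title": "New post", "url": "https://example.com/new",
     "updated": datetime(2020, 10, 14, tzinfo=timezone.utc)},
    {"title": "Old post", "url": "https://example.com/old",
     "updated": datetime(2020, 1, 1, tzinfo=timezone.utc)},
]
print(recent_feed(pages, now))
```

The old post stays in your full XML sitemap; the feed carries only what changed recently.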

9. Update Modification Times Only When You Make Substantial Changes

Don’t try to trick search engines into re-indexing pages by updating your modification time without making any substantial changes to your page.

In the past, I’ve talked at length about the potential dangers of risky SEO.

I won’t reiterate all my points here, but suffice it to say that Google may start removing your date stamps if they’re constantly updated without providing new value.
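One way to enforce this in a publishing pipeline is to compare the old and new page text and touch the modification time only when enough has changed. The sketch below uses Python's `difflib`; the 10% threshold is an arbitrary assumption, and what counts as "substantial" is ultimately your call, not something Google has defined.

```python
from difflib import SequenceMatcher

CHANGE_THRESHOLD = 0.10  # assumed: at least 10% of the words must differ


def next_lastmod(old_text, new_text, old_lastmod, today):
    """Return the lastmod value to publish after an edit."""
    similarity = SequenceMatcher(None, old_text.split(), new_text.split()).ratio()
    if 1.0 - similarity >= CHANGE_THRESHOLD:
        return today  # substantial change: bump the date
    return old_lastmod  # typo fix or similar: keep the old date


# Hypothetical 20-word page with a one-word correction.
old = " ".join(f"word{i}" for i in range(20))
words = old.split()
words[5] = "typo"
minor_edit = " ".join(words)

print(next_lastmod(old, minor_edit, "2020-01-01", "2020-10-01"))
print(next_lastmod(old, "completely different text here", "2020-01-01", "2020-10-01"))
```

The one-word fix keeps the original date, while the full rewrite gets the new one.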

10. Don’t Include ‘noindex’ URLs in Your Sitemap

Speaking of wasted crawl budget, if search engine robots aren’t allowed to index certain pages, then they have no business being in your sitemap.

When you submit a sitemap that includes blocked and “noindex” pages, you’re simultaneously telling Google “it’s really important that you index this page” and “you’re not allowed to index this page.”

Lack of consistency is a common mistake.

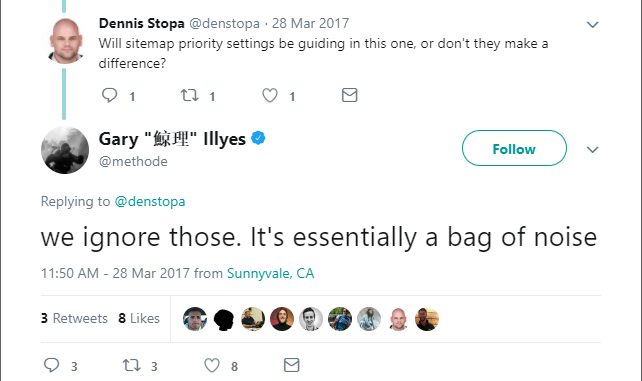
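You can catch these conflicts before you submit. The sketch below checks sitemap URLs against a robots.txt file with Python's built-in `urllib.robotparser` and against a set of pages you know are "noindex". The `conflicting_urls` helper, the sample rules, and the URLs are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser


def conflicting_urls(sitemap_urls, robots_txt, noindex_urls, user_agent="Googlebot"):
    """Return sitemap URLs that are blocked by robots.txt or marked noindex."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [
        url
        for url in sitemap_urls
        if url in noindex_urls or not parser.can_fetch(user_agent, url)
    ]


# Hypothetical inputs, for illustration only.
robots_txt = """\
User-agent: *
Disallow: /cart/
"""
sitemap_urls = [
    "https://example.com/shoes",
    "https://example.com/cart/checkout",
    "https://example.com/login",
]
noindex_urls = {"https://example.com/login"}

print(conflicting_urls(sitemap_urls, robots_txt, noindex_urls))
```

Anything this returns should either come out of the sitemap or have its block lifted.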

11. Don’t Worry Too Much About Priority Settings

Some sitemaps have a “Priority” column that ostensibly tells search engines which pages are most important.

Whether this feature actually works, however, has long been debated.

Back in 2017, Google’s Gary Illyes tweeted that Googlebot ignores priority settings while crawling.

12. Don’t Let Your Sitemap Get Too Big

The smaller your sitemap, the less strain you’re putting on your server.

Google and Bing both increased the maximum accepted sitemap file size from 10 MB to 50 MB (uncompressed), with a limit of 50,000 URLs per sitemap.

While this is more than enough for most sites, some webmasters will need to split their pages into two or more sitemaps.

For example, if you’re running an online shop with 200,000 pages, you’ll need at least four separate sitemaps (200,000 ÷ 50,000 = 4) to handle all that.

Here’s advice from Google’s John Mueller on how to add sitemaps for more than 50,000 URLs. TLDR: Create a sitemap file for sitemaps.
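As a sketch of that approach in Python: split the URL list into chunks of at most 50,000, write one sitemap per chunk, and list those files in a sitemap index. The file names and example.com URLs are made up; the `sitemapindex`, `sitemap`, and `loc` elements come from the sitemap protocol.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS = 50_000  # per-sitemap URL limit


def chunk_urls(urls, size=MAX_URLS):
    """Split a URL list into consecutive chunks of at most `size` URLs."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_locs):
    """Return sitemap index XML that points at each child sitemap file."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for loc in sitemap_locs:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = loc
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical shop with 200,000 product pages.
urls = [f"https://example.com/product/{i}" for i in range(200_000)]
chunks = chunk_urls(urls)

# Hypothetical file names for the child sitemaps.
locs = [f"https://example.com/sitemap-{n}.xml" for n in range(1, len(chunks) + 1)]
index_xml = build_sitemap_index(locs)

print(len(chunks))
print(index_xml)
```

You then submit only the index file; the search engine follows it to each child sitemap.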

13. Don’t Create a Sitemap If You Don’t Have to

Remember that not every website needs a sitemap.

Google can find and index your pages pretty accurately.

According to Mueller, a sitemap doesn’t bring the same SEO value to every site.

Basically, if your website is a portfolio or one-pager, or if it’s an organization website that you rarely update, a sitemap is not really needed.

However, if you publish a lot of new content and want it indexed as soon as possible, or if you have hundreds of thousands of pages (running an ecommerce website, for instance), then a sitemap is still a great way to give information directly to Google.

Image Credits

All screenshots taken by author, October 2020