Google announced a major change to how nofollow links are counted. Previously, nofollow was treated as a directive, meaning Google obeyed the nofollow, period. Starting today, Google is treating nofollow as a hint for ranking purposes. This means that Google will decide whether or not to use the link for ranking. This change impacts on-page SEO, content marketing, link building and link spam.

What is Nofollow?

Nofollow is an HTML attribute that is added to links. It tells Google that a link is not trusted. It was originally designed to combat blog comment spam. It evolved for use on advertising links and for user generated links that couldn’t be 100% trusted.
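
As a rough illustration, a nofollow link is an ordinary anchor tag with `nofollow` among the space-separated values of its `rel` attribute. The Python sketch below uses the standard library's `html.parser` to list which links in a snippet carry it. The sample HTML, the example.com URLs, and the `NofollowFinder` and `find_links` names are assumptions made for this example; nothing here reflects how Google itself parses pages.

```python
from html.parser import HTMLParser


class NofollowFinder(HTMLParser):
    """Collects (href, is_nofollow) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attr_map = dict(attrs)
        href = attr_map.get("href")
        if href is None:
            return
        # rel holds space-separated tokens; compare them case-insensitively.
        rel_tokens = (attr_map.get("rel") or "").lower().split()
        self.links.append((href, "nofollow" in rel_tokens))


def find_links(html):
    """Return a list of (href, is_nofollow) tuples found in the HTML string."""
    finder = NofollowFinder()
    finder.feed(html)
    return finder.links


# Hypothetical sample markup: one ordinary link, one nofollowed link.
SAMPLE_HTML = """
<p>A normal link: <a href="https://example.com/a">trusted</a></p>
<p>A comment link: <a href="https://example.com/b" rel="nofollow">untrusted</a></p>
"""

for href, is_nofollow in find_links(SAMPLE_HTML):
    print(href, "nofollow" if is_nofollow else "followed")
```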

Google Says It Will Treat Nofollow as a Hint

This is Google’s official announcement of the change in nofollow links:

“When nofollow was introduced, Google would not count any link marked this way as a signal to use within our search algorithms. This has now changed.

All the link attributes — sponsored, UGC and nofollow — are treated as hints about which links to consider or exclude within Search.

We’ll use these hints — along with other signals — as a way to better understand how to appropriately analyze and use links within our systems.”

What’s In it For Publishers?

There is no discernible upside for publishers to introduce these new nofollow attributes. In fact, there are many reasons not to add the new link attributes.

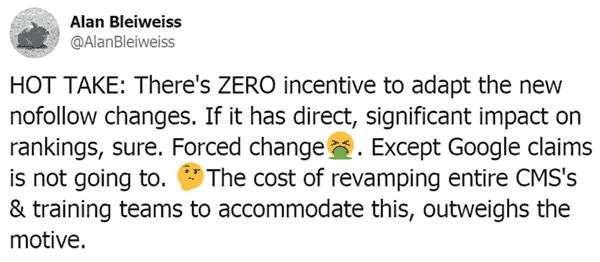

Forensic SEO audit consultant Alan Bleiweiss tweeted:

“There’s ZERO incentive to adapt the new nofollow changes. If it has direct, significant impact on rankings, sure. Forced change. Except Google claims is not going to.

The cost of revamping entire CMS’s & training teams to accommodate this, outweighs the motive.”

Google Treating the Nofollow Attribute as a Hint Gives Zero Benefit to Publishers

Alan raises three important points:

- No incentive for publishers to use the new link attributes

- No benefit to publishers in the form of ranking boost

- Cost of implementing change outweighs any perceived benefit (which at this point is zero)

Digital Marketing Community Still Absorbing Changes

Mark Traphagen, VP of Content Strategy for AimClear, commented on how the search marketing community is trying to understand the changes:

“Because Google not only redefined how it uses nofollow, but also added two new link attributes (sponsored and UGC), I can understand confusion among SEOs about what needs to be done, if anything.”

Nofollow Hint Change May Help Sites Get Link Equity they Deserve

Link building expert Julie Joyce, the founder of Link Fish Media, brought up an interesting viewpoint.

It’s well known that some websites automatically add nofollow to all of their outbound links. This creates what some say is an unfair situation, because many of those links should count. Google’s change opens up the possibility of websites obtaining the link equity and ranking boost they deserve.

Here is how Julie explained it:

“…some sites automatically nofollow all links and that’s just their policy but it’s not good to just refuse to endorse what you’re linking out to.”

I asked Cyrus Shepard for his opinion and he offered his typically unique and nuanced view:

“I think this is good news for link builders, and the link graph overall, but a potential headache for publishers.

Even though most links still won’t “count”, this won’t stop folks from rushing to create comments, UGC, and Wikipedia edits with the hope that they might count, no matter how slim the possibility.

Rumors and misinformation will abound. Folks are already confused.

Good for Google for doing this, but the industry is going to need more clarification before this thing shakes out.”

Google Responds to Criticism

With HTTPS, Google pushed adoption by giving a ranking boost to sites that adopted it, which put sites that didn’t at a relative disadvantage.

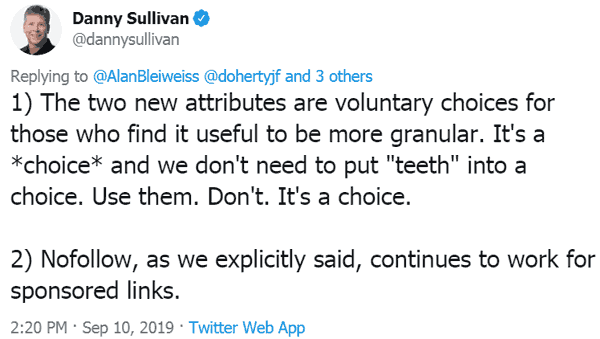

Google’s SearchLiaison, Danny Sullivan, responded on Twitter by saying that Google will not force anyone to use the new nofollow link attributes.

“The two new attributes are voluntary choices for those who find it useful to be more granular. It’s a *choice* and we don’t need to put “teeth” into a choice. Use them. Don’t. It’s a choice.”

What’s in it for Google?

Google introduced two new link attributes alongside nofollow. One, rel="ugc", indicates that a link comes from user generated content (UGC). The other, rel="sponsored", indicates that a link is part of sponsored content.

Both of those attributes benefit Google because they can help Google with link calculations.

The sponsored attribute gives Google a strong hint not to pass any PageRank through the link. It also contributes to Google’s understanding of the web page itself: that the page sells sponsored links.
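
To make the three attributes concrete, here is a minimal Python sketch that sorts a link's `rel` value into the hint types Google named. The `link_hints` and `describe` function names are hypothetical, and the precedence used when several values are combined (sponsored, then ugc, then nofollow) is an assumption chosen only for readability; Google has not published how it weighs combined values.

```python
# The three rel values Google says it treats as hints.
KNOWN_HINTS = {"nofollow", "sponsored", "ugc"}


def link_hints(rel_value):
    """Return the set of hint tokens present in a rel attribute value."""
    tokens = set(rel_value.lower().split())
    return tokens & KNOWN_HINTS


def describe(rel_value):
    """Give a one-line, human-readable label for a rel attribute value."""
    hints = link_hints(rel_value)
    if not hints:
        return "no hint: ordinary link"
    # Assumed precedence for display purposes only, not Google's logic.
    if "sponsored" in hints:
        return "sponsored: paid or advertising link"
    if "ugc" in hints:
        return "ugc: user generated content"
    return "nofollow: generic untrusted link"


for rel in ["", "nofollow", "ugc", "sponsored", "ugc nofollow", "noopener"]:
    print(repr(rel), "->", describe(rel))
```

Values unrelated to the hints, such as `noopener`, are ignored, so a link carrying only those is reported as an ordinary link.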

Mark Traphagen shared how the sponsored link nofollow attribute might benefit Google:

“I guess a “sponsor” link more directly expresses why the link might not pass authority than “nofollow.” …It seems clear that “nofollow” was being used for too many diverse purposes.”

Why Would Google Trust Nofollow UGC Links?

Google posted that UGC nofollow links, as of today (September 10, 2019), will be treated as hints.

“All the link attributes, sponsored, ugc and nofollow, now work today as hints for us to incorporate for ranking purposes.”

The UGC nofollow gives Google the hint that these links might be useful for ranking purposes. So Google may decide to pass ranking signals for forum nofollow links, for example.

Forums are an expert source of information because people share their personal experiences with products, services and advice in the form of answers.

The UGC nofollow attribute can provide a strong hint that a link posted in a forum may be useful for ranking purposes because it’s an honest and expert endorsement.

A link from a forum can be considered a trustworthy link.

Google Search Quality Raters Guidelines (QRG) makes reference to the expertise of forum content:

“…there are high E-A-T pages and websites of all types, even gossip websites, fashion websites, humor websites, forum and Q&A pages, etc. In fact, some types of information are found almost exclusively on forums and discussions, where a community of experts can provide valuable perspectives on specific topics.”

As you can see from the Search Quality Raters Guidelines, forum content can be considered a valuable perspective.

Given the decline of blogging, forums are one of the last sources of honest, editorially given links on the Internet, perhaps even more meaningful than social media links, where people may tend to link for viral reasons like cultural issues and politics.

It may be reasonable to assume that links from forums could also be considered valuable.

By adding the UGC nofollow link, a forum owner would be helping Google make that determination.

But there is no incentive for the forum owner to do that. In any case, Google can probably already tell that the content is on a forum and may choose to ignore the regular nofollow attribute that accompanies outbound forum links added by users.
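
For a sense of what the change would involve on the publisher side, here is a hedged sketch of how forum software might render a user-submitted link with the ugc hint. The `render_user_link` function and its `keep_nofollow` parameter are hypothetical and not taken from any real forum platform. Combining `ugc` with `nofollow` in one `rel` value is allowed, and keeping `nofollow` preserves the older behavior for systems that don't recognize `ugc`.

```python
from html import escape


def render_user_link(url, text, keep_nofollow=True):
    """Render a user-submitted link with the ugc hint (and optionally nofollow).

    This sketch only escapes the values for safe HTML output. A real forum
    would also need to validate the URL scheme (for example, reject
    javascript: URLs), which is out of scope here.
    """
    rel = "ugc nofollow" if keep_nofollow else "ugc"
    return '<a href="{}" rel="{}">{}</a>'.format(
        escape(url, quote=True), rel, escape(text)
    )


# Hypothetical forum post link.
print(render_user_link("https://example.com/review?id=1&ref=forum", "my review"))
```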

Why Would Google Change Nofollow to a Hint?

Many people have said that there are fewer opportunities for links because nobody’s creating links anymore.

1. Forum traffic is down. There are fewer people on forums creating links.

2. Blogging is down. There are fewer bloggers publishing and creating links.

3. Video and audio content does not generate links.

It could be that the link signal has become progressively weaker. In fact, Google confirmed that the nofollow hint change was about the link signal.

How Will Google Use Wikipedia Nofollow Links?

Wikipedia links could be viewed as high value links. In general they are seen as high quality because of Wikipedia’s tight editorial standards.

Adding Wikipedia links to Google’s ranking calculations could introduce a new way to improve the link signal, which could then trickle down and make the link calculations stronger.

Andrea Volpini of WordLift, an AI-powered SEO company for WordPress, said this about Wikipedia links:

“Most of today’s NLP/NLU (Natural Language Processing/Natural Language Understanding) is based on models trained on Wikipedia, links included.

Some of the NLP we use is also dependent on links found on Wikipedia’s article. The value of these links is strategic.

Here is an example of how Wikipedia links can be used to extract knowledge patterns: Encyclopedic Knowledge Patterns from Wikipedia Links”

Wikipedia Nofollow Links for Ranking Purposes?

I asked Bill Slawski, Director of SEO Research at Go Fish Digital, about the possibility of Google using Wikipedia links for ranking purposes:

“I hope not. They already do well enough. It wouldn’t be good seeing them spammed more.”

The Search Marketing Community Responds

Change May Incentivize Commerce in Nofollow Links

When I discussed this with Bill Slawski, he brought up a good point about how this change may increase the business of selling nofollow links.

“I don’t think it’s a good idea. I’m afraid that comment spammers will see an announcement like that as an invitation to spam.”

That’s a good insight. I’m a consultant and my business site is constantly under attack by spammers. I also publish several forums and informational sites and they too are under constant attack by spammers.

Nofollow links are already a commodity that is sold by people who insist they help sites rank. It’s possible that this change may fuel an increase in the commerce of nofollow spam links, especially blog, article, Wikipedia and forum spam.

Some Say Nofollow Hint Update May Lead to More Spam

UK digital marketing expert Gordon Campbell shared a similar opinion to Bill.

“If you have worked with large sites, especially eCommerce sites with lots of filtered pages, you’ll know that Google can be pretty bad at recognising the canonical URL.

If this proves to be the same with nofollow links, and Google aren’t accurate when it comes to understanding which nofollow links pass link equity, I suspect this will give a green light to blackhat SEOs and we will see a sharp increase in the use of techniques such as comment and forum spam.”

Nofollow Hints Takeaway: SEO Will Be Impacted

Google’s announcement changes how links for ranking purposes are calculated. The change introduces fairness because links that were arbitrarily nofollowed may now count. But the change may also introduce an increase in link spam. It’s certainly an opportunity for some to begin selling nofollow links.

Read Google’s official announcement

Evolving “nofollow” – new ways to identify the nature of links