Keeping up with Google ranking factors has never been more challenging.

Google is updating its algorithm at unprecedented rates.

Something that didn’t impact search rankings yesterday might be a significant ranking factor today.

The opposite is also true. Ranking factors are not set in stone.

On top of that, the amount of misinformation you have to sift through makes it difficult to know what to believe.

You’ll often run into articles where the writer backs up their ranking factor claims with opinion or anecdotal evidence.

That’s why Search Engine Journal’s editorial team tackled and analyzed 88 of the most talked-about potential ranking factors in their Google Ranking Factors: Fact or Fiction guide (free to download).

And in this article, we’re going to take another approach, going right to the source – Google.

We’ll explore the major ranking factors Google has confirmed, and explain what they mean for search and our SEO efforts.

Before we get to that, I have to address what I believe is the biggest myth about ranking factors.

Myth: Google Has 200 Ranking Factors

OK, I can’t really prove without a doubt that there aren’t actually 200 ranking factors, but let’s just consider one thing.

The “200” figure seems to have originated around 2009, when Google’s Matt Cutts mentioned there were “over 200 variables” in the Google algorithm.

Remember, this was over a decade ago. That’s before:

- The HTTPS boost.

- The mobile-first index.

- Hummingbird.

- A whole litany of updates and changes, including the introduction of machine learning into the algorithm with RankBrain.

Things have changed quite a bit.

Even if there was a nice round 200 ranking factors back then, it’s safe to assume Google has added at least one or two to the mix since.

So – the first legit fact we’ve covered here is that there aren’t 200 ranking factors.

Google has come a long way since then.

Something else we need to consider is that most of the more than 200 factors have a variety of states or values that apply.

Ranking signals aren’t all in an on-or-off, good-or-bad state (though some, like whether a site is served over HTTPS, may be).

Further, some ranking factors may rely on others to trigger.

For example, a spam factor may not kick in until a threshold of links has been acquired in a specific period. The signal is absent from the algorithm until it’s triggered, so the question could be posed: Is it a factor all the time or isn’t it?
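To make that idea concrete, here is a toy Python sketch of a signal that stays dormant until a link-velocity threshold is crossed within a time window. The function name, the 30-day window, and the 500-link threshold are hypothetical values chosen purely for illustration; Google has not published how (or whether) any such signal works.

```python
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical values for illustration only; Google has not published any such numbers.
WINDOW_DAYS = 30
LINK_THRESHOLD = 500


def link_velocity_signal(link_dates: List[date], today: date) -> Optional[float]:
    """Toy threshold-triggered signal: None while dormant, a strength in [0, 1] once triggered."""
    window_start = today - timedelta(days=WINDOW_DAYS)
    # Count only links acquired within the trailing window.
    recent = sum(1 for d in link_dates if window_start < d <= today)
    if recent < LINK_THRESHOLD:
        # Below the threshold, the signal contributes nothing at all.
        return None
    # Once triggered, the signal is not simply on/off: its strength grows
    # with how far past the threshold the site went, capped at 1.0.
    return min(1.0, (recent - LINK_THRESHOLD) / LINK_THRESHOLD)


today = date(2021, 9, 1)
print(link_velocity_signal([today] * 100, today))  # None
print(link_velocity_signal([today - timedelta(days=1)] * 750, today))  # 0.5
```

The `None` return is the point of the example: for most sites this hypothetical signal never enters the calculation, which is exactly why it’s hard to say whether it is “a factor” at all.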

But let’s leave that discussion to the philosophers, shall we?

With this understanding of the myth of the 200 factors and how they’re applied (or not), let’s carry on to other known factors.

While I’ve noted above that the sheer number of factors can’t be readily understood, that doesn’t mean they’re unknowable.

We know the sun exists, but there’s a lot we don’t understand about how it functions.

Nonetheless, knowing that it exists, and understanding some of its core outputs, has proved quite helpful over the years. Search ranking factors are no different.

So, while we can’t necessarily understand the impact or nuances of how their calculations work, or how they might influence other aspects of the overall algorithms, some factors are known, and knowing them confirms that an area is worth working on.

As far as confirmed facts go, here is what we know for sure to be ranking factors:

1. Content As A Google Ranking Factor

Content is the foundation of Google Search. It’s the very reason search engines were invented – to make web content easier to find.

Without content, there is no Google. It goes without saying that content is a major ranking factor.

This is confirmed in Google’s “How Search Works” resource, which explains how its algorithms work in easy-to-understand language:

“… algorithms analyze the content of webpages to assess whether the page contains information that might be relevant to what you are looking for.”

There’s the proof, but it doesn’t mean go out and create content just for the sake of having more URLs for Google to index.

Google cares about two things when it comes to ranking content: quality and relevance.

In other words, is the content well written and mostly free of spelling and grammar errors?

If yes, does the content relate to the searcher’s query?

Tick those boxes and you’ll have a much greater chance of achieving high rankings in Google. Its algorithms can tell the difference between high-value content and something that was thrown together without much effort.

2. Core Web Vitals As A Google Ranking Factor

In the hierarchy of ranking factors, website usability almost goes hand in hand with content.

I say “almost” because content relevance will supersede any other ranking factor in this list. That said, Google would much prefer to send searchers to pages that offer an exceptional user experience.

One way Google measures page experience is by analyzing three metrics known as the Core Web Vitals. These were introduced in 2020 and became a ranking factor in 2021.

An official Google blog post states:

“Today we’re announcing that the page experience signals in ranking will roll out in May 2021. The new page experience signals combine Core Web Vitals with our existing search signals including mobile-friendliness, HTTPS-security, and intrusive interstitial guidelines.”

Google plans to update the Core Web Vitals metrics every year based on what it considers essential to providing a good user experience on the web.

At the time Core Web Vitals were integrated into search rankings, they included:

- Largest Contentful Paint (LCP): Measures how long it takes to load the largest image or block of text in the viewport.

- First Input Delay (FID): Measures the delay between a user’s first interaction with the page (such as a click or tap) and the moment the browser can begin responding to it.

- Cumulative Layout Shift (CLS): Measures visual stability to determine whether there is a major shift in the content on-screen while elements are loading.
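Each of these metrics is judged against fixed thresholds that Google publishes on web.dev, assessed at the 75th percentile of page loads. Below is a minimal Python sketch of that rating logic. The function names are hypothetical, and the simple nearest-rank percentile is an assumption; Google’s own aggregation in the Chrome UX Report may differ. Note that Google has since replaced FID with Interaction to Next Paint (INP) as a Core Web Vital, in March 2024.

```python
import math
from typing import List

# "Good" and "needs improvement" upper bounds as published on web.dev.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # seconds
    "FID": (100, 300),   # milliseconds
    "CLS": (0.1, 0.25),  # unitless layout-shift score
}


def percentile_75(samples: List[float]) -> float:
    """Nearest-rank 75th percentile (an assumed, simplified aggregation)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    """Bucket a metric value as good, needs improvement, or poor."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"


lcp_samples = [1.8, 2.1, 2.4, 3.2]  # hypothetical field measurements, in seconds
print(rate("LCP", percentile_75(lcp_samples)))  # good
print(rate("CLS", 0.3))  # poor
```

Rating the 75th percentile rather than the average means a page only passes when most visits, not just the typical one, meet the threshold.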

For more on how to measure these metrics, see: How You Can Measure Core Web Vitals.

3. Site Speed As A Google Ranking Factor

Thankfully, you can put this one in the “fact” category. Google announced it as a ranking factor back in 2010 when they stated:

“You may have heard that here at Google we’re obsessed with speed, in our products and on the web. As part of that effort, today we’re including a new signal in our search ranking algorithms: site speed.”

Interestingly, it wasn’t until July 2018 that Google started using it as a ranking factor for mobile searches.

Presumably, Google relied on desktop page speed until then, and the rollout of the mobile-first index led it to add speed as a factor for mobile as well.

4. Mobile-Friendly As A Google Ranking Factor

Having a mobile-optimized site is, to say the least, a ranking factor.

The only proof I think I need to include here is the rollout of the mobile-first index.

5. Title Tags As A Google Ranking Factor

It comes as no surprise that title tags are a confirmed ranking factor.

We all knew it, but it makes the list of facts.

Google’s John Mueller confirmed it in a hangout a couple of years back.

Make sure you read Google is Rewriting Title Tags in SERPs for more recent developments, too.

6. Links As A Google Ranking Factor

Links have been confirmed as a ranking factor many times over the years.

Examples range from Matt Cutts mentioning in 2014 that links were likely to be around for many more years to their placement as a top-three ranking signal shortly after RankBrain rolled out.

At some point in the future, the link calculations may be replaced by entity reference calculations, but that day is not today. At that time, the “fact” of links will simply become a “fact” of entities.

7. Anchor Text As A Google Ranking Factor

I won’t be including aspects of links that are “a given” as facts, such as a link from an authority site being worth more than a link from a low-value directory or a new site. These are discussed in the link discussions as a whole and confirmed there.

One signal that needs to be discussed, however, is anchor text.

Whether anchor text is used as a signal has been debated by some, and certainly the overuse of it can be detrimental (which in itself should reinforce that it’s used as a signal).

However, what can’t be ignored is that anchor text is still mentioned in Google’s SEO Starter Guide – and it has been for years.

Also, in an Office Hours Hangout, Mueller advised using internal anchor text that reinforces the topic of the page, thus confirming it as a signal.

Google recommends strategic use of anchor text. That means you shouldn’t link to pages using generic phrases such as “click here” or “see this page.”

Anchor text should describe the page that’s being linked to so users have some idea of what they’re about to land on. This also gives Google more context about a page, which can assist with rankings.

However, over-optimized anchor text can be the death of otherwise great content.

If a majority of links pointing to your site have keyword-rich anchor text, Google is going to recognize that as a spam signal and may demote or deindex your site’s content.
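Since no official cutoff for “too much” keyword-rich anchor text is published, the practical move is to audit your own link profile. Here is a hypothetical Python helper that reports what share of anchors exactly match a target keyword and what share are generic phrases like “click here.” The phrase list and function name are assumptions, and the output is a diagnostic for your own review, not a reproduction of how Google detects over-optimization.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical list of generic phrases to flag; extend it for your own audit.
GENERIC_ANCHORS = {"click here", "see this page", "read more", "here"}


def anchor_report(anchors: List[str], target_keyword: str) -> Dict[str, float]:
    """Share of anchors that exactly match a keyword, and share that are generic phrases."""
    normalized = [a.strip().lower() for a in anchors]
    total = len(normalized)
    if total == 0:
        return {"exact_match_share": 0.0, "generic_share": 0.0}
    counts = Counter(normalized)  # missing keys count as 0
    exact = counts[target_keyword.strip().lower()]
    generic = sum(counts[phrase] for phrase in GENERIC_ANCHORS)
    return {"exact_match_share": exact / total, "generic_share": generic / total}


report = anchor_report(
    ["best running shoes", "Best Running Shoes ", "click here", "Acme Shoes"],
    "best running shoes",
)
print(report)  # {'exact_match_share': 0.5, 'generic_share': 0.25}
```

A high exact-match share points to the over-optimization risk described above, while a high generic share points to missed context for users and Google.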

Learn more about anchor text as a ranking signal in Google Ranking Factors: Fact or Fiction.

8. User Intent/Behavior As A Google Ranking Factor

User intent is more a grouping of signals than a signal itself, but we let that slide a bit above with links, and we’ll have to do the same here.

The reason for grouping them together is that they are factual as a group, but the individual signals within that group are, for the most part, unconfirmed and in some cases unknowable.

For evidence on user intent as a signal, one simply needs to consider RankBrain.

RankBrain is often considered a signal. Personally, I consider it more of an algorithm that interprets signals, but that’s a semantic discussion.

What Google has said of RankBrain is:

“If RankBrain sees a word or phrase it isn’t familiar with, the machine can make a guess as to what words or phrases might have a similar meaning and filter the result accordingly, making it more effective at handling never-before-seen search queries.”

So, its purpose is not to act as a signal as we generally think of them, but rather to act as an interpreter between the search engine and the searcher, passing to the search engine the meaning of a query where the keywords themselves leave some ambiguity.

Either way, user intent is being factored in.

As for specific aspects of user behavior, such as CTR or pogo-sticking (which Google has confirmed is not a direct signal), Google has not confirmed any of them as factors, to the best of my knowledge.

This is not to say that they’re not used, but we’re talking about facts in this article, not scenarios we’re 99% sure of or that Google holds patents around, as those aren’t facts.

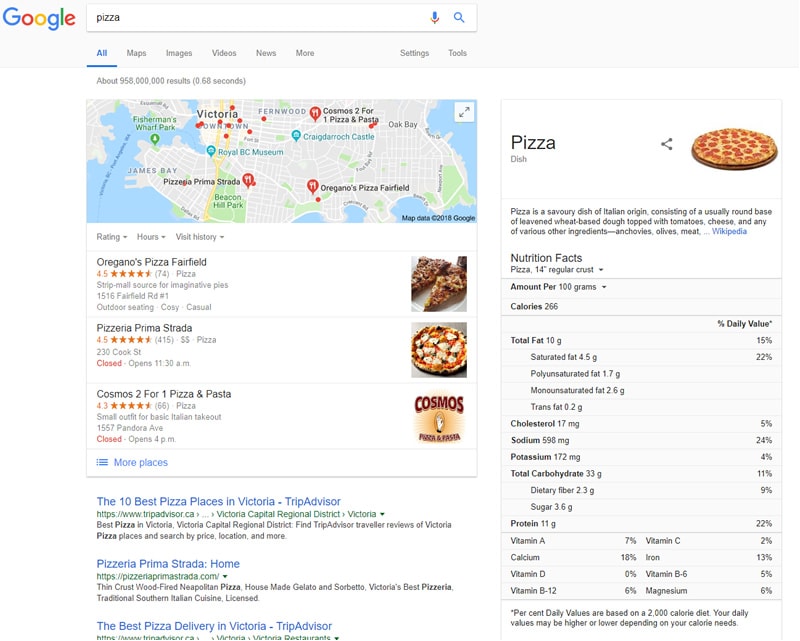

9. Geolocation As A Google Ranking Factor

I could link to a wide array of discussions and statements about geolocation and the idea that where you are in space and time impacts your results.

Or I could simply post the following image of a search I performed while hungry.

Screenshot from search for [pizza], Google, September 2021

Enough said.

10. HTTPS As A Google Ranking Factor

It’s not a large factor, but it’s an easy one to confirm, as Google did that for us on August 6, 2014, when it wrote on its blog:

“… we’re starting to use HTTPS as a ranking signal. For now, it’s only a very lightweight signal – affecting fewer than 1% of global queries, and carrying less weight than other signals such as high-quality content – while we give webmasters time to switch to HTTPS. But over time, we may decide to strengthen it …”

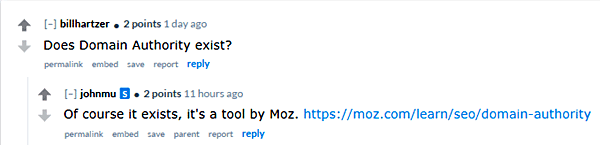

11. Domain Authority As A Google Ranking Factor

If you follow the news and Googley statements as closely as I do, you might be questioning the accuracy of this whole article right now.

After all, Mueller stated on Reddit:

Screenshot from Reddit.com, September 2021

So why would I list domain authority as a fact when clearly Google is implying that it is not?

Because they’re calling a tom-A-to a tom-AH-to.

What Bill Hartzer was clearly asking about was not the Moz metric but the idea that a domain carries authority and, with it, the strength to rank its pages.

Mueller dodged the question by referencing a Moz metric, and his answer was taken as a rebuttal of the idea as a whole.

In a Google Hangout, however, Mueller states:

“So that’s something where there’s a bit of both when it comes to ranking. It’s the pages individually, but also the site overall.”

That quote refers to Google assessing pages on their own to determine where to place them in search, and also assessing the website as a whole.

Google does reward websites that have a history of being authoritative sources that consistently produce outstanding content.

I get that Mueller was trying to be a bit tongue-in-cheek in the Reddit AMA, and I don’t blame him for having fun with his reply.

It’s up to us to dig into the facts and thankfully they’re available if you do the research.

Now you know yet another fact about Google’s “200” ranking factors.

Them’s The Facts

In parting, let’s keep in mind that anything you think you know about the number of ranking factors is becoming more and more invalid with each step toward full integration of machine learning into Google’s algorithms.

It may be that, at present, machine learning only adjusts factors programmed by engineers, but it can’t be long before it is given the task of finding ranking factors that aren’t yet considered and weighing them in.

Basically, it would look for common traits of known-good (or known-bad) results and begin using them in its calculations.

At that time the number of factors will not just be unknown, but unknowable.

And that’s a fact.

Featured Image: Irina Strelnikova/Shutterstock