Within Google Search Console, hidden gems abound.

But five of them in particular will make your SEO life easier.

Most webmasters are familiar with Google Search Console (GSC).

It is a toolbox created by Google to help uncover technical issues.

If you are considering buying another analytics tool, I highly recommend you explore GSC first.

You may not need another tool at all if the issues on your site are limited to the kinds GSC reports.

Admittedly, it is not the most intuitive tool.

And while several features of the old version, such as the robots.txt tester and crawl stats, have not been carried over to the new version, it is still useful.

I don’t know about you, but I always used the robots.txt tester and the crawl statistics tools.

Not quite as useful as it could be, but still good.

Let’s take a tour of five hidden gems in GSC that may not be obvious from the outset.

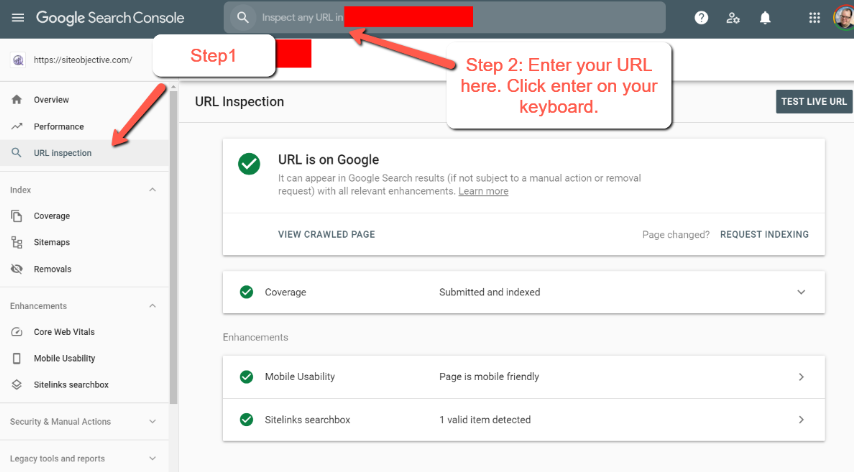

1. URL Inspection Tool

This is GSC’s answer to the old Google Webmaster Tools’ “Fetch as Googlebot”.

It performs the same function and allows you to submit your URLs for indexing.

Are you tired of waiting for Google to come back and crawl your site after an update?

Enter GSC’s URL Inspection Tool.

This little gem lets you ask Google to recrawl a page on demand.

It also lets you see any page on your site just as Google sees it.

It’s a great tool to find problematic pages.

For example, if your site has been hacked and is being used to display content from another website (a form of cloaking, not to be confused with iframes), the URL Inspection tool will let you see the page as Google sees it and reveal indexation and other issues.

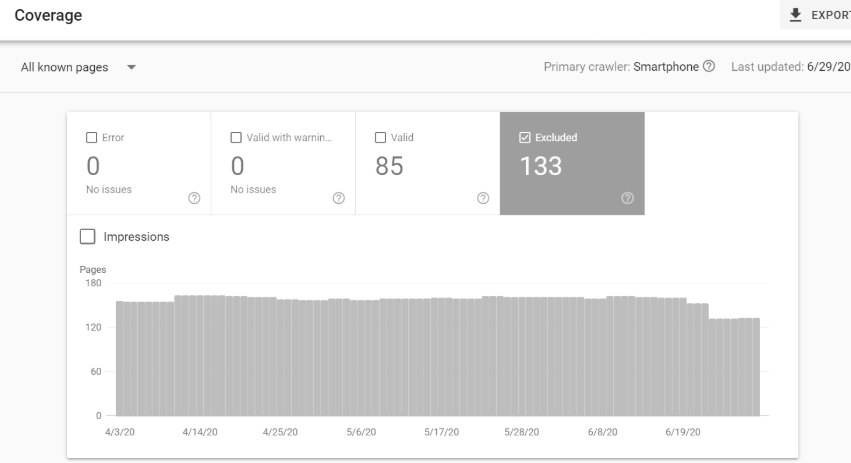

2. Find Obscure URL Errors Like Soft 404s

The Coverage report helps you uncover pages with errors, but did you know that it reveals more obscure errors when you dig deeper?

Yep.

You can find soft 404 errors that are generated on your site.

These errors occur when a page serves blank content but still returns a 200 OK status code.

Because the status code looks normal, they are not easy to spot unless GSC labels them.

Otherwise, you would have to visit each page yourself to find the ones being served this way.
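To make the idea concrete, here is a minimal Python sketch of the kind of check that catches soft 404s: a page that returns 200 OK but is blank, or is thin and worded like an error message, gets flagged. The function name, the 50-word threshold, and the phrase list are hypothetical choices for illustration; this is not how Google itself classifies soft 404s.

```python
from html.parser import HTMLParser

# Hypothetical values chosen for illustration; tune them for your own site.
ERROR_PHRASES = ("page not found", "no longer available", "no results found")
MIN_WORDS = 50


class VisibleTextParser(HTMLParser):
    """Collects text a visitor would see, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self.skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self.skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self.skip_depth:
            self.skip_depth -= 1

    def handle_data(self, data):
        if not self.skip_depth:
            self.chunks.append(data)


def looks_like_soft_404(status_code: int, html: str) -> bool:
    """Heuristic: a 200 OK page that is blank, or thin and worded like an error page."""
    if status_code != 200:
        return False  # a real 404 or 410 is a "hard" error, not a soft 404
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Normalize whitespace and case before checking length and phrases.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    words = text.split()
    if not words:
        return True  # blank content served with a 200 OK status
    return len(words) < MIN_WORDS and any(p in text for p in ERROR_PHRASES)


print(looks_like_soft_404(200, "<html><body></body></html>"))  # True
print(looks_like_soft_404(200, "<h1>Page not found</h1>"))      # True
print(looks_like_soft_404(404, ""))                             # False
```

A real audit would feed this the status code and HTML from your own crawler; the point is simply that the status code alone (200) says nothing is wrong, so the content has to be inspected.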

The Coverage report also surfaces other errors, such as:

- Pages labeled “noindex”.

- Pages with redirects.

- Pages that have been crawled but not currently indexed.

- Crawl anomalies (which will require you to dig deeper to figure out what’s causing the crawl issue).

- And other significant issues.

If you are experiencing ranking and indexation problems, these sections will help you diagnose problem areas on your site that stem from mismanagement.

In addition, you can find deeper issues when you uncheck “Error,” “Valid with warnings,” and “Valid.”

Make sure “Excluded” is checked.

When using this data, always pay attention to your indexed pages.

Say your site has 1,343 pages, but the indexed page count reads double that number (2,686).

You may have an indexing problem caused by a site search plugin that generates a new URL for every search a visitor runs, which can add up to hundreds of thousands of URLs.

As you can imagine, this is not a good thing.

It can cause serious duplicate content issues, which in turn can lead to serious ranking problems.
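The comparison is simple enough to automate. Below is a minimal Python sketch, assuming you have a list of indexed URLs (exported from GSC or from your own crawl) and know your real page count; it computes the indexed-to-actual ratio and counts URLs that look like internal search results. The `SEARCH_PARAMS` set is an assumption: WordPress core search uses `?s=`, but your plugin may use a different parameter.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical: query parameters a site-search plugin might add.
# WordPress core search uses "s"; check what your own plugin generates.
SEARCH_PARAMS = {"s", "q", "search"}


def is_search_result_url(url: str) -> bool:
    """True if the URL's query string contains one of SEARCH_PARAMS."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return bool(SEARCH_PARAMS.intersection(params))


def index_bloat_report(indexed_urls, expected_pages: int) -> dict:
    """Compare indexed URLs against the real page count and count search-result URLs."""
    return {
        "indexed": len(indexed_urls),
        "expected": expected_pages,
        "ratio": round(len(indexed_urls) / expected_pages, 2),
        "search_result_urls": sum(is_search_result_url(u) for u in indexed_urls),
    }


# Synthetic example mirroring the numbers above: 1,343 real pages
# plus 1,343 indexed internal-search URLs on a hypothetical example.com.
pages = [f"https://example.com/page-{i}/" for i in range(1343)]
searches = [f"https://example.com/?s=term-{i}" for i in range(1343)]
print(index_bloat_report(pages + searches, expected_pages=1343))
# {'indexed': 2686, 'expected': 1343, 'ratio': 2.0, 'search_result_urls': 1343}
```

A ratio well above 1.0 combined with a large `search_result_urls` count points toward the site search plugin as the likely source of the extra indexed pages.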

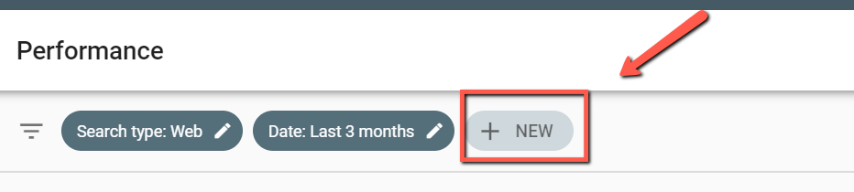

3. Perform Deeper Keyword Analysis on Ranking Pages

Did you know that you can dig deeper and find which of your pages rank for a specific query?

And that you can then plan performance fixes and improvements based on that data?

Yep.

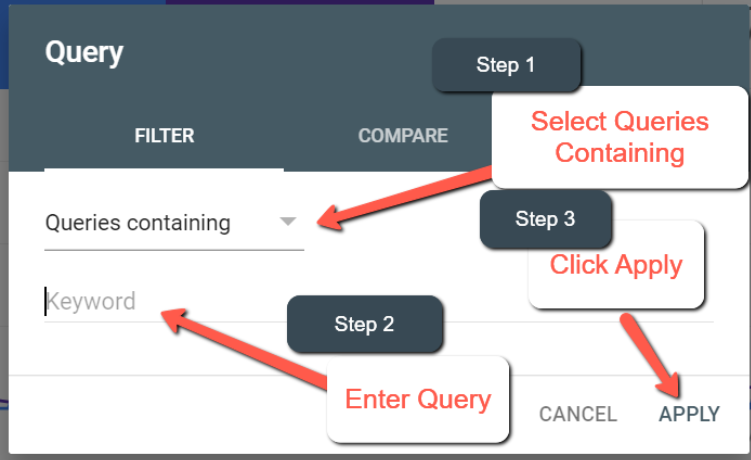

Go to Performance > + NEW, and choose the information you want to uncover.

Let’s say we want to find the pages that Google actually ranks for a specific query.

Click Query, then enter the query you want to analyze and apply the filter.

The next screen will display data for that query, including all the pages that rank for it.

If none show up, you may not be ranking for that query.

You can also toggle between the Pages, Countries, Devices, and Search Appearance tabs.

Diving deeper into these views will help you assess which pages on your site you want to improve, and why.

It can also help you tailor the search experience on your site to the queries and pages that matter to your users.

This is a great way to find keyword data that actually benefits you, because it grounds your strategic decisions in how your pages really perform.
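The same query-then-pages drill-down is also available programmatically through the Search Console API’s Search Analytics `query` method. The minimal Python sketch below only builds the request body and summarizes response rows, so it runs without credentials; the helper names, the query, the dates, and the sample response are hypothetical. Sending the body would use google-api-python-client, roughly `service.searchanalytics().query(siteUrl=..., body=body).execute()`, with an authorized `service` object not shown here.

```python
def build_pages_for_query_body(query, start_date, end_date, row_limit=100):
    """Request body for searchanalytics.query: pages ranking for one exact query."""
    return {
        "startDate": start_date,  # YYYY-MM-DD
        "endDate": end_date,
        "dimensions": ["page"],   # one row per ranking page
        "dimensionFilterGroups": [{
            "filters": [{
                "dimension": "query",
                "operator": "equals",
                "expression": query,
            }]
        }],
        "rowLimit": row_limit,
    }


def summarize_rows(response):
    """Return (page, clicks, impressions, position) tuples, best position first."""
    rows = response.get("rows", [])  # no "rows" key: no data, e.g. not ranking
    summary = [
        (row["keys"][0], row["clicks"], row["impressions"], round(row["position"], 1))
        for row in rows
    ]
    return sorted(summary, key=lambda item: item[3])


body = build_pages_for_query_body("blue widgets", "2020-06-01", "2020-06-30")

# Hypothetical sample response, shaped like the API's rows (not real data).
sample = {"rows": [
    {"keys": ["https://example.com/widgets/"], "clicks": 12, "impressions": 340,
     "ctr": 0.035, "position": 8.4},
    {"keys": ["https://example.com/blue/"], "clicks": 30, "impressions": 500,
     "ctr": 0.06, "position": 3.26},
]}
print(summarize_rows(sample))
# [('https://example.com/blue/', 30, 500, 3.3), ('https://example.com/widgets/', 12, 340, 8.4)]
```

Swapping `"page"` in `dimensions` for `"country"`, `"device"`, or `"searchAppearance"` mirrors the other tabs in the Performance report.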

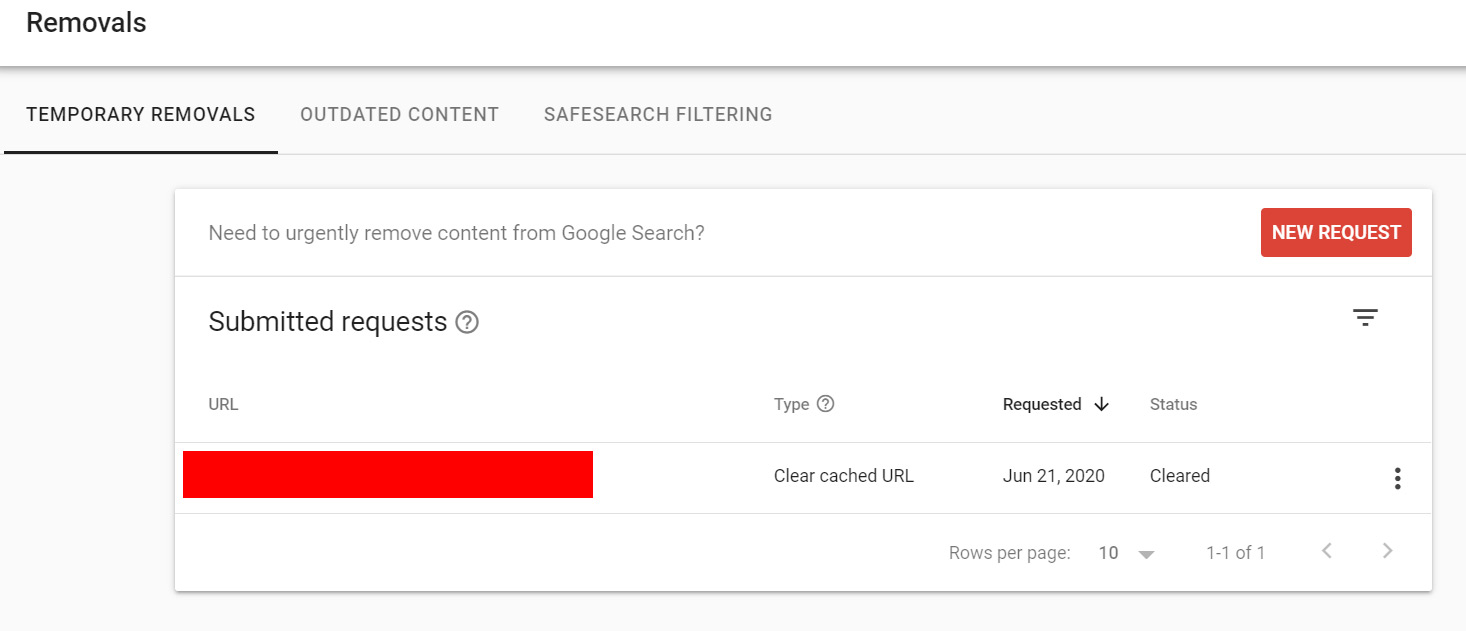

4. URL Removal Tool

Use this tool with extreme caution, or you risk causing severe sitewide issues.

You don’t want to remove a URL that was actually driving traffic or rankings.

On the flip side, you do want to remove problematic URLs that are no longer valuable, have outdated content, or cannot realistically be improved within your current SEO efforts.

In short, if you are unsure of what you are doing, it’s best to ask a seasoned professional before you screw up your rankings beyond repair.

Be warned: this tool is not for the faint of heart. Or the inexperienced.

5. Context: The Last Hidden Gem of GSC

One last hidden gem in Google Search Console in your quest to identify and correct website errors is understanding the real-world context of the problems reported by the tool.

Indexation reports can reveal problems like too many indexed pages, but make sure another site or design issue isn’t interfering with the results.

Find out about these issues by performing your due diligence and investigating problems you aren’t sure about.

Ask questions.

And follow up with whoever manages these types of issues on your site if there are major problems.

Context can mean the difference between getting issues fixed on time and within budget or just creating more problems.

As you learn the intricacies of GSC, it will become easier to use, and you will be able to identify issues more quickly.

These suggestions are just the tip of the iceberg.

But they can help you identify some serious site issues without spending money on additional tools.


Image Credits

All screenshots taken by author, July 2020