In November I wrote a post explaining how just one line of code could destroy your SEO. It underscored the fact that hidden dangers can sometimes kill your SEO efforts. It also explained how a thorough audit can reveal those issues and get your site back on track SEO-wise. Well, I’m back with a new post about audits and SEO gremlins. As part of this post, I’m going to include information about one of my favorite tools, one that I’ve used for a long time: Xenu Link Sleuth.

In this post, I’m going to explain the power of a test crawl when auditing a website. You can learn a lot by crawling a website, and I’m going to focus on three hidden dangers that a tool like Xenu can uncover. And since the information is actionable, you just might be running to your development team once the crawl is done.

Xenu Link Sleuth – Free, Simple, and Powerful

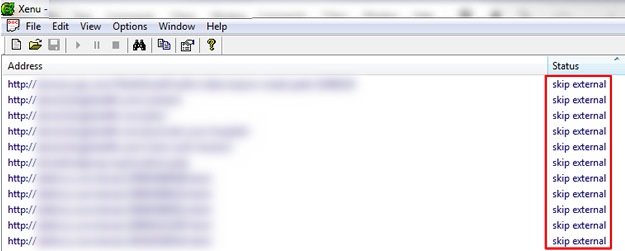

I’m going to focus on Xenu Link Sleuth for running a test crawl, although Screaming Frog is another solution you can try (its full version is paid). I’ve been using Xenu for years, and it’s a great tool for spidering a website. Xenu returns a wealth of information, including status codes, server errors, inbound links, external links, and page metadata. As part of auditing a website, it’s always a good idea to run a test crawl. You never know what you’re going to find.

Note that this post isn’t meant to be a deep Xenu tutorial covering all of its functionality. Instead, my goal is to show you three things you can uncover during a test crawl that could be hurting your SEO efforts. Let’s take a look at what Xenu can find after you unleash it on your website.

3 SEO Dangers Xenu Can Uncover:

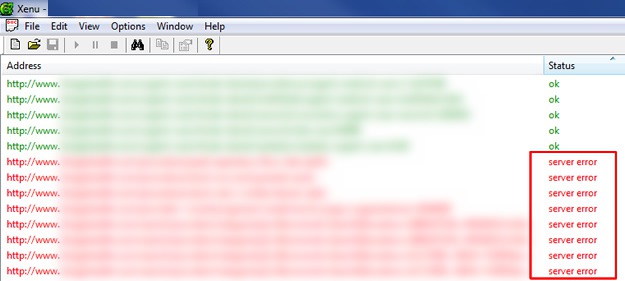

1. Server Errors

There are times I run Xenu on a medium-sized or large site and the report comes back with hundreds (or thousands) of server errors. As you can imagine, having Googlebot encounter thousands of server errors isn’t a good thing. The crazy part is that clients often don’t know this is happening, and that’s especially true with larger sites.

In addition, those server errors are sometimes occurring on pages the client didn’t even know existed. Confused? They were too. In a recent audit, a glitch in the CMS was causing it to dynamically generate URLs. Those URLs threw server errors, and Googlebot was finding them too. Not good.
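If you export a crawl report, a few lines of script can help you spot patterns like that CMS glitch. The sketch below is illustrative only and is not part of Xenu: it assumes the crawl results have been reduced to hypothetical `(url, status)` pairs (map your tool’s actual export columns onto that shape), pulls out every 5xx response, and counts them per top-level directory so that a cluster of generated URLs stands out.

```python
from collections import Counter
from urllib.parse import urlsplit


def summarize_server_errors(rows):
    """Collect 5xx responses from crawl results and count them per top-level directory.

    `rows` is an iterable of (url, status) pairs, a hypothetical shape for an
    exported crawl report; adapt it to your crawler's real export columns.
    """
    errors = []
    by_section = Counter()
    for url, status in rows:
        status = int(status)  # CSV exports usually give status codes as strings
        if 500 <= status <= 599:
            errors.append((url, status))
            # Group by the first path segment, e.g. /products/ for /products/a?sort=1
            segments = [s for s in urlsplit(url).path.split("/") if s]
            section = "/" + segments[0] + "/" if segments else "/"
            by_section[section] += 1
    return errors, by_section


# Example crawl results (hypothetical URLs)
rows = [
    ("https://example.com/", "200"),
    ("https://example.com/products/a?sort=1", "500"),
    ("https://example.com/products/a?sort=2", "500"),
    ("https://example.com/blog/post", "503"),
]
errors, by_section = summarize_server_errors(rows)
print(by_section.most_common())  # [('/products/', 2), ('/blog/', 1)]
```

Grouping by directory is just one simple heuristic; grouping by query parameter can work better when a CMS is appending junk parameters to otherwise valid URLs.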

2. 404s

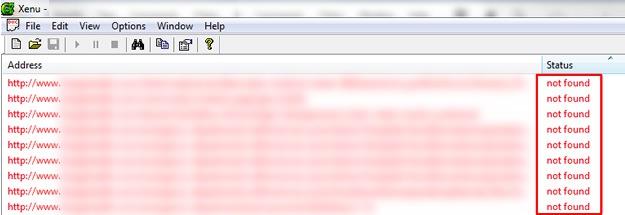

If you’re not familiar with HTTP response (status) codes, a 404 means “Page Not Found”. For SEO, you want to hunt down 404s for several important reasons. For example, are powerful pages on your site returning 404s? Why is that happening? And how much search equity are you losing as a result? A search engine will eventually drop a page that returns a 404 from its index, and you could lose the value of inbound links pointing to that page. As you can imagine, fixing pages that return 404s when they shouldn’t is an important SEO task.

On the flip side, if pages have been removed correctly (and are returning 404s), the last thing you want to do is drive Googlebot to a “Page Not Found” via your own internal linking structure. That’s a waste of a link on your site (and it’s bad for usability as well). Using Xenu, you can easily view 404s in the reporting, export that report, and then work with your developers on fixing navigation issues.
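Once you have the exported data, the fix list for developers is essentially “which pages link to URLs that return 404”. Here’s a minimal sketch of that step in Python. It assumes you already have the crawled links as hypothetical `(source_url, target_url)` pairs and a URL-to-status mapping (the function name and data shapes are mine, not Xenu’s), and it ignores external links because they aren’t part of your internal linking structure.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def internal_links_to_404s(links, statuses, site_host):
    """Map each source page to the internal URLs it links to that return 404.

    `links` is an iterable of (source_url, target_url) pairs and `statuses`
    maps URL -> HTTP status code; both are hypothetical shapes for crawl data.
    """
    broken = defaultdict(list)
    for source, target in links:
        if urlsplit(target).hostname != site_host:
            continue  # external links are not part of your internal linking structure
        if statuses.get(target) == 404:
            broken[source].append(target)
    return dict(broken)


statuses = {
    "https://example.com/": 200,
    "https://example.com/about": 200,
    "https://example.com/old-page": 404,
    "https://other.example.org/gone": 404,
}
links = [
    ("https://example.com/", "https://example.com/old-page"),
    ("https://example.com/", "https://example.com/about"),
    ("https://example.com/about", "https://example.com/old-page"),
    ("https://example.com/", "https://other.example.org/gone"),
]
result = internal_links_to_404s(links, statuses, "example.com")
for source, targets in result.items():
    print(source, "->", targets)
```

Grouping by source page maps naturally onto the work: each entry is a template or page where a developer needs to update or remove a link.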

3. Weird (and Potentially Dangerous) Outbound Links

Let’s focus on a more sinister issue for a minute. Unfortunately, hackers are continually trying to infiltrate websites, CMS packages, etc. to benefit their own sites (or their clients’ sites).

Here’s a simple question for you. Do you know all of the pages you are linking to from your website? Are you 100% sure you know? Are you hesitating? 🙂

For larger sites in particular, tracking down all outbound links isn’t a simple task. If your server or CMS package was infiltrated, and outbound links were injected into your site, it can be hard to manually find those links in a short period of time (if you’re even lucky enough to know this is going on).

There are times I run a test crawl and find some crazy outbound links to less-than-desirable websites. And as you can guess, those links use keyword-rich anchor text that can boost the rankings of the sites they point to. Worse, some website owners have no idea this is going on. Xenu can pick up these external links and provide reporting that you can analyze.
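To show what that kind of check involves, here’s a rough sketch that pulls outbound links and their anchor text out of a single page’s HTML using Python’s built-in `html.parser`. It’s an illustration, not how Xenu works internally: the class and function names are hypothetical, “outbound” is assumed to mean any host other than the page’s own, and `allowed_hosts` is a hypothetical list of sites you know you link to. Note that links hidden with CSS (a common trick with injected links) are still found, because the parser reads the raw markup.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class OutboundLinkParser(HTMLParser):
    """Collect (absolute_href, anchor_text) for links pointing off the page's host."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.site_host = urlsplit(page_url).hostname
        self.links = []
        self._href = None  # href of the <a> tag currently open, if any
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = urljoin(self.page_url, href)  # resolve relative links
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            host = urlsplit(self._href).hostname  # None for mailto:, javascript:, etc.
            if host and host != self.site_host:
                text = " ".join("".join(self._text).split())  # collapse whitespace
                self.links.append((self._href, text))
            self._href = None


def find_outbound_links(html, page_url, allowed_hosts=()):
    """Return outbound links whose host is not in the (hypothetical) allowlist."""
    parser = OutboundLinkParser(page_url)
    parser.feed(html)
    parser.close()
    return [(href, text) for href, text in parser.links
            if urlsplit(href).hostname not in allowed_hosts]


html = """<p>Read our <a href="/about">about page</a>.</p>
<a href="https://partner.example.org/">Partner</a>
<div style="display:none"><a href="http://spam.example.net/pills">cheap   pills online</a></div>"""
suspicious = find_outbound_links(html, "https://example.com/blog/post",
                                 allowed_hosts={"partner.example.org"})
print(suspicious)  # [('http://spam.example.net/pills', 'cheap pills online')]
```

A strict host comparison treats `www.example.com` and `example.com` as different sites, so you may want to normalize hosts before comparing.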

Summary – Crawling To Action

Running a test crawl on a website is a smart task to complete on a regular basis. A tool like Xenu can uncover several actionable findings, and at a very low cost (Xenu is free). Finding these simple yet destructive problems can help webmasters improve the SEO health of their websites. And that’s exactly the goal of an SEO audit.

Again, I just covered a few insights you can glean from a test crawl. Try it out for yourself today. As I mentioned earlier, you never know what you’re going to find.