I have already shared my opinion on the various Robots.txt checkers and verifiers: be aware of them, but use them with caution. They are full of errors and misinterpretations.

However, I came across a really good one the other day: robots.txt checker. I found it useful first and foremost for self-education.

Checks are done considering the original 1994 document A Standard for Robot Exclusion, the 1997 Internet Draft specification A Method for Web Robots Control and nonstandard extensions that have emerged over the years.

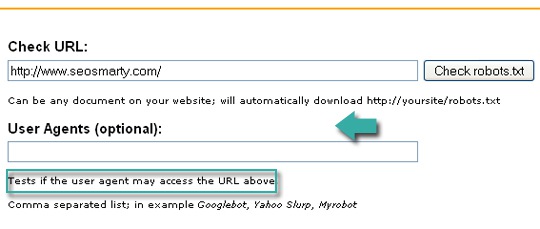

The tool lets you run both a general check and a user-agent-specific analysis:

Translate Robots.txt File Easily

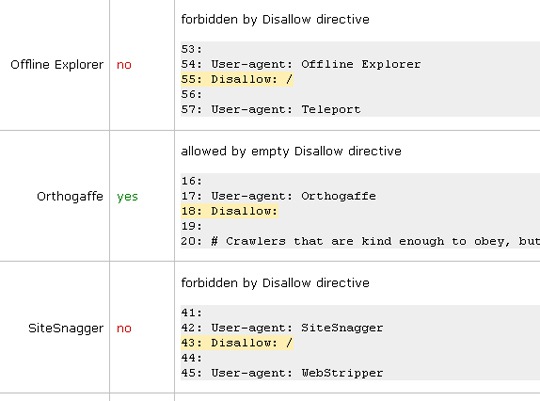

The tool does a good job of “translating” the Robots.txt file into easy-to-understand language.

Here’s an example of it explaining the default Robots.txt file:

allowed by empty Disallow directive
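To see why an empty Disallow directive allows everything, here is a minimal sketch using Python's standard `urllib.robotparser` module. It is not how the checker itself works, just an independent way to confirm the same reading; the bot name `AnyBot` and the sample path are made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, user_agent, path):
    """Parse robots.txt lines and report whether user_agent may fetch path."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, path)


# The "default" file: an empty Disallow value blocks nothing.
default_rules = ["User-agent: *", "Disallow:"]

# For contrast: a single slash blocks the whole site.
block_all_rules = ["User-agent: *", "Disallow: /"]

# "AnyBot" and the path are hypothetical values for illustration.
print(is_allowed(default_rules, "AnyBot", "/any/page.html"))
print(is_allowed(block_all_rules, "AnyBot", "/any/page.html"))
```

The first call reports that the page may be fetched, the second that it may not, which is the difference one missing slash makes.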

The tool is also good at organizing the Robots.txt file by breaking it into sections based on the user agent:

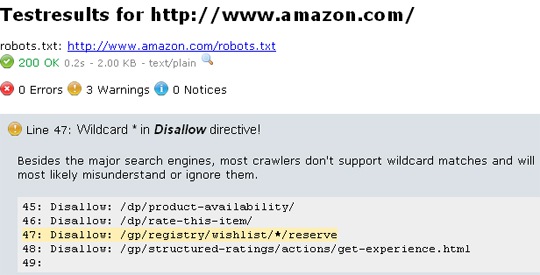

Be Aware of Warnings

The tool warns you about some essential issues, for example that search engines might treat a wildcard in the Disallow directive differently from one another.
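The wildcard warning matters because the original 1994 standard treats a Disallow value as a plain path prefix, while wildcard support is a later extension that not every crawler honors. The sketch below contrasts the two readings. Both function names are hypothetical, and the wildcard logic is a simplified illustration (`*` matches any run of characters, a trailing `$` anchors the end), not any particular search engine's implementation.

```python
import re


def matches_literal_prefix(rule_path, url_path):
    """1994-style reading: the rule is a plain prefix; '*' is an ordinary character."""
    return url_path.startswith(rule_path)


def matches_with_wildcards(rule_path, url_path):
    """Extension-style reading: '*' matches any characters, trailing '$' anchors the end."""
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape the literal pieces and join them with '.*' where '*' appeared.
    pattern = ".*".join(re.escape(part) for part in rule_path.split("*"))
    if anchored:
        pattern += "$"
    return re.match(pattern, url_path) is not None


rule = "/*.pdf"              # as in "Disallow: /*.pdf"
path = "/files/report.pdf"   # sample URL path, made up for illustration

print(matches_literal_prefix(rule, path))   # crawler ignoring wildcards
print(matches_with_wildcards(rule, path))   # crawler supporting wildcards
```

Under the literal reading the rule does not match, so the PDF stays crawlable; under the wildcard reading it is blocked. The same line can therefore behave differently depending on who reads it.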

All in all, I found the tool basic yet useful enough, and I would recommend it to anyone learning Robots.txt syntax or looking for an easier way to organize the rules in the file.