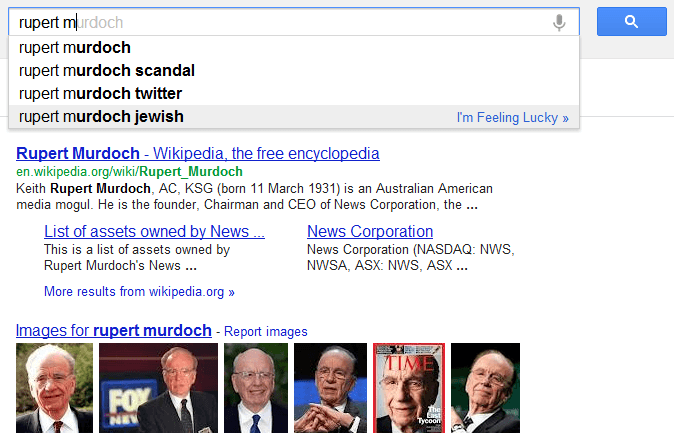

Google’s autocomplete feature, the target of many legal challenges over the past several years, now faces a new lawsuit in a French court over the search suggestions it offers for Rupert Murdoch. When a Google user types “Rupert Murdoch,” the search engine suggests completing the query as “Rupert Murdoch Jewish.” The lawsuit, filed by SOS Racisme, accuses Google of mislabeling celebrities, connecting people to an often persecuted religion, and creating the “biggest Jewish file in history.”

On the webpage describing the autocomplete algorithm, Google states that the algorithm determines autocomplete content without human intervention:

“Just like the web, the search queries presented may include silly or strange or surprising terms and phrases. While we always strive to algorithmically reflect the diversity of content on the web (some good, some objectionable), we also apply a narrow set of removal policies for pornography, violence, hate speech, and terms that are frequently used to find content that infringes copyrights.”

Beyond the Rupert Murdoch queries, Google’s autocomplete feature has faced several other legal battles. In one case, the autocomplete algorithm associated a Japanese man’s name with crimes he did not commit. Although he ultimately won the cyber-defamation case in a Japanese court, the feature tarnished his reputation and cost him several job opportunities. Google was also fined $65,000 last December by a French court for an autocomplete suggestion that hurt the reputation of an insurance company by adding the French word for “crook” to the end of the company’s name.

Because Google already filters certain terms related to pornography, racism, and violence, it evidently has the technology to apply filters to other types of queries. Is it time for Google to update its autocomplete algorithm to prevent reputation damage and ensure compliance with each country’s laws?

The initial lawsuit hearing is scheduled to take place in a French court on Wednesday.