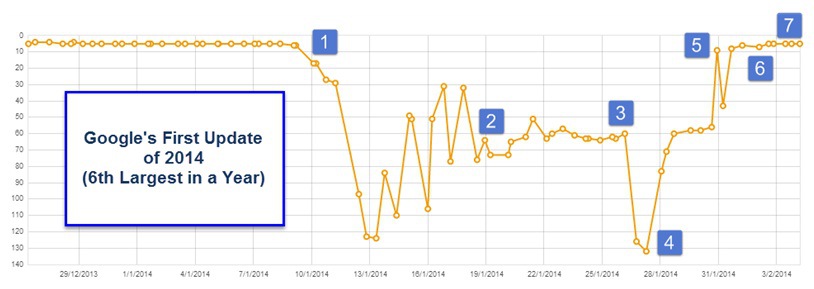

If you track your own websites, you may have noticed a Google update that rolled out on the 8th of January and has been causing large fluctuations in the SERPs ever since. This was the 6th largest update in a year according to DejanSEO, and hundreds of webmasters can’t stop talking about it over at SERoundTable and WebmasterWorld.

Personally, a site of mine was set back nearly 130 spots. It dropped from rank 5 to rank 131, and over the course of 3 weeks, I continually tested in order to understand what the update had done. After some minor changes, I successfully pushed my site back up all the way to rank 5 again.

Here is a quick summary of the timeline of events:

- January 9th – Site was hit by the penalty and dropped from rank 5 to rank 124.

- January 19th – Investigation led me to have a PR0 exact match anchor text link removed.

- January 26th – Monitored the results and actually saw a further drop of about 60 places since the link was removed. Fell to rank 131 at this point.

- January 28th – Decided to change the URL to avoid using my exact keyword. 301 redirected the old URL to the new one so that existing link juice would flow through.

- January 30th – Huge jump back to page 1, woohoo!

- February 1st – Site climbs to rank 6

- February 4th – Site climbs further up to rank 5

See here for more details on the timeline of events on my website.

Thankfully I documented all my steps and, reflecting back, learnt some valuable lessons. Let’s see what we can all take away from this.

Content Isn’t King

A distinction needs to be made between when content is actually king and when it’s not. Too often people assume that if they have great content they’ll automatically rank for their terms and never get hit by any penalty whatsoever. Wrong. I had a quality page up with beautifully crafted text and Shutterstock-worthy images (I even took these myself using my girlfriend’s DSLR), and I still got hit. Unfortunately Google isn’t yet sophisticated enough to tell how well written your content is (although it’s getting there).

Content is king, however, for social sharing, building a brand, improving visitors’ time on site and so on. I’m a huge fan of good content, but unfortunately it doesn’t make you immune to penalties.

301 Redirects Pass All Link Juice

Although Matt Cutts has already come out and publicly stated that all link juice is passed through a 301 redirect, I still see this question asked a lot. My site leapfrogged up nearly 130 spots to page 1 and there was no way this could have happened without having all that link juice flowing from my newly redirected backlinks.
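For anyone who hasn't set one up before, here is a minimal sketch of what a 301 redirect does at the HTTP level, using only Python's standard library. The two paths in `REDIRECTS` are made up for illustration (they are not my real URLs), and on a real site you would normally configure this in your web server or CMS rather than in a script like this.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical old -> new paths, for illustration only.
REDIRECTS = {"/exact-keyword-page/": "/new-page/"}


def resolve(path):
    """Return (status, location) for a request path."""
    target = REDIRECTS.get(path)
    if target is not None:
        # 301 = Moved Permanently; Location tells the client where to go.
        return 301, target
    return 200, None


class RedirectHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        status, location = resolve(self.path)
        self.send_response(status)
        if location is not None:
            self.send_header("Location", location)
            self.end_headers()
            return
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.end_headers()
        self.wfile.write(b"page content\n")


def serve(port=8000):
    """Run the demo server locally until interrupted."""
    HTTPServer(("127.0.0.1", port), RedirectHandler).serve_forever()
```

Calling `serve()` and requesting the old path should return a `301` status with a `Location` header pointing at the new path, which is the signal that tells crawlers the page has moved permanently.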

301 Redirects Pass Link Juice Immediately

I recently read a blog post claiming that link juice takes time to be passed through a 301 redirect. Based on what this test shows, this is also a myth. Only 2 days passed between setting up my 301 redirect and sitting on page 1. The juice from the redirect must have taken effect almost instantaneously.

The Update Was Not Penguin Related

Forums are oftentimes the first place to find breaking news. At the same time, forums are probably the best place to start a rumor.

A thread started brewing in the WebmasterWorld Forum with many people claiming a Penguin update. I tested this by having all exact match anchor text links pointing to my site removed (luckily there was only 1, otherwise contacting all those webmasters would have been quite a task). As you know from looking at the timeline of events, I saw zero improvement, and in fact dropped a further 60 places a few days later.

Google is Lenient

I was quite surprised by how significant the improvements were after simply changing the page URL. All this happened within a few days of making the changes, which is incredibly fast. What this tells me is that Google is actually quite lenient when it comes to this specific penalty.

Don’t Over-Optimize Your Header Tags

Only a few months ago I was seeing great results from having my exact keyword in the header tags (title, h1, URL). Things change quickly in SEO though, and this test indicates to me that we now want to avoid overusing the exact keyword there. What seems to work better now are variations of your keyword, almost as if you had no idea what keyword you were targeting and it simply came out naturally that way. That brings me to our final point…

Biggest Takeaway: Keep Everything Natural

If there’s one thing to learn from this, it’s to keep your site looking as natural as possible – almost as if you knew nothing about SEO. With the new Hummingbird update, Google is able to understand the meaning behind sentences, and because of that, you don’t need to worry about stuffing your keyword in every tag. Instead, use variations and synonyms of your keyword to create that natural effect.
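If you want a quick way to spot the kind of repetition I'm talking about, the sketch below reports which of the title, h1 and URL contain the exact keyword phrase. It is a rough self-check of my own, not anything Google has published, and the function names and example values are purely hypothetical.

```python
import re
from urllib.parse import urlparse


def normalize(text):
    """Lowercase and reduce any run of non-alphanumerics to a single space."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def exact_match_spots(keyword, title, h1, url):
    """Return which of title, h1 and URL path contain the exact keyword phrase."""
    # Pad with spaces so only whole-word matches of the phrase count.
    phrase = f" {normalize(keyword)} "
    fields = {"title": title, "h1": h1, "url": urlparse(url).path}
    return [
        name
        for name, value in fields.items()
        if phrase in f" {normalize(value)} "
    ]
```

If the returned list contains all three of `title`, `h1` and `url`, that is the pattern my page had before I changed the URL, so it may be worth swapping in a variation or synonym in at least one of them.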

I realize that it may be hard for some SEOs to determine what’s natural anymore, because sometimes it goes against everything we’ve learnt. So, here are a few more tips you can use to keep your site looking natural in Google’s eyes:

- Don’t link hoard. Link out to others when it makes sense

- Have a Privacy Policy and Terms page

- Have a thorough About Us page

- Have active Social Media accounts linked to your site

- Have a branded domain name

There is a lot we can learn from this first update of 2014, and if we approach future updates with the same mindset, we’ll have no reason to be afraid of any algorithm changes.