An amusing thread has been running over at WebmasterWorld, discussing how to prevent Google from ranking robots.txt files in SERPs.

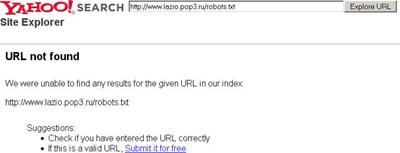

Google currently indexes 62,100 robots.txt files. Many of them have decent PageRank, while others have no backlinks at all (according to Yahoo Site Explorer, at least).

The irony is that:

- you can’t use robots.txt to block robots.txt (that would be truly insane: a crawler would have to fetch the file in order to learn that it is not allowed to fetch it);

- you can’t use meta tags in a robots.txt file;

- you can’t remove the file using Google Webmaster Tools, because that requires you to block it in robots.txt, add a noindex meta tag (neither of which is possible here), or return a 404 status, which is also impossible because the file actually exists.

According to the forum member:

At any rate, this does bring up the crazy question, how can you remove a robots.txt file from Google’s index? If you use robots.txt to block it, that would mean that googlebot should not even request robots.txt – an insane loop. And of course, you don’t use meta tags in a robots.txt file.

Interesting, isn’t it?

Another board member suggested using an X-Robots-Tag in the HTTP header:

    # Apache configuration; the Header directive requires mod_headers
    <FilesMatch "robots\.txt">
    Header set X-Robots-Tag "noindex, nofollow"
    </FilesMatch>

The solution looks pretty good, and it’s also nice that SEOs have finally started to see value in the X-Robots-Tag, which is rarely used.
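If you try this, it is worth checking that the header really is sent with the robots.txt response. Below is a minimal sketch of such a check in Python, not a definitive tool. The function names `has_noindex` and `robots_txt_is_noindexed` are made up for illustration, and the parsing is deliberately simplified: it treats a `noindex` directive addressed to any crawler as a match.

```python
from urllib.request import Request, urlopen


def has_noindex(header_values):
    """Return True if any X-Robots-Tag value carries a noindex directive.

    Simplification (an assumption of this sketch): a directive prefixed
    with a crawler name, e.g. "googlebot: noindex", counts as a match
    regardless of which crawler it names.
    """
    for value in header_values:
        for token in value.split(","):
            # Drop an optional "useragent:" prefix and normalize case.
            directive = token.split(":")[-1].strip().lower()
            if directive == "noindex":
                return True
    return False


def robots_txt_is_noindexed(site_url):
    """Request /robots.txt with HEAD and inspect its X-Robots-Tag headers."""
    request = Request(site_url.rstrip("/") + "/robots.txt", method="HEAD")
    with urlopen(request, timeout=10) as response:
        values = response.headers.get_all("X-Robots-Tag") or []
    return has_noindex(values)
```

For example, `robots_txt_is_noindexed("https://www.example.com")` (a placeholder URL) should return `True` once the rule above is active, assuming the server answers HEAD requests for that file.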

Another question is why on Earth you would need to keep your robots.txt file from being indexed and ranked (a much easier solution would be to remove the file completely). But that is beside the point here. The truth remains the same: webmasters should have, and be aware of, ways to hide any of their pages from search crawlers or keep them out of SERPs.